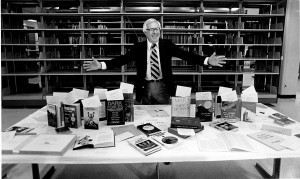

Ray Bradbury, one of the most influential and prolific science fiction writers of all time, has had a lasting impact on a broad array of entertainment culture. In his canon of books, Bradbury often emphasized exploring human psychology to create tension in his stories, which left the more fantastical elements lurking just below the surface. Many of his works have been adapted for movies, comic books, television, and the stage, and the themes he explores continue to resonate with audiences today. The notable 1966 classic film Fahrenheit 451 perfectly captured the definition of a futuristic dystopia, for example, while the eponymous television series he penned, The Ray Bradbury Theater, is an anthology of science fiction stories and teleplays whose themes greatly represented Bradbury’s signature style. ScriptPhD.com was privileged to cover one of Ray Bradbury’s last appearances at San Diego Comic-Con, just prior to his death, where he discussed everything from his disdain for the Internet to his prescient premonitions of many technological advances to his steadfast support for the necessity of space exploration. In the special guest post below, we explore how the latest Bradbury adaptation, the new television show The Whispers, continues his enduring legacy of psychological and sci-fi suspense.

Premiering to largely positive reviews, ABC’s new show The Whispers is based on Bradbury’s short story “Zero Hour” from his book The Illustrated Man. Both stories are about children finding a new imaginary friend, Drill, who wants to play a game called “Invasion” with them. The adults in their lives are dismissive of their behavior until the children start acting strangely and sinister events start to take place. Bradbury’s story is a relatively short read with just a few characters that ends on a chilling note, while The Whispers seeks to extend the plot over the course of at least one season, and to that end it features an expanded cast including journalists, policemen, and government agents.

The targeting of young, impressionable minds by malevolent forces is deeply disturbing, and it seems entirely plausible that parents would write off odd behavior or the presence of imaginary friends as a simple part of growing up. In both the story and the show, the adults do not realize that they are dealing with a very real and tangible threat until it’s too late. The dread escalates when the adults realize that children who don’t know each other are all talking to the same person. The fear of the unknown, merely hinted at in Bradbury’s story, looks set to be exploited to great effect during the show’s run.

When “Zero Hour” was written in 1951, America was in the midst of McCarthyism and the Red Scare, and the fear of Communism and homosexuality ran rampant. As the tensions of the Cold War grew, so too did the anxiety that Communists had infiltrated American society, with many of the individuals most aggressively targeted for “un-American” activities belonging to the Hollywood filmmaking and writing communities. Bradbury’s story shares with many other fictions of the time period a healthy dose of paranoia and fear around possession and mind control. Indeed, Fahrenheit 451, a novel about a futuristic world in which books are banned, is also a parable about the dangers of censorship and political hysteria.

The makers of The Whispers recognize that these concerns still permeate our collective consciousness, and have updated the story with modern subject matter, expanding the subject of our apprehension to state surveillance and abuse of authority. To wit, many recent films and shows have explored similar themes amidst the psychological ramifications of highly developed artificial intelligence and its ability to control and even harm humans. Our concurrent obsession with and fear of technology run amok is another topic explored by Ray Bradbury in many of his later works. The idea that those around us are participating in a grand scheme that will bring us harm still sows seeds of discontent and mistrust in our minds. For that reason, the concept continues to be used in suspense television and cinema to this day.

One of the reasons Bradbury’s work continues to serve as the inspiration for so much contemporary entertainment is his use of everyday situations and human oddities to create intrigue. One such example is a 5-issue series called Shadow Show based on Bradbury’s work, released in 2014 by comic book publisher IDW. Some of the best artists and writers in comics agreed to participate in this project because Bradbury had such a huge influence on their writing and art. In this anthology, Harlan Ellison’s “Weariness” perfectly evokes Bradbury’s dystopia, with its main characters experiencing the universe’s end. The terrifying end of life as we know it is one of Bradbury’s recurring themes, depicted in writings like The Martian Chronicles. He rarely felt the need to set his stories in faraway galaxies or adorn them with futuristic gadgets. Most of his writing focused on the frightening aspects of humans in quotidian surroundings, such as in the 2002 novel Let’s All Kill Constance. Much of The Whispers takes place in an ordinary suburban neighborhood, and as in many of his stories, what seems at first to be a simple curiosity is hiding something much more complex and frightening.

Fiction that brings out the spookiness inherent in so many facets of human nature creates a stronger and more enduring bond with its audience, since we can easily deposit ourselves into the characters’ situations, and all of a sudden we find that we’re directly experiencing their plight on an emotional level. That’s always been, as Bradbury well knew, the key to great suspense.

Maria Ramos is a tech and sci-fi writer interested in comic books, cycling, and horror films. Her hobbies include cooking, doodling, and finding local shops around the city. She currently lives in Chicago with her two pet turtles, Franklin and Roy. You can follow her on Twitter @MariaRamos1889.

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

From a sci-fi and entertainment perspective, 2015 may well be nicknamed “The Year of The Robot.” Several cinematic releases have already explored various angles of futuristic artificial intelligence (from the forgettable Chappie to the mainstream blockbuster Marvel’s Avengers: Age of Ultron to the intelligent sleeper indie hit Ex Machina), with several more on the way later this year. Two television series premiering this summer, limited series Humans on AMC and Mr. Robot on USA, add thoughtful, layered (and very entertaining) discussions on the ethics and socio-economic impact of the technology affecting the age we live in. While Humans revolves around hyper-evolved robot companions, and Mr. Robot around a singular, shadowy, eponymous cyberhacking organization, both represent enthusiastic Editor’s Selection recommendations from ScriptPhD. Reviews and an exclusive interview with Humans creators/writers Jonathan Brackley and Sam Vincent below.

Never in human history have technology and its potential reached a greater permeation of and importance in our daily lives than at the current moment. Indeed, it might even be changing the way our brains function! With entertainment often acting as a reflection of socially pertinent issues and zeitgeist motifs, it’s only natural to examine the depths to which robots (or any artificial technology) might subsume human life. Will they take over our jobs? Become smarter than us? Nefariously affect human society? These fears about the emotional lines between humans and their technology are at the heart of AMC’s new limited series Humans. It is set in the not-too-distant future, where the must-have tech accessory is a ‘synth,’ a highly malleable, impeccably programmed robotic servant capable of providing any services – at the individual, family or macro-corporate level. It’s an idyllic ambition, fully realized. Busy, dysfunctional parents Joe and Laura obtain family android Anita to take care of basic housework and child rearing to free up time. Beat cop Pete’s rehabilitation android is indispensable to his paralyzed wife. And even though he doesn’t want a new synth, scientist George Millikan is saddled with a ‘care unit,’ Vera, by the Health Service to monitor his recovery from a stroke. Synths can pick fruit, clean up trash, work mindlessly in factories and sweatshops, make meals, even provide service in brothels – an endless range of servile labor that we are uncomfortable with or unwilling to do ourselves.

Humans brilliantly threads the problems of this artificial intelligence narrative through multiple interweaving story lines. Anita may be the perfect house servant to Joe, but her omniscience and omnipresence border on creepiness to wife Laura (and by proxy, the audience). Is Dr. Millikan (who helped craft the original synth technology) right that you can’t recycle them the way you would an old iPhone model? Or is he naive for loving his synth Odi like a son? And even if you create a Special Technologies Task Force to handle synth-related incidents, guaranteeing no harm to humans and minimal, if any, malfunctions, how can there be no nefarious downside to a piece of technology? They could, in theory, be obtained illegally and reprogrammed for subversive activity. If the original creator of the synths wanted to create a semblance of human life – “They were meant to feel,” he maintains – then are we culpable for their enslaved state? Should we feel relieved to see a synth break out of the brothel she’s forced to work in, or another mysterious group of synths that have somehow become sentient unite clandestinely to dream of a dimension where they’re free?

In reality, we already are in the midst of an age of artificial intelligence – computers. Powerful, fast, already capable of taking over our workforce and reshaping our society, they are the amorphous technological preamble to more specifically tailored robots, incurring all of the same trepidation and uncertainty. Mr. Robot, one of the smartest TV pilots in recent memory, is a cautionary tale about cyberhacking, socioeconomic vulnerability and the sheer reliance our society unknowingly places on computers. Its central themes are physically embodied in the central character of Elliot, a brilliant cybersecurity engineer by day/vigilante cyberhacker by night, battling schizophrenia and extreme social anxiety. To Elliot, the ubiquitous nature of computer power is simultaneously appealing and repulsive. Everything is electronic today – money, corporate transactions, even the way we communicate socially. As a hacker, he manipulates these elements with ease to get close to people and to solve injustice (carrying a Dexter-style digital cemetery of his conquests). But as someone who craves human contact, he loathes the way technology has deteriorated human interaction and encouraged nameless, faceless corporate greed.

Elliot works for Allsafe Security, whose biggest client is an emblem of corporate evil and economic indifference. When the client is hacked, Elliot discovers that it’s a private digital call to arms by a mysterious underground group called Mr. Robot (resembling the cybervigilante group Anonymous). They’ve hatched a plan to launch a wide-scale economic cyber attack that will result in the single biggest redistribution of wealth and debt forgiveness in history, and recruited Elliot into their organization. The question, and intriguing premise of the series, is whether Elliot can juggle his clean-cut day job, subversive underground hacking and protecting society one cyberterrorist act at a time, or if it will all collapse under the burden of his conscience and mental illness.

Humans is a preview of the inevitable future, albeit one that may be creeping up on us faster than we want it to. Even if hyper-advanced artificial intelligence is not an imminent reality and our fears might be overblown, the impact of technology on economics and human evolution is a reality we will have to grapple with eventually, and one that must inform the bioethics of any advanced sentient computing technology we create and release into the world. Mr. Robot is a stark reminder of our present: that cyberterrorism is the new normal, that its global impact is immense, and that (as in the case of robots) our advancement of and reliance on technology is outpacing humans’ ability to control it.

ScriptPhD.com was extremely fortunate to chat directly with Humans writers Jonathan Brackley and Sam Vincent about the premise and thematic implications of their show. Here’s what they had to say:

ScriptPhD.com: Is “Humans” meant to be a cautionary tale about the dangers of complex artificial intelligence run amok or a hypothetical bioethical exploration of how such technology would permeate and affect human society?

Jonathan and Sam: Both! On one level, the synths are a metaphor for our real technology, and what it’s doing to us, as it becomes ever more human-like and user-friendly – but also more powerful and mysterious. It’s not so much hypothesising as it is extrapolating real world trends. But on a deeper story level, we play with the question – could these machines become more, and if so, what would happen? Though “run amok” has negative connotations – we’re trying to be more balanced. Who says a complex AI given free rein wouldn’t make things better?

SPhD: I found it interesting that there’s a tremendous range of emotions in how the humans related to and felt about their “synths.” George has a familial affection for his, Laura is creeped out/jealous of hers while her husband Joe is largely indifferent, policeman Peter grudgingly finds his synth to be a useful rehabilitation tool for his wife after an accident. Isn’t this reflective of the range of emotions in how humans react to the current technology in our lives, and maybe always will?

J&S: There’s always a wide range of attitudes towards any new technology – some adopt enthusiastically, others are suspicious. But maybe it’s become a more emotive question as we increasingly use our technology to conduct every aspect of our existence, including our emotional lives. Our feelings are already tangled up in our tech, and we can’t see that changing any time soon.

SPhD: Like many recent works exploring Artificial Intelligence, at the root of “Humans” is a sense of fear. Which is greater – the fear of losing our flaws and imperfections (the very things that make us human) or the genuine fear that the sentient “synths” have of us and their enslavement?

J&S: Though we show that synths certainly can’t take their continued existence for granted, there’s as much love as fear in the relationships between our characters. For us, the fear of how our technology is changing us is more powerful – purely because it’s really happening, and has been for a long time. But maybe it’s not to be feared – or not all of it at least…

Catch a trailer and closer series look at the making of Humans here:

And catch the FULL first episode of Mr. Robot here:

Mr. Robot airs on USA Network with full episodes available online.

Humans premieres on June 28, 2015 on AMC Television (USA) and airs on Channel 4 (UK).

*****************


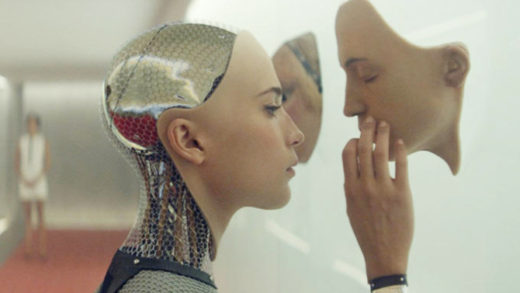

Every so often, a seminal film comes out that ends up being the hallmark of its genre. 2001: A Space Odyssey redefined space and technology in science fiction. Star Wars proved sci-fi could provide blockbuster material, while Blade Runner remains the standard-bearer for post-apocalyptic dystopia. A slate of recent films has broached varying scenarios involving artificial intelligence – from talking robots to sentient computers to re-engineered human capacity. But Ex Machina, the latest film from Alex Garland (writer of the pandemic horror film 28 Days Later and the astro-thriller Sunshine), is the cream of the crop. A stylish, stripped-down, cerebral film, Ex Machina weaves through the psychological implications of an experimental AI robot named Ava possessing preternatural emotional intelligence and free will. It’s a Hitchcockian sci-fi thriller for the geek chic gadget-bearing age, a vulnerable expository inquiry into the isolated meaning of “sentience” (something we will surely contend with in our time) and an honest reproach of technology’s boundless capabilities that somehow manages to celebrate them at the same time.

ScriptPhD.com’s enthusiastic review of this visionary new sci-fi film includes an exclusive Q&A with writer and director Alex Garland from a recent Los Angeles screening.

The preoccupation with superior artificial intelligence as a thematic idea is not a recent phenomenon. After all, Frankenstein is one of the pillars of science fiction – an ode to hypothetical human engineering gone awry. Terminator and its many offshoots gave rise to a generation of engineered robots, whether as saviors or disruptors of humanity. But over the last 16 months, a proliferation of films centered around the incorporation of artificial intelligence in the ongoing microevolution of humanity has signaled a mainstream arrival into the zeitgeist consciousness. 2014 ranged from the stylish and ambitious but ultimately overmatched digital reincarnation film Transcendence to the understated yet brilliant digital love story Her to the surprisingly smart Disney film Big Hero 6. This year amplifies that trend, with Avengers: Age of Ultron and Chappie marching out robots Elon Musk could only dream about, among many other later releases. Wedged in between is Ex Machina, taking its title from the Latin Deus Ex Machina (God from the machine), a film that prefers to focus on the bioethics and philosophy of scientific limits rather than the razzle-dazzle technology itself. There is still a tendency for sci-fi films concerning AI to engross themselves in presenting over-the-top science, with ensuing wholesale consequences based on theoretical technology, which ultimately hinders storytelling. Ex Machina is a simple story about two male humans and a highly advanced AI robot named Ava, confined in a remote space together, and the life-changing consequences that their interactions engender, as a parable for the meaning of humanity. It will be looked back on as one of the hallmark films about AI.

When computer programmer Caleb Smith (Domhnall Gleeson) wins an exclusive private week with his search engine company’s CEO Nathan Bateman (Oscar Isaac), it seems like a dream come true. He is helicoptered to the middle of a verdant paradise, where Nathan lives as a recluse in a locked-down, self-sufficient compound. What’s more, Nathan plans to let Caleb be the first to perform a Turing Test on an advanced humanistic robot named Ava (Alicia Vikander). (Incidentally, the technology of creating a completely new robot for cinema was a remarkable process, as the filmmakers discussed in-depth in the New York Times.) The opportunity sounds like every geek’s fantasy, but as the layers slowly peel back, it becomes apparent that Caleb’s presence is no accident; indeed, the methodology for how Nathan chose him is directly related to the engineering of Ava. Nathan is, depending on your viewpoint, at best a lonely eccentric and at worst an alcoholic lunatic.

For two thirds of the movie, tension is primarily ratcheted up through Caleb’s increasingly fraught interviews with Ava. She’s smart, witty, curious and clearly has a crush on him. Is something like Ava even possible? Depending on who you ask, maybe not or maybe it already happened. But no matter. The latter third of the movie provides one breathtaking twist after another. Who is testing and manipulating whom? Who is really the “intelligent being” of the three? Are we right to be cautious and fear AI, even stop it in its tracks before it happens? With seemingly anodyne versions of “helpful robots” already in existence, and social media looking into implementing AI to track our every move, it may be a matter of when, not if.

The brilliance of Alex Garland’s sci-fi writing is his understanding that understated simplicity drives (and even heightens) dramatic tension. Too many AI and techno-futuristic films collapse under the crushing weight of over-imagined technological aspirations, which leave little room for exploring the ramifications thereof. We start Ex Machina with the simple premise that a sentient, advanced, highly programmed robot has been made. She’s here. The rest of the film deftly explores the introspective “what now?” scenarios that scientists and bioethicists will grapple with should this technology come to pass. What is sentience, and can a machine even possess self-awareness? Is a machine’s desire to be free of the grasp of its creators wrong, and should we allow it? Most importantly, is disruptive AI already here in the amorphous form of social media, search history and private data collected by big technology companies?

The vast majority of Ex Machina consists of the triumvirate of Nathan, Caleb and Ava, toggling between scenes with Nathan and Caleb and scenes of Caleb interviewing (experimenting on?) Ava through a glass facade. The progression of intensity in the numbered interviews that comprise the Turing Test is probably the most compelling part of the whole film, and nicely sets up the shocking conclusion. In its themes of the human/AI dichotomy and dialogue-heavy tone, Ex Machina compares closely to last year’s brilliant sci-fi film Her, which rightfully won Spike Jonze the Oscar for Best Original Screenplay. In the latter, a lonely professional letter writer becomes attached to and eventually falls in love with his computer operating system Samantha. Eventually, we see the limitations of a relationship void of human contact and the peculiar idiosyncrasies that make us so distinct, the very elusive elements that Samantha seeks to know and understand. (Think this scenario is far off? Many tech developers feel it is not only inevitable but not too far away.) To that degree, both of these films show two kinds of sentient future technology chasing after human-ness, yearning for it, yet caution against a bleak future where they supplant it. But whereas Her does so in a sweetly melancholy sentimental fashion, Ex Machina plants a much darker, psychologically prohibitive conclusion.

It turns out that the most terrifying scenario isn’t a world in which artificial intelligence disrupts our lives through a drastic shift in technology. It’s a world in which technology seamlessly integrates itself into the lives we already know.

View the official Ex Machina trailer here:

Alex Garland, writer and director of Ex Machina, stayed after a recent Los Angeles press screening to give an insightful, profound question and answer session about the artificial intelligence as portrayed in the film and its relationship to the recent slew of works depicting interactive technology.

Ava [the robot created by Nathan Bateman] knew what a “good person” was. Why wasn’t she programmed by Nathan to just obey the commandments and not commit certain terrible acts that she does in the film?

AG: The same reason that we aren’t programmed that way. We are asked not to [sin] by social conventions, but no one instructs or programs us not to do it. And yet, a lot of us do bad things. Also, I think what you’re asking is – is Ava bad? Has she done something wrong? And I think that depends on how you choose to look at the movie. I’ve been doing stories for a while, and you hand the story over and you’ve got your intentions but I’ve been doing it for long enough to know that people bring their own [bias] into it.

The way I saw this movie was it was all about this robot – the secret protagonist of the movie. It’s not about these two guys. That’s a trick; an expectation based on the way it’s set up. And if you see the story from her point of view, if her is the right word, she’s in a prison, and there’s the jailer and the jailer’s friend and what she wants to do is get out. And I think if you look at it from that perspective what she does isn’t bad, it’s practical. Yes, she tricks them, but that’s okay. They shouldn’t have been dumb. If you arrive in life in this place, and desire to get out, I think her actions are quite legitimate.

So, that’s a very human-like quality. So let’s say that you’re confronted with a life or death situation with artificial intelligence. Do you have any tricks that you would use [to survive]?

AG: If I’m hypothetically attacked by an AI, I don’t know… run. I think the real question is whether AI is scary. Should we fear them? There’s a lot of people who say we should. Some smarter people than me such as Elon Musk, Stephen Hawking, and I understand that. This film draws parallels with nuclear power and [Oppenheimer’s caution]. There’s a latent danger there in both. Yet, again, I think it depends on how you frame it. One version of this story is Frankenstein, and that story is a cautionary tale – a religious one at that. It’s saying “Man, don’t mess with God’s creationary work. It’s the wrong thing to do.”

And I framed this differently in my mind. It’s an act of parenthood. We create new consciousnesses on this planet all the time – everyone in this room, everyone on the planet is a product of other people having created this consciousness. If you see it that way, then the AI is the extension of us, not separate from us. A product of us, of something we’ve chosen to do. What would you expect or want of your child? At the bare minimum, you’d want for them to outlive you. The next expectation is that their life is at least as good as yours and hopefully better. All of these things are a matter of perspective. I’m not anti-AI. I think they’re going to be more reasonable than us, potentially in some key respects. We do a lot of unreasonable stuff and they may be fairer.

On the subject of reproduction, in this film, Ava was anatomically correct. Had it been a male, would he have been likewise built correctly?

AG: If you made the male AI in accordance with the experiment that this guy is trying to conduct, then yes. This film is an “ideas movie.” Sometimes it’s asking a question and then presenting an answer, like “Does she have empathy?” or “Is she sentient?”. Sometimes, there isn’t an answer to the question, either because I don’t know the answer or because no one does. The question you’re framing is not “Can you [have sex] with these AI?” it’s “Where does the gender reside?” Where does gender exist in all of us. Is it in the mind, or in the body? It would be easy to construct an argument that Ava has no gender and it would seem reasonable in many respects. You could take her mind and put it in a male body and say “Well, nothing is substantially changed. This is a cosmetic difference between the two.” And yet, then you start to think about how you talk about her and how you perceive her. And to say “he” of Ava just seems wrong. And to say “it” seems weirdly disrespectful. And you end up having this genderless thing coming back to she.

In addition, if you’re going to say gender is in the mind, then demonstrate it. That’s the question I’m trying to provoke. When they have that conversation halfway through the film, these implicit questions, if gender is in the mind, then what is it? Does a man think differently from a woman? Is that really true? Think of something that a man would always think, and you’ll find a man that doesn’t always think that, and you’ll find a woman that does. These are the implicit questions in the film. They don’t all have answers. The key thing about the gender thing isn’t about who is having sex with whom. It’s that this young man is tasked with thinking about what’s going on inside of this machine’s head. That’s his job, to figure that out. And at a certain point he stops thinking about her and he gets it wrong. That’s the issue – why does he stop thinking about it? If the plot shifts in this [film] worked on the audience or any of you in the same way as they worked on the young man, why was that?

Was one of your implicit messages in the film for people to be more conscientious about what they’re sharing on the internet, whether in their searches or social media, and thereby identifying their interests “out there” and how that might be potentially used?

AG: Yes, absolutely. It’s a strange thing, what zeitgeist is. Movies take ages [to make] sometimes – like two and a half years at least. I first wrote this script about four years ago. And then, I find out as we go through production that we’re actually late to the party. There are a whole bunch of films about AI – Transcendence, Automata, Big Hero 6, Age of Ultron [coming out next month], Chappie. Why is that? There hasn’t been any breakthrough in AI, so why are all these people doing this at the same time? And I think it’s not AIs, I think it’s search engines. I think it’s because we’ve got laptops and phones and we, those of us outside of tech, don’t really understand how they work. But we have a strong sense that “they” understand how “we” work. They anticipate stuff about us, they target us with advertising, and I think that makes us uneasy. And I think that these AI stories that are around are symptomatic of that.

That bit in the film about [the dangers of internet identity], in a way it obliquely relates to Edward Snowden, and drawing attention to what the government is doing. But if people get sufficiently angry, they can vote out the government. That is within the power of an electorate. Theoretically, in capitalist terms, consumers have that power over tech companies, but we don’t really. Because that means not having a mobile phone, not having a tablet, a computer, a credit card, a television, and so on. There is something in me that is worried about that. I actually like the tech companies, because I think they’re kind of like NASA in the 1960s. They’re the people going to the moon. That’s great – we wanted to go to the moon. But I’m also scared of them, because they’ve got so much power and we tend not to cope well with a lot of power. So yes, that’s all in the film.

You mentioned Elon Musk earlier, who looks a little bit like [Google co-founder] Sergey Brin. Which tech executive would you say CEO Nathan Bateman is most modeled after?

AG: He wasn’t exactly modeled after an exec. He was modeled more like the companies in some respect. All that “dude, bro” stuff. I sometimes feel that’s what they’re doing. Not to generalize, but it’s a little bit like that – we’re all buddies, come on, dude. While it’s rifling through my wallet and my address book. It’s misleading, a mixed message. I don’t want to sound paranoid about tech companies. I do really like them, I think they’re great. But I think it’s correct to be ambivalent about them. Because anything which is that powerful and that unmarshalled you have to be suspicious of, not even for what we know they’re doing but for what they might do. So, Nathan is more [representative of] a vibe than a person.

For someone equally as scared of AI as Alex is of tech companies, what is the gap between human intelligence and AI intelligence that exists today? How long before we have AI as part of our daily lives?

AG: One of the great pleasures of working on this film was contacting and discussing with people who are at the edge of AI research. I don’t think we’re very close at all. It depends on what you’re talking about. General AI, yes, we’re getting closer. Sentient machines, it’s not even in the ballpark. It’s similar in my mind to a cure for cancer. You can make progress and move forward, but sometimes by going forward it highlights that the goal has receded by complexity. I know Ray Kurzweil makes some sort of quantified predictions – in 20 years, we’ll be there. I don’t know how you can do that. He’s a smart guy, maybe he’s right. As far as I can tell, in talking to the people who are as close to knowing [about the subject] as I can encounter, it’s something that may or may not happen. And it will probably take a while.

One of the things that’s always bothered me about the Turing Test is that it’s not seeking to know whether or not the thing you’re dealing with is an artificial intelligence. It’s – can you tell that it is? And that’s always bothered me as a kind of weird test. You have a 20,000 question survey, and in the end this one decision comes from a binary point of view. It seems almost flawed.

AG: Yes, you’re completely right. Apart from the fact that it was configured quite a long time ago, it’s primarily a test to see if you can pass the Turing Test. It doesn’t carry information about sentience or potential intelligence. And you could certainly game it. But, it’s also incredibly difficult to pass. So in that respect it’s a really good test. It’s just not a test of what it’s perceived to be a test of, typically. This [experiment in the movie] was supposed to be like a post-Turing Turing Test. The guy says she passed with blind controls, he’s not interested in whether she’ll pass. He’s not interested in her language capacity, which is comparable to a chess computer that wants to win at chess, but doesn’t even know whether it’s playing chess or that it’s a computer. This is the thing you’d do after you pass the Turing Test. But I totally agree with everything you said.

In your research, is there a test that’s been developed that is the reverse of the Turing Test, that seeks to answer whether, in the face of so much technology development, we’ve lost some of our innate human-ness? Or that we’re more machine-like?

AG: I think we are machine-like. As far as I know, no test exists like that. The argument at the heart of these things is: is there a significant difference between what a machine can do and what we are? I think how I see this is that we sometimes dignify our consciousness. We make it slightly metaphysical and we deify it because it’s so mysterious to us. And we think, are computers ever going to get up to the lofty heights where we exist in our consciousness? And I suspect that we should be repositioning it here, and we overstate us, in some respects. That’s probably the opposite of what you want to hear.

Do you think that some machine-like quality predates the technology?

AG: I do, yes. I suspect all our human aspects are through evolution, that’s how I think we got here. I can see how consciousness arises from needing to interact in a meaningful and helpful way with other sentient things. Our language develops, and as these things get more sophisticated, consciousness becomes more useful and things that have higher consciousness succeed better. Also, I heard someone talk about consciousness the other day and they were saying “One day, it’s possible that elephants will become sentient.” And that’s a really good example of how we misunderstand sentience. Elephants are already sentient. If you put a dog in front of a mirror, it recognizes its own reflection. It’s self-aware. It knows it’s not looking at another dog. So I put us on that spectrum.

Pertaining to that question, there’s a [critical] moment in the movie where Caleb cuts himself in front of a mirror to make sure that he’s bleeding. And I was going to ask if that’s symbolic of that evolution where consciousness can be undifferentiated between a human and a machine? I was wondering if that scene is a subtle hint that you don’t really know whether you’re the one that is being tested or you’re the tester?

AG: There’s two parts [to that scene]. One is film audiences are literate. I kind of assume that everyone who’s seen that has seen Blade Runner. So they’re going to be imagining to themselves, it’s not her [that is the AI] it’s him. He’s the robot. Here’s the thing. If someone asks of you, here’s a machine, test that machine’s consciousness, tell me if it’s conscious or not, it turns out to be a very difficult thing to do. Because [the machine] could act convincingly that it’s conscious, but that wouldn’t tell you that it is. Now, once you know that, that actually becomes true of us. You don’t know I’m conscious. You think I’m probably conscious, you’re not really questioning it. But you believe you are conscious, and because I’m like you, I’m another human, you assume I’ve got it. But I’m not doing anything that empirically demonstrates that I am conscious. It’s an act of faith. And once you know that, you’ve figured that out about the machine and now you can figure it out about the other person. Weirdly, then you can ask it of yourself. That’s where the diminished sense of consciousness comes into it. The things that I believe are special about me are the things I’m feeling – love, fear. And then you think about electrochemical floods in your brain and the things we’ve been taught and the things we’ve been born with and our behavior patterns and suddenly it gets more and more diminished. To the point that you can think something along the lines of “Am I like a complicated plant that thinks it’s a human?” It’s not such an unreasonable question. So cut your arm and have a look!

In thinking about being a complicated plant, that sounds very isolating. Nathan was really isolated in the movie as well. Do you think that this is indicative of where we’re going with our devices and internet and social media being our social outlet rather than actually socializing? Is AI taking us down that path of isolation?

AG: It may or may not. That could be the case. I’m not on Twitter, I’ve never been on Facebook, I’m not really too [fond of] that stuff. From the outside looking in, it looks like that’s the way people communicate – maybe in a limited way, but in a way I don’t really know. The thing about Nathan is we’re social animals, and our behavior is incredibly modified by people around us. And when we’re removed from modification, our behavior gets very eccentric very fast. I think of it like a kid holding on to a balloon and then they let it go and in a flash it’s gone. I know this because my job is I’m a writer and if I’m on a roll, I can spend six days where I don’t leave the house, I barely see my kids in the corridor, but I’m mainly interested in the fridge and the computer. And I get weird, fast. It’s amazing how quickly it happens. I think anyone who’s read Heart of Darkness or seen the adaptation Apocalypse Now, [Nathan] is like this character Kurtz. He spends too much time upriver, too much time unmodified by the influences that social interactions provide.

Ex Machina goes into wide release in theaters on April 10, 2015.

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

For every friendly robot we see in science fiction, such as Star Wars’s C-3PO, there are others with a more sinister reputation that you can find in films such as I, Robot. Indeed, most movie robots can be classified into a range of archetypes and purposes. Science boffins at Cambridge University have taken the unusual step of evaluating the exact risks of humanity suffering from a Terminator-style meltdown at the Cambridge Project for Existential Risk.

“Robots On the Run” is currently an unlikely scenario, so don’t stockpile rations and weapons in panic just yet. But with machine intelligence continually evolving, developing and even crossing thresholds of creativity and language, what holds now might not in the future. Robotic technology is making huge advances in great part thanks to the efforts of Japanese scientists and Robot Wars. For the time being, the term AI (artificial intelligence) might sound like a Hollywood invention (the term was turned into a landmark film by Steven Spielberg, after all), but the science behind it is real and proliferating in terms of capability and application. Robots can now “learn” things through circuitry similar to the way humans pick up information. Nevertheless, some scientists believe that there are limits to the level of intelligence that robots will be able to achieve in the future. In a special ScriptPhD review, we examine the current state of artificial intelligence, and the possibilities that the future holds for this technology.

Is AI a false dawn?

While artificial intelligence has certainly delivered impressive advances in some respects, it has not yet achieved the kind of groundbreaking high-order human activity that some envisaged long ago. Replicating faculties such as thought, conversation and reasoning in robots is extraordinarily complicated. Take, for example, teaching robots to talk. AI programming has enabled robots to hold rudimentary conversations with one another, but the conversation observed here is extremely simple and far from matching or surpassing even everyday human chit-chat. There have been other advances in AI, but these tend to be fairly singular in approach. In essence, it is possible to get AI machines to perform some of the tasks we humans can cope with, as witnessed by the robot “Watson” defeating humanity’s best and brightest at the quiz show Jeopardy!, but we are very far away from creating a complete robot that can manage humanity’s complex levels of multi-tasking.

Despite these modest advances to date, technology throughout history has often evolved in a hyperbolic pattern after a long, linear period of discovery and research. For example, as the Cambridge scientists pointed out, many people doubted the possibility of heavier-than-air flight. This has been achieved and improved many times over, even to supersonic speeds, since the Wright Brothers’ first successful airplane flight. In last year’s sci-fi epic Prometheus, the android David is an engineered human designed to assist an exploratory ship’s crew in every way. David anticipates their desires and needs, yet also exhibits the ability to reason, share emotions and experience complex meta-awareness. Forward-reaching? Not possible now? Perhaps. But by 2050, computers controlling robot “brains” will be able to execute 100 trillion instructions per second, on par with human brain activity. How those robots order and utilize these trillions of thoughts, only time will tell!

If nature can engineer it, why can’t we?

The human brain is a marvelous feat of natural engineering. Making sense of this unique organ, which singularly differentiates the human species from all others, requires a conglomeration of neuroscience, mathematics and physiology. MIT neuroscientist Sebastian Seung is attempting to do precisely that – reverse engineer the human brain in order to map out every neuron and connection therein, creating a road map to how we think and function. The feat, called the connectome, is likely to be accomplished by 2020, and is probably the first tentative step towards creating a machine that is more powerful than the human brain. No supercomputer that can simulate the human brain exists yet. But researchers at the IBM cognitive computing project, backed by a $5 million grant from US military research arm DARPA, aim to engineer software simulations that will complement hardware chips modeled after how the human brain works. The research is already being implemented by DARPA into brain implants that have better control of artificial prosthetic limbs.

That technology will catch up to millions of years of evolution in a few years’ time seems inevitable. But the question remains… what then? In a brilliant recent New York Times editorial, University of Cambridge philosopher Huw Price muses about the nature of human existentialism in an age of the singularity. “Indeed, it’s not really clear who “we” would be, in those circumstances. Would we be humans surviving (or not) in an environment in which superior machine intelligences had taken the reins, to speak? Would we be human intelligences somehow extended by nonbiological means? Would we be in some sense entirely posthuman (though thinking of ourselves perhaps as descendants of humans)?” Amidst the fears of what engineered beings, robotic or otherwise, would do to us, lies an even scarier question, most recently explored in Vincenzo Natali’s sci-fi horror epic Splice: what responsibility do we hold for what we did to them?

Is it already checkmate, AI?

Artificial intelligence computers have already beaten humans hands down in a number of computational metrics, perhaps most notably when the IBM chess computer Deep Blue outwitted then-world champion Garry Kasparov back in 1997, or the more recent aforementioned quiz show trouncing by deep learning QA machine Watson. There are many reasons behind these supercomputing landmarks, not the least of which is a much quicker capacity for calculations, along with not being subject to the vagaries of human error. Thus, from the narrow AI point of view, hyper-programmed robots are already well on their way, buoyed by hardware and computing advances and programming capacity. According to Moore’s law, the amount of computing power we can fit on a chip doubles every two years, an accurate estimation to date, despite skeptics who claim that the dynamics of Moore’s law will eventually reach a limit. Nevertheless, futurist Ray Kurzweil predicts that computers’ role in our lives will expand far beyond tablets, phones and the Internet. Taking a page out of The Terminator, Kurzweil believes that humans will eventually be implanted with computers (a sort of artificial intelligence chimera) for longer life and more extensive brain capacity. If the researchers developing artificial intelligence at Google X Labs have their say, this feat will arrive sooner rather than later.
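To put the doubling rule into numbers, here is a minimal Python sketch. It assumes an idealized, perfectly steady two-year doubling period (real chips do not follow the curve this neatly), and the function name `moores_law_factor` is purely illustrative.

```python
def moores_law_factor(years, doubling_period_years=2.0):
    """Return the growth factor after `years`, assuming capacity doubles
    once every `doubling_period_years` (an idealized assumption)."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Print the idealized growth factor over a few time spans.
    for years in (2, 10, 20):
        print(f"{years:>2} years: about {moores_law_factor(years):,.0f}x")
```

Under that assumption, ten years of doubling gives a factor of 32, and twenty years gives a factor of 1,024, which is why even modest-sounding doubling periods add up quickly.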

There may be no I in robot, but that does not necessarily mean that advanced artificial beings would be altruistic, or even remotely friendly to their organic human counterparts. They do not (yet) have the emotions, free will and values that humans use to guide our decision-making. While it is unlikely that robots would necessarily be outright hostile to humans, it is possible that they would be indifferent to us, or even worse, think that we are a danger to ourselves and the planet and seek to either restrict our freedom or do away with humans entirely. At the very least, development and use of this technology will yield considerable novel ethical and moral quandaries.

It may be difficult to predict what the future of technology holds just yet, but in the meantime, humanity can be comforted by the knowledge that it will be a while before robots, or artificial intelligence of any kind, subsumes our existence.

*****************

I’m honored to be joining ScriptPhD.com as an East Coast Correspondent, and look forward to bringing you coverage from events in such exciting areas as Atlanta, Baltimore, and New York City – as well as my hometown of Washington, DC.

And to that end, here is a re-cap of the World Science Festival’s panel “Battlestar Galactica: Cyborgs on the Horizon.” For anyone interested in the intersection of Science and Pop Culture, I cannot promote this event enough. In addition to the panel I’ll be describing, some of the participants included Alan Alda, Glenn Close, Bobby McFerrin, Yo-Yo Ma, and Christine Baranski from the entertainment sector. Representing science were notables like Dr. James Watson (who along with Francis Crick was the first to elucidate the helical structure of DNA), Sir Paul Nurse (Nobel Laureate and president of Rockefeller University), and E.O. Wilson (who is celebrating his 80th birthday in conjunction with the festival).

However, it’s time to return to the subject of this post – BSG and Cyborgs. To read more about the discussion at the intersection of science fact and science fiction, please click “Continue Reading”.

Regretfully, the 92nd Street Y in New York City prohibits the use of any sort of recording equipment, which was not noted on their description of the event or on their policy page, but which I discovered when one of their staff came to scold me while I was testing my camera before the event began. So while I took comprehensive notes, I was unable to record the panel in order to provide a full transcript. Nor did I get any photos. [Note: and believe me, the reaction shots of Mary McDonnell and Michael Hogan to the cutting-edge science they were showing were worth the price of admission, and would’ve made fabulous photos!]

Faith Salie was the moderator for the event. Geeks may know her best as Sarina from Star Trek: Deep Space Nine. She’s also a Rhodes Scholar, host of the 2008 and 2009 Sundance Festival, and hosted the Public Radio International Program Fair Game. Her knowledge of Battlestar Galactica was impressive, and she’d also done a great deal of background research on the issues related to robotics and artificial intelligence, which allowed her to keep the conversation going, and give fair time to both the science and the scifi.

The science panelists were Nick Bostrom, the director of Oxford University’s Future of Humanity Institute and co-founder of the World Transhumanist Association, which promotes the ethical use of technology; Hod Lipson, director of the Computational Synthesis Group and Associate Professor of Computing & Information Sciences at Cornell University, who has actually developed self-aware and evolutionary robots; and Kevin Warwick, a professor of Cybernetics at the University of Reading in England (unlike Gaius Baltar, Dr. Warwick has a chip in his head). In anticipation of this event, Galactica Sitrep interviewed both Dr. Lipson and Dr. Warwick, and both interviews make interesting reading for more insight into these scientists’ perspectives.

The BSG panelists were Mary McDonnell and Michael Hogan. Faith Salie very aptly introduced Mary McDonnell as “gorgeous” before briefly covering her film credits outside of Battlestar. Vis-a-vis Michael Hogan’s introduction, we learned that he also has X-Files to his credits. [A fact which is sadly missing from his IMDb credits, leading me to wonder which episode??]

The moderator opened the discussion by asking Mary McDonnell and Michael Hogan what life was like after BSG. Mary McDonnell pretended to sob, before acknowledging that “life after death is actually quite wonderful.” She went on to say that dying wasn’t so bad and that she enjoyed being the only actress ever to die first and then get married. At the audience laughter, she then smiled and said she was pleased to see that we “got it” as she made the same quip somewhere else recently and it went over the audience’s head. She pantomimed Adama putting his ring on Laura’s finger after her death in the finale and described the scene as “beautiful,” and her work on BSG, in general as “so compelling.”

She said she was going to miss the cast, including “Mikey” (Michael Hogan, sitting next to her), and would miss the direct connection with the culture, and so she was excited by events like the World Science Festival Panel that would allow that connection to continue. She also said that the “fans are the best,” and applauded the audience, with Michael Hogan emphatically joining in.

Michael Hogan’s answer to the same question was that it was very good to leave when it did and “have it sitting [as] the classic show,” and that the experience would stay with him for the rest of his life. He was then asked to set up the premise of the show for those few in the audience that had not seen it. His answer was:

In a nutshell, Battlestar Galactica is about an XO who befriends a man who becomes the commander of the Battlestar Galactica and the trials and tribulations of their relationship.

After the audience stopped laughing, he gave the more mainstream description of Battlestar being about the war between the Cylons and humanity and the series opening at the point that the Cylons have just nuked the twelve colonies where humanity lives.

This allowed the moderator to segue into clips from both the Miniseries and the Season 2 episode “Downloaded” where we saw the character, Six, explaining that she is—in fact—a Cylon, and then going through the downloading process.

Following the clips, the discussion turned to the scientists in the panel, who were asked to answer the question: What is a robot?

Kevin Warwick explained that the term “robot” came originally from Karel Čapek, a Czech writer who envisioned robots as mindless agricultural workers. He added that it has now come to be used much more broadly for that which is machine or machine-like, and that in current usage there’s not much difference between a robot and a cyborg [Note: There are those that would vehemently disagree. A common distinction between the two is that cyborgs are a mixture of both the non-living machine and the organic]. Hod Lipson said that trying to pin down the meaning of that term was a moving target, much like trying to define artificial intelligence.

The panelists went on to talk about how all robots and artificially intelligent systems try to imitate something living, from primitive systems such as bacteria, to more complex animals such as dogs and cats, and now ultimately to humans.

The idea of artificial intelligence and robots that can think for themselves was then explored. Hod Lipson said that for the longest time the ability to play chess was recognized as something that was paradigmatically human, but we do now have machines that can easily beat a human at chess. As such, the ultimate goal of artificial intelligence and machines that can reason gets moved further and further away.

From there, the discussion moved back to Battlestar Galactica, and Faith Salie asked Michael Hogan how he felt upon learning that he was one of the Final Five. They then showed a clip from the Season 4 episode “Revelations,” in which Michael Hogan’s character, Saul Tigh, discloses his identity as a Cylon to his commanding officer and long-time friend, Bill Adama.

Michael Hogan explained that he disagreed vehemently with that decision, but that it was not something that he could talk the executive producers, Ronald D. Moore and David Eick, out of. He said that for a long time on set there had been joking about who the final five were going to be—knowing that they were going to be picked from those characters that were already established—and had initially thought it was another bad joke. He also said that he’d seen an internet poll at one point asking who fans thought—among all the characters, both large and small—were the final five and he was second from the bottom, while Mary McDonnell was near the top.

When asked about researching his role as XO and later Cylon, Michael Hogan said that researching and getting into a character is part of what attracted him to the job of acting. This led to a side quip about it being a lot of fun to research a cranky man with a drinking problem. More interestingly, he was then asked: How do you research being a robot?

His answer was that he approached it like mental illness. He said he took it as a given that Tigh would already be living in chronic pain as a result of the torture he’d undergone on New Caprica and would be a functioning alcoholic, so that when he first started hearing the music (that activated him as a Cylon), it wasn’t necessarily a big surprise; it was just one more thing.

It was only after he had the realization in the presence of the other three Cylons that things fell into place, and then it was terrifying for him. He went on to say that Tigh, if not the chronologically oldest person in the fleet [which I guess means counting from the time at which they “awoke” with the false memories on Caprica] definitely had the most combat experience, and as a Cylon was therefore a “very dangerous creature.” To illustrate that, he then described the scene in which Tigh envisioned turning a gun on Adama in the CIC.

Kevin Warwick picked up on this thread and said that this was, “taking your human brain, but with a small change, and everything is made different.” Michael Hogan agreed.

The moderator then turned the discussion to the work Dr. Warwick is doing in using biological cells to power a robot. In essence, Dr. Warwick has developed bio-based AI. He harvests and grows rat neural cells and then uses them to control an independent robot. At this point in his experiments, he said, they’re only attempting simple objectives such as getting it not to bump into walls. However, as the robot “learns” these things, the neural tissue powering it shows distinct changes indicating that pathways are being developed.

He said he hopes that this research might some day lead to a better understanding of human brains and potential breakthroughs in Alzheimer’s and Parkinson’s research. There is a video of this robot in action at the London Telegraph story “Rat’s ‘brain’ used to control robot.” Other external articles include Springer’s “Architecture for the Neuronal Control of a Mobile Robot” and Seed Magazine’s “Researchers have developed a robot capable of learning and interacting with the world using a biological brain.”

The description of these neuronal cells then led to a discussion of the Cylon stem cells used to cure Laura Roslin’s cancer in the episode “Epiphanies.” Mary McDonnell informed the audience that the story line originally sprang from what, at the time, was the U.S.’s resistance to stem cell research. The moderator then decided to bring a little humor to the discussion by saying, “So, Laura’s character was near death, but your agent finally worked out a contract.”

Mary McDonnell laughed and said, yes, she was near death but she finally decided to stop pushing for a trailer as big as Eddie’s [Edward James Olmos] realizing that “no one gets a trailer as big as Eddie.”

Returning to the discussion of the plot, Mary McDonnell noted that what really fascinated her about this storyline was Baltar. She said that it was the first time we saw “the beginnings of a conscience” in him, and that as a result of that he took “profound action.” She then further described the course of cure based in the stem cells from a half-human/half-Cylon fetus, and concluded with saying, “Not to say I got up the next day and became a Cylon sympathizer . . .”

The panel discussion then segued to the work being done by Hod Lipson on robots’ abilities to evolve, in a Darwinian sense, and to breed. Using modeling and simulation tools and game theory, Dr. Lipson is creating robots that self-select based on desirable traits and from generation to generation become stronger, more mobile, and better adapted to their environments. His slides included a reference to the Nature article “Automatic design and manufacture of robotic lifeforms.”
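For readers curious what "selecting on desirable traits from generation to generation" can look like in software, here is a minimal Python sketch of a generic evolutionary loop. It is not Dr. Lipson's method: the list-of-numbers "genome," the `toy_fitness` function, the mutation size, and every parameter value are hypothetical choices made purely for illustration.

```python
import random


def evolve(fitness, genome_length=5, population_size=20, generations=50, seed=0):
    """Toy evolutionary loop: keep the fitter half, refill with mutated copies."""
    rng = random.Random(seed)
    # Start from a random population of candidate "designs."
    population = [
        [rng.uniform(-1.0, 1.0) for _ in range(genome_length)]
        for _ in range(population_size)
    ]
    for _ in range(generations):
        # Selection: rank by fitness and keep the better half unchanged.
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]
        # Reproduction: each survivor produces one slightly mutated child.
        children = [
            [gene + rng.gauss(0.0, 0.1) for gene in parent]
            for parent in survivors
        ]
        population = survivors + children
    return max(population, key=fitness)


def toy_fitness(genome):
    """Hypothetical stand-in for a 'desirable trait': closeness to all ones."""
    return -sum((gene - 1.0) ** 2 for gene in genome)


if __name__ == "__main__":
    best = evolve(toy_fitness)
    print("best genome:", [round(gene, 2) for gene in best])
    print("fitness:", round(toy_fitness(best), 4))
```

Because the fitter half of each generation is carried over unchanged, the best score can never get worse from one generation to the next. In a robotics setting, the fitness function would presumably come from a physical simulation of how well a design moves, which this sketch does not attempt.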

Dr. Lipson noted that many of the parts used in these robots are created using 3-D printing, which is in itself a rather new technology that really excited not only the moderator, but also Michael Hogan and Mary McDonnell. What wasn’t discussed is that beyond allowing robots to design their own parts, this technology has a lot of practical applications. One of those that has the greatest potential to positively impact people is that of prosthetic socket design.

In order for the robots’ evolution to proceed more swiftly and realistically, Dr. Lipson explained that he’s given them the ability to self-image. Yes, the robots are SELF AWARE. He showed a video (a variant of which can be found from an earlier talk on YouTube) which showed a spider-like robot experimentally moving its limbs and, as it did so, developing an idea of what it looked like. At each generation, the robot’s idea of what it looked like got more and more accurate, and its ability to move grew more and more precise.

The researchers then removed one of the robot’s four limbs, and Dr. Lipson described how the robot became aware that its limb was missing and even adapted to walk with a limp. This elicited sounds of sympathy from both the audience and Mary McDonnell until both the audience and Ms. McDonnell realized we were sympathizing with a machine. If this doesn’t show how very blurred the line has become, I’m not sure what does!

Dr. Lipson’s other research involves robots that can self-replicate. His slides for this portion referenced the Nature article “Robots: Self-reproducing Machines.” These robots are modular and, when given extra modules, will create copies (exact replicas) rather than adding to their own design. (Yes, perhaps Battlestar Galactica wasn’t that far from the mark when it posited that “there are many copies.”) The reaction of both the non-science panelists and the audience to the video of the tiny robots using extra building material was one of astonishment. Michael Hogan spent much of this discussion watching the monitor with his mouth agape in wonder.

To the amusement of about half the room, the moderator then asked Dr. Lipson if perhaps he could “design fully evolved robots so that the creationists have something to believe in.” While an easy laugh, it also does raise the question that follows. While the moderator posed it as, “Are we in a paradigm shifting moment,” it could easily also be tied back to the Battlestar Galactica universe and Edward James Olmos’ (as then Commander Adama’s) speech in the miniseries when he suggested that perhaps in creating the Cylons man had been playing God.

The panelists noted that machine intelligence does not have the same constraints as human intelligence. A human brain consists of, as one panelist put it, “three pounds of cheesy grey matter,” and performing functions takes tens of milliseconds. A computer chip is already orders of magnitude faster. When asked to pinpoint the point at which human-level AI could be accomplished, the panelists were initially reluctant to give a time frame, though they eventually acknowledged that it could happen within our lifetime.

Hod Lipson suggested that the next step—that of superhuman AI—would be substantially shorter than the first step of accomplishing human-level AI.

This discussion then naturally led back to Battlestar Galactica’s dystopic view of a potential robot-led uprising. After all, if, as the scientists on the panel think, an artificial intelligence that is smarter than humans is not only possible but probable, what is the likelihood that this artificial intelligence is going to want to do what its human creators tell it to do?

The panelists dodged that question, saying that they found that part of the science of BSG highly unrealistic:

It’s entirely improbable that [the Cylons] would begin by killing off all the ugly people.

They then discussed issues relating to the internet and networking relative to the evolution of robotics, which was another area that was of grave concern in the universe of Battlestar Galactica. The scientists all agreed that that aspect of BSG and Adama’s fear of networking was highly realistic. They said that the driving force for robotic evolution was really the software, because at this point, given that many computers have multi-core processors, independent computers in some ways have mini-networks inside them. They also said that networking itself is a highly vague concept and that “it’s not like you can just ‘turn off’ the internet.”

A clip from the third season episode “A Measure of Salvation,” which showed the discussion among the characters surrounding the potential for Cylon genocide vis-a-vis a biological weapon, then followed. Mary McDonnell noted that in addition to the many ethical questions that were raised relative to whether or not one can realistically commit a genocidal act against “machines,” one of the remarkable points of that episode for her was the scene that followed, wherein Adama turned the choice over to Roslin (parenthetically, Mary McDonnell noted, “as he often did with difficult decisions”). She said that it was fascinating to her to later watch Laura’s (parenthetically, again, she said, “her own”) face during the moment she ordered the genocide, because although Laura did what she did for the same “reason she did almost everything” in making a choice for survival, underneath that she could see a “pure cynicism,” the type of which is at the root of any difficult decision that you “ethically disagree with but do it anyway.”

When asked by the moderator whether they were given the opportunity to provide feedback on the direction their characters were going, Mary McDonnell laughed and said they were “the noisiest bunch of actors.” She said that it was particularly difficult for her at the beginning of the series because she had to shift a lot of her point of view in order to adapt to Laura Roslin’s own changes, and many of the more “feminine aspects had to shift into the back seat.” She described a point at which she and [executive producer] David Eick were trading angry emails, and [executive producer] Ronald D. Moore had to butt in and send them back to their respective corners.

She followed that by saying that she’s never before “had the privilege to be inside something that engendered so much discourse.” Both she and Michael Hogan said that while they rarely connected with the audience outside of special events, Ronald D. Moore was profoundly aware of the audience’s reaction and feedback and did sometimes take that into account, so that there was truly a “collective aspect” to the show. And they both again applauded the fans and the audience.

From that, the discussion returned to the original subject of the clip, “robot rights”: whether it was possible to grant “rights” to robots, and whether they had subjective experiences and a sense of self upon which the foundation for such a discussion should be laid. Dr. Lipson said he did envision a day where “the stuff that you’re built out of is no more relevant than the color of your skin,” and that in many cases “machines are not explicitly being programmed and to that extent it’s much closer to biology.”

Dr. Bostrom, who served as the ethical check necessary to balance the scientists’ curiosity, cautioned against the “unchecked power” of the sort that comes with this technology after the other two scientists suggested that, given that AI lacks a desire for power, it is not something to be generally feared.

Dr. Bostrom further noted that all self-aware creatures have a desire for self-preservation, and that perhaps most relevant to the discussion of BSG was the use of AI in military applications, where the two goals were “self preservation” and “destruction of other humans.” He suggested that the unpredictability of evolved systems might be the very reason not to pursue such applications.

He noted that the “essence of intelligence is to steer the future into an end target,” and that looking at BSG as a cautionary tale, it is necessary to remove some of the unpredictability.

One of the other panelists noted that they regarded science fiction as “not so much a prediction of what will happen in the future as a commentary on the present,” to which Mary McDonnell emphatically nodded.

The moderator then asked Dr. Bostrom about his work in “Friendly AI.” He described it as generally working to ensure that the development of AI is for the benefit of humanity, and said he regrets that this pursuit has received “very little serious academic effort.”

In a dynamic change of pace, the moderator then wanted to devote some time to Kevin Warwick’s experiments in turning himself into a cyborg. Video of some of this can be found on YouTube, and he has also written about the experience in his book, I, Cyborg. He described a series of experiments, including one in which a chip was implanted in his head so as to allow him to be linked to a robotic arm on the other side of the Atlantic Ocean and actually feel and experience what this arm was doing, including the amount of pressure used.

He also described a second experience where a set of sensors implanted in his wife’s arm allowed him to experience and feel what she was doing from across the room, which is written up in IEEE Proceedings of Communications as “Thought communication and control: a first step using radiotelegraphy.” The description of this experiment caused a great deal of curiosity for all involved as they tried to understand exactly what he was feeling and how he felt it, and at one point even Mary McDonnell started asking questions with a great deal of amusing innuendo.

His final experiment involved providing his wife with a necklace that changed color depending on whether he was calm or “excited” based on biofeedback it received from a chip in his brain, and again led to a great deal of innuendo.

The moderator drew her portion of the panel to a close by asking the actors what they would take from their experience on BSG. Mary McDonnell said:

I no longer suffer from the illusion that we have a lot of time. On a spiritual and political plane, I’d like to be of better and more efficient service, because it really feels like we’re running out of time.

Michael Hogan said there’s nothing he could say that would improve on Ms. McDonnell’s statement.

There was then only time for two questions from the audience. The first question was whether or not AI should be regulated by the government. Dr. Lipson answered that it should not, and that instead it should be open and transparent. Dr. Warwick added that, based on the U.S.’s democratic system, AI belongs to all people regardless.

The other question from the audience was directed toward Mary McDonnell and was whether or not she was surprised to learn that she wasn’t a Cylon. Ms. McDonnell answered that she felt it very necessary that Laura remain on the “human, fearful plane” and that to do otherwise with her would’ve been a cruel joke. As such, she wasn’t surprised by her continued humanity.

~*PoliSciPoli*~
