From its inception, science fiction has blurred the line between reality and technological fantasy in a remarkably prescient manner. Many of the discoveries and gadgets that have integrated seamlessly into modern life were first imagined in science fiction. More recently, the technologies behind ultra-realistic visual and motion capture effects have also begun helping scientists as real-time research tools at a granular level. The dazzling visual effects in the time-jumping space film Interstellar required original code for a physics-based, ultra-realistic depiction of what it would be like to orbit around and through a black hole. Astrophysics researchers soon used the film’s code to visualize black hole surfaces and their effects on nearby objects. Virtual reality, whose initial development was largely rooted in bringing realism to the gaming and video industries, has advanced towards multi-purpose applications in film, technology and science. The Science Channel is augmenting traditional programming with a ‘virtual experience’ to simulate the challenges and scenarios of an astronaut’s journey into space; VR-equipped GoPro cameras are documenting remote research environments to foster scientific collaboration and share knowledge; VR is even being implemented in health care to improve training, diagnosis and treatment concepts. The ability to record high-definition film of landscapes and isolated areas with drones, which will have an enormous impact on cinematography, carries with it the capacity to aid scientists and health workers with disaster relief, wildlife conservation and remote geomapping.

Entertainment industry technology has grown sophisticated, computationally powerful and increasingly cross-functional. A cohort of interdisciplinary researchers at Northwestern University is adapting computing and screen resolution technology developed at DreamWorks Animation Studios as a vehicle for data visualization, innovation and faster, more efficient results. Their efforts, detailed below, and a collective trend towards integrating visual design into the interpretation of complex research, portend a collaborative future between science and entertainment.

Not long into his tenure as the lead visualization engineer at Northwestern University’s Center For Advanced Molecular Imaging (CAMI), Matt McCrory noticed a striking contrast between the quality of the aesthetic and computational toolkits used in scientific research versus the entertainment industry. “When you spend enough time in the research community, with people who are doing the research and the visualization of their own data, you start to see what an immense gap there is between what Hollywood produces (in terms of visualization) and what research produces.” McCrory, a former lighting technical director at DreamWorks Animation, where he developed technical tools for the visual effects in Shark Tale, Flushed Away and Kung Fu Panda, believes that combining expertise in cutting-edge visual design with emerging tools of molecular medicine, biochemistry and pharmacology can greatly speed up the process of discovery. Initially, it was science that offered the TV and film world the rudimentary seeds of technology that would fuel creative output. But the TV and film world ran with it — so much so, that the line between science and art is less distinguishable than in any other industry. “We’re getting to a point [on the screen] where we can’t discern anymore what’s real and what’s not,” McCrory notes. “That’s how good Hollywood [technology] is getting. At the same time, you see very little progress being made in scientific visualization.”

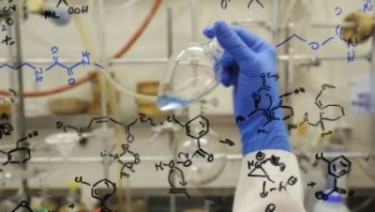

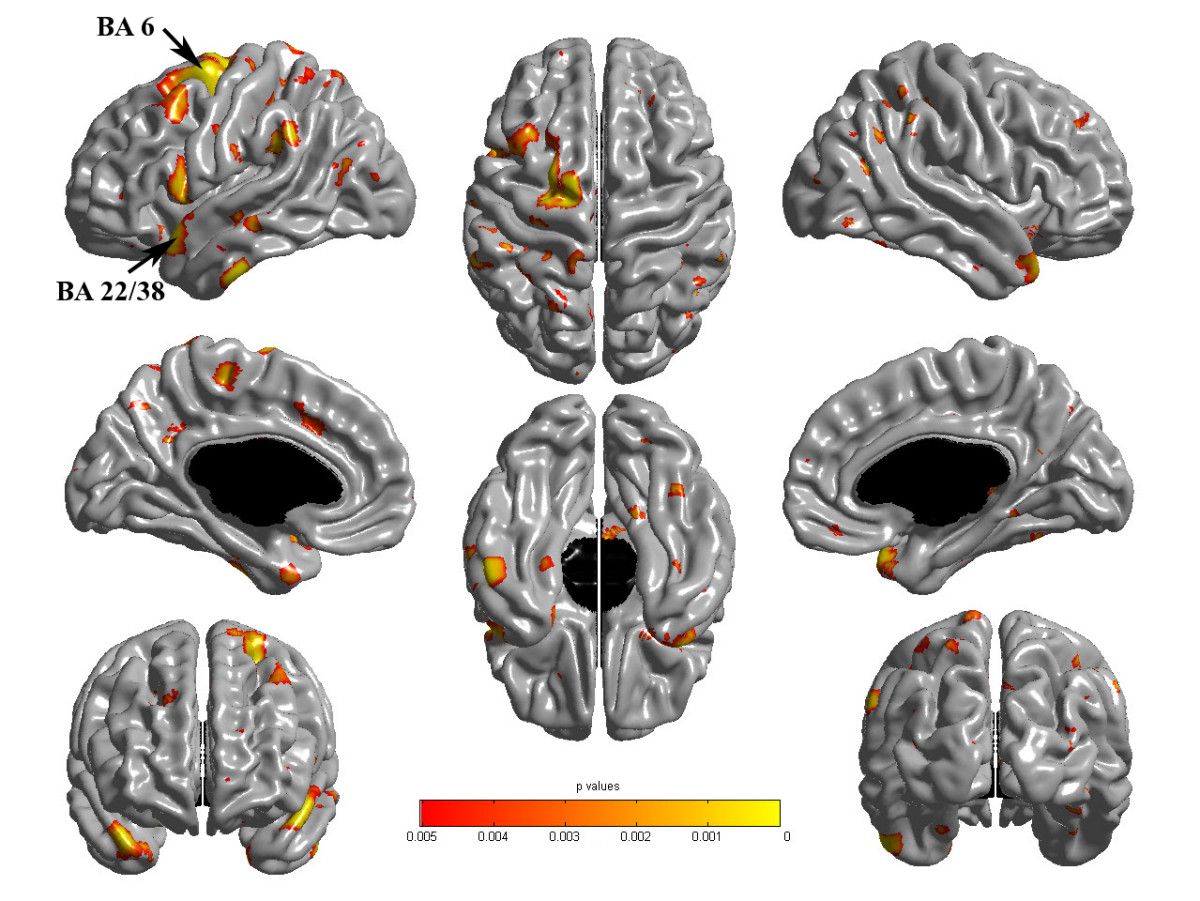

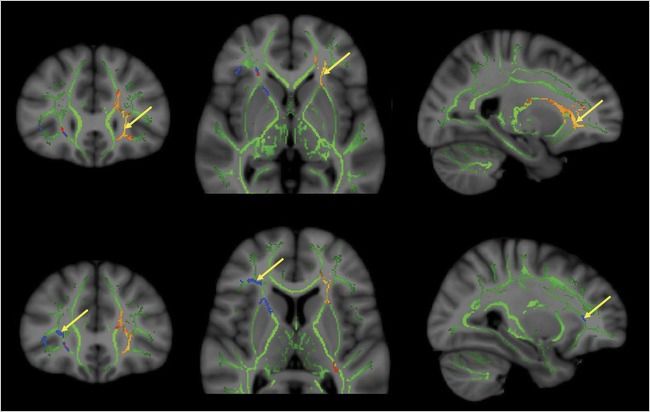

What is most perplexing about the stagnant computing power and visualization in science is that modern research across almost all fields is driven by extremely large, high-resolution data sets. A select handful of MRI scanners are now equipped with magnets ranging from 9.4 to 11.75 tesla, capable of providing cellular resolution on the scale of hundreds of microns (0.1 to 0.2 millimeters, versus 1 millimeter for 1.5 tesla hospital scanners) and of capturing cellular changes on the microsecond scale. The ultra high-resolution imaging provides researchers with insight into everything from cancer to neurodegenerative diseases. While most biomedical drug discovery today is engineered by robotics equipment, which screens enormous libraries of chemical compounds (from thousands to hundreds of thousands of samples) for activity potential around the clock in a “high-throughput” fashion, data must still be analyzed, optimized and implemented by researchers. Astronomical observations (from black holes to galaxies colliding to detailed high-power telescope observations in the billions of pixels) produce some of the largest data sets in all of science. Molecular biology and genetics, which in the genomics era have unveiled great potential for DNA-based sub-cellular therapeutics, have also produced petabytes of data that are a quandary for most researchers to store, let alone visualize.

Unfortunately, most scientists can’t allocate dual resources to both advancing their own research and finding the best technology with which to optimize it. As McCrory points out: “In a field like chemistry or biology, you don’t have people who are working day and night with the next greatest way of rendering photo-realistic images. They’re focused on something related to protein structures or whatever their research is.”

The entertainment industry, on the other hand, has a singular focus on developing and continuously perfecting these tools, as necessitated by the proliferation of divergent content sources, screen resolutions and powerful capture devices. As an industry insider, McCrory appreciates the competitive evolution, driven by an urgency that science doesn’t often have to grapple with. “They’ve had to solve some serious problems out there and they also have to deal with issues involving timelines, since it’s a profit-driven industry,” he notes. “So they have to come up with [computing] solutions that are purely about efficiency.” Disney’s 2014 animated science film Big Hero 6 was rendered with cutting-edge visualization tools, including a 55,000-core computer and custom proprietary lighting software called Hyperion. Indeed, render farms at Lucasfilm and Pixar consist of core data centers and state-of-the-art supercomputing resources that could pass for independent enterprise server banks.

At Northwestern’s CAMI, this aggregate toolkit is leveraged by scientists and visual engineers as an integrated collaborative research asset. In conjunction with a senior animation specialist and long-time video game developer, McCrory helped to construct an interactive 3D visualization wall consisting of 25 high-resolution screens that together display 52 million pixels. Compared to a standard computer display (at 1-2 million pixels), the wall allows researchers to visualize and manage entire data sets acquired with higher-quality instruments. Researchers can gain different perspectives on their data in native resolution, often standing in front of it in large groups, and analyze complex structures (such as proteins and DNA) in 3D. The interface facilitates real-time innovation and stunning clarity for complex multi-disciplinary experiments. Biochemists, for example, can partner with neuroscientists to visualize brain activity in a mouse as they perfect drug design for an Alzheimer’s enzyme inhibitor. Additionally, 7,000 in-house high-performance core servers (comparable to most studios) provide uninterrupted big data acquisition, storage and mining.
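The wall's pixel count can be sanity-checked with a little arithmetic. The sketch below assumes each of the 25 screens is a 1920×1080 panel; the article does not state the per-screen resolution, so that figure is an assumption chosen because it reproduces the quoted total of roughly 52 million pixels. The function names are illustrative only.

```python
# Back-of-the-envelope check of the visualization wall's pixel count.
# ASSUMPTION: each screen is a 1920x1080 (Full HD) panel; the article
# only gives the screen count (25) and the total (~52 million pixels).
SCREENS = 25
WIDTH_PX, HEIGHT_PX = 1920, 1080


def wall_pixels(screens=SCREENS, width=WIDTH_PX, height=HEIGHT_PX):
    """Total pixels across all screens of the wall."""
    return screens * width * height


def advantage_over(display_pixels, total=None):
    """How many times more pixels the wall has than a single display."""
    total = wall_pixels() if total is None else total
    return total / display_pixels


if __name__ == "__main__":
    total = wall_pixels()
    print(f"Wall total: {total:,} pixels")
    # The article cites 1-2 million pixels for a standard computer display.
    for desktop in (1_000_000, 2_000_000):
        ratio = advantage_over(desktop, total)
        print(f"vs a {desktop:,}-pixel display: {ratio:.1f}x")
```

Under that assumption the wall shows between roughly 26 and 52 times as many pixels as a single standard display, which is what lets a full high-resolution data set be viewed at native resolution.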

Could there be a day when partnerships between science and entertainment are commonplace? Virtual reality studios such as Wevr, producing cutting-edge content and wearable technology, could become a go-to virtual modeling destination for physicists and structural chemists. Programs like RenderMan, a photo-realistic 3D software developed by Pixar Animation Studios for image synthesis, could enable greater clarity on biological processes and therapeutic targets. Leading global animation studios could be a source of both render farm technology and talent for science centers to increase proficiency in data analysis. One day, as its visualization capacity grows, NUViz/CAMI could even become a mini-studio within Chicago for aspiring filmmakers, posits McCrory, who is now pushing pixels at animation studio Rainmaker Entertainment.

The entertainment industry has always been at the forefront of inspiring us to “dream big” about what is scientifically possible. But now, it can play an active role in making these possibilities a reality.

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

The current scientific landscape can best be thought of as a transitional one. With the proliferation of scientific innovation and the role that technology plays in our lives, along with the demand for more of these breakthroughs, comes the simultaneous challenge of balancing affordable lab space, funding and opportunity for young investigators and inventors to shape their companies and test novel projects. Los Angeles science incubator Lab Launch is trying to simplify the process through a revolutionary, not-for-profit approach that serves as a proof of concept for an eventual interconnected network of “discovery hubs”. Founder Llewellyn Cox sits down with ScriptPhD for an insightful podcast that assesses the current scientific climate, the backdrop that catalyzed Lab Launch, and why alternatives to traditional avenues of research are critical for fueling the 21st Century economy.

As science and biotechnology innovation go, we are, to put it in Dickensian terms, in the best of times and the worst of times.

On the one hand, we are in the midst of a pioneering golden age of discovery, biomedical cures and technological evolution. It seems that every day brings limitless possibility and unbridled imagination. Recent development of CRISPR gene-editing machinery will facilitate specific genome splicing and wholesale epigenetic insight into disease and function. Immunotherapy, programming the body’s innate immune system and utilizing it to eradicate targeted tumors, represents the biggest progress in cancer research in decades. For the first time ever, physicists have detected and quantified gravitational waves, underscoring Einstein’s theory of gravity, relativity and how the space-time continuum expands and contracts. The private company SpaceX landed a rocket on a drone ship for the first time, enabling faster, cheaper launches and reusable rockets.

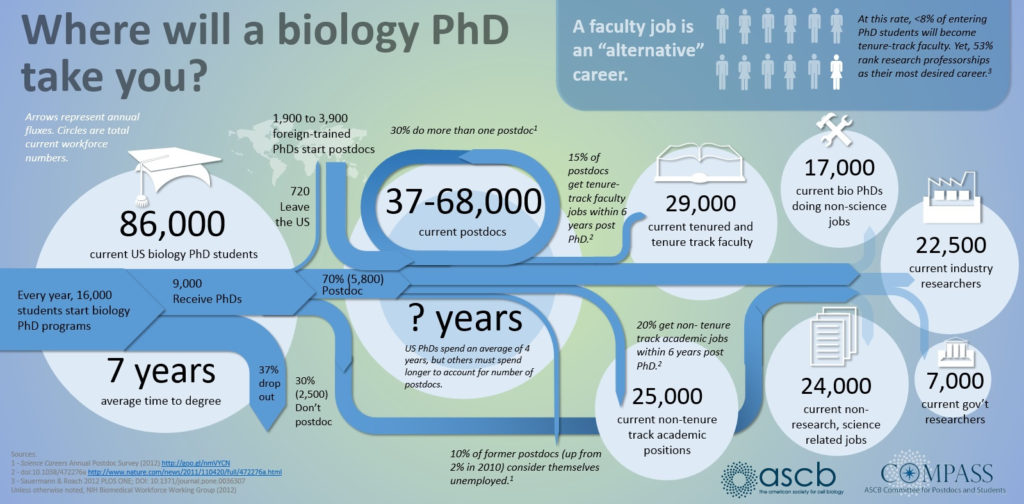

Despite these exciting and hopeful advancements, many of which have the potential to greatly benefit society and quality of life, there remain tangible challenges to fostering and preserving innovation. Academic science produces too many PhDs, which saturates the job market, stifles viable prospects for the most talented scientists and even hurts science in the long run. Exacerbating this problem is a shortage of basic research funding in the United States that represents the worst crisis in 50 years. And while European countries experience a similar pullback in grant availability, developing countries are investing in research as an avenue of future economic growth. High-risk, high-reward research, particularly from young investigators, is suppressed in favor of “safe research” and already-wealthy, established labs. Conduits towards entrepreneurship are possible, many through commercializing academic findings, but few come without strings attached, and start-up company formation has declined 48% since the 1970s. With research and development stagnating at most big pharmaceutical companies and current biomedical research growth unsustainable, there is an unprecedented opportunity to disrupt the innovation pipeline and create a more robust economy.

In an effort to boost discovery and development, venture capital accelerators and think-tank style early stage incubators have spread from the technology sector into basic science, where they are now booming. Affordable space, world-class facilities, access to startup capital and an opportunity to explore high-risk ideas are all attractive to young academics and scientific entrepreneurs. Even pharmaceutical giants are spawning innovation arms as potential sources of future ideas. Large cities like New York are even using incubator space as a catalyst for growing a localized biotechnology-fueled economy. Such opportunities, however, don’t come without risk and collateral to innovators. As Mike Jones of Science Inc. warns, the single biggest question that innovators must assess is: “Is the value I am getting equal to the risk I am saving, through equity?” Many incubators and accelerators act as direct conduits to academia and industry, both for talent recruitment and retention of intellectual material. In fact, the business model governing incubator space and asset allocation can often be nebulous, and sometimes further complicated by mandatory “collaborative” sharing not just of materials and space, but data and intellectual property. Even wealthy investors, who are now underwriting academic and private sector research, want a voice in the type of research and how it is conducted.

Amidst this idea-driven revolution was born the concept of Lab Launch, a transformative permutation of incubator space for fostering pharmaceutical and biotechnology innovation. The fundamental principle behind Los Angeles-based Lab Launch is deceptively simple. As a not-for-profit endeavor, it provides simple, sleek and high-level equipment and space for life science and biotechnology experimentation. Because all shared equipment is donated as overflow from companies and laboratories that no longer need it, costs are reduced to laboratory management fees and facility rental. As a stripped-down discovery engine model, this allows Lab Launch scientists to keep 100% of their intellectual property and equity, something that is virtually unheard of for young innovators at early-development stages. On a more complex level, the potential wide-scale benefits of Lab Launch (and future copycat spawns) are profound and resonant. In an industry where the Boston-San Francisco-San Diego triumvirate presents a near-hegemony for biotechnology funding, development and intellectual assets, the growth of simple, inexpensive science incubators in large cities carries tremendous economic upside. Critics might point out the lack of substantive guidance and elite think tank access of such a platform, yet 90% of all incubators and accelerators still fail, regardless. Moreover, selection criteria are often biased towards specific business interests or research aims that buoy academia and venture capital profiteers, which weeds out the most high-risk ideas and participants. How, for example, would a scientist without a PhD or prestigious pedigree get access to a mainstream incubator lab space? How would a radically non-traditional idea or approach merit mainstream support or funding?

A recent Harvard Business Review article suggests that lean start-ups with the most efficient, bare-bones development models have far higher success rates and should be the template for driving an innovation-based economy. As elucidated in the podcast below, opening doors to facilitate proof-of-concept innovation and linking a virtual network of lab spaces will give rise to not just the next Silicon Valley, but the great scientific breakthroughs of tomorrow.

Lab Launch founder Dr. Llewellyn Cox sat down with ScriptPhD for a podcast interview to talk about his revolutionary not-for-profit startup incubator and the challenging scientific environment that inspired the idea. Among our topics of discussion:

• How lack of funding and overflow of PhDs in the current scientific climate stifles creativity and innovation

• Why biotechnology will cultivate exciting new industries in the 21st Century

• How no-strings-attached incubators like Lab Launch help give rise to Silicon Valleys of the future

• Why we should in fact be hopeful about how scientific progress is advancing

*****************

The last 25 years have brought an unprecedented level of scientific and technological advances, impacting virtually all dimensions of society, from communication and the digital revolution, to economics and food production to nanotechnology and medicine – and that’s just a start. The next few decades will rapidly expand this progress with exponential discovery and innovation, amidst more pressing global challenges than we’ve ever faced before. The opportunities to develop faster, better and cheaper products that improve modern living are limitless – Tesla electric cars, energy-saving fuels and machines, robotics – but they all share a common basic need for developing and studying materials in a more efficient manner. This will require a real-time acceleration of sharing, analytics and simulation through readily accessible databases. Essentially, an open-source wiki for materials scientists. In our in-depth article below, ScriptPhD.com explains why materials science is the most critical gateway towards 21st Century technology and how California startup company Citrine Informatics is providing revolutionary new information extraction software to create a crowdsourced, open access database available to any scientist.

There has been a public access revolution of sorts transforming science. A necessary one, at that. Science funding is in crisis. The peer-review process is under heavy scrutiny. And scientists are turning to transparency to help. Some notable billionaires are circumventing public funding and privatizing science. Molecular biologist Ethan Perlstein has used crowdfunding to raise hundreds of thousands of dollars from the public at large for a basic research lab. Last fall, as the Ebola crisis gripped the world’s attention, an expert researcher at The Scripps Research Institute (where I received a PhD) appealed to public crowdfunding to raise $100,000 for vaccine research. With platforms like Experiment, RocketHub and Kickstarter proliferating support for science, the public at large has begun to serve as an important incubator of innovation. More importantly, researchers are increasingly rethinking traditional academic publishing and recognizing the value of open data sharing through publications and databases. The Public Library of Science (PLoS) is the biggest non-profit advocate and publisher of open access research. Mainstream journals like Science have begun publishing free web-based alternatives that are immediately accessible. Calls for unified data sharing have grown louder and more widespread. Even the FDA has announced plans for crowdsourcing a genomics research platform to improve efficiency of diagnostic and clinical tests and their analysis. Far from hindering the scientific process and output, these efforts have accelerated alternative means of funding, data acquisition and exchange, collaboration and ultimately, discovery.

Think about virtually any practical aspect of life and it relies on materials. Transportation of any kind, Kevlar vests, sports equipment, communication devices, clothing, growing and making food… it’s impossible to think about modern society in even the most impoverished developing countries without them. Now think on a bigger scale. Superconductors. Carbon nanotubes. Graphene. The materials of the future that make even these breakthroughs obsolete. So crucial is materials science to all facets of economics, research and quality of life, that in 2011, the United States Government launched a Materials Genome Initiative. A partnership between private industry, universities, and the government, its primary goal is to utilize a materials genome approach to cut the cost and time to market for basic materials products by 50%.

The only problem with materials research? Data. And lots of it. Aided largely by digital services and a proliferation of technology research (not to mention a marketplace for the products that it makes possible), there is so much data produced today that the process of testing, developing, tweaking and inserting a material into a product takes about 20 years, according to the National Academy of Sciences. To combat this, materials scientists and engineers have increasingly embraced an open access philosophy similar to that of life scientists. Leading science publisher Elsevier recently launched an infrastructure called “Open Data” to facilitate materials science data sharing across thirteen major publications. The Lawrence Berkeley National Laboratory just created the world’s largest database of elastic properties, a virtual gold mine for scientists working on materials that require mechanical properties for things like cars and airplanes. NASA has even opened a Physical Science Informatics database of all of its space station materials research in the hopes that crowdsourcing accelerates engineering research discovery, applicable both to space and Earth.

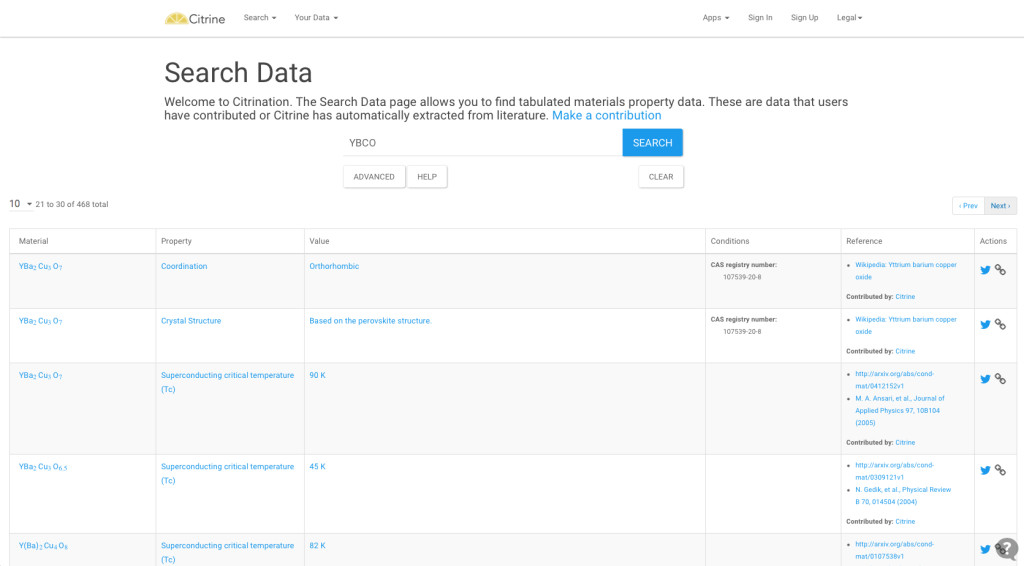

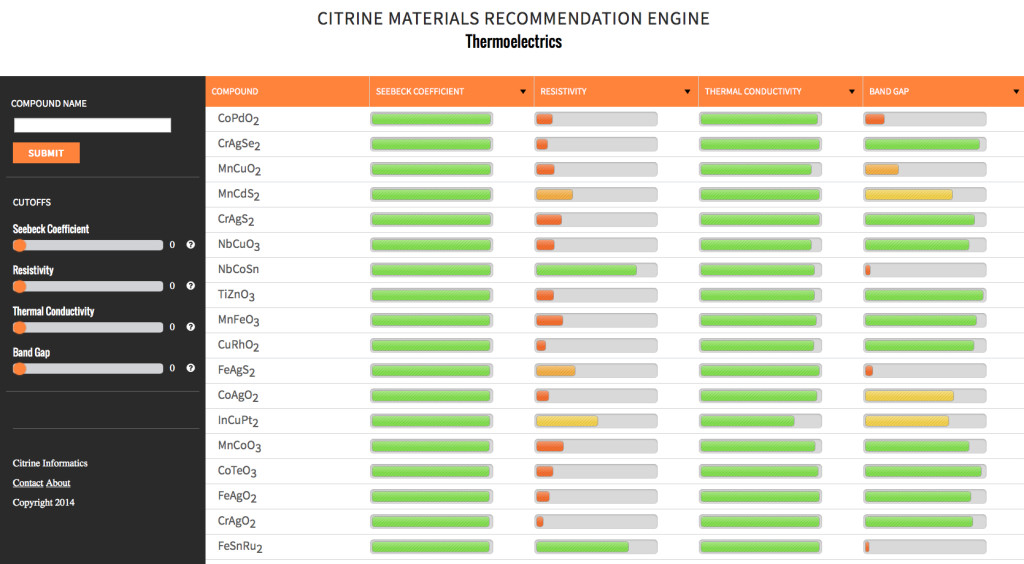

Citrine Informatics, a startup company in California, is hoping to unify these concepts of data sharing and mining through open access web-based software (boosted by crowdsourcing) to build a comprehensive, open database of materials and chemical data. Essentially, Citrine is rolling out a cloud platform (Citrination) that will act as a digital middle man between data acquisition and product development. Rather than leaving data stored in vastly differing locations under differing access guidelines, Citrination’s algorithms gather all available data (from publications to databases to publicly shared data from private companies) in order to show how materials’ properties behave under different conditions, including when they might fail. A simple, mainframe registry database allows users to upload data, build customized data sets and see all known properties of a particular material under all different physical conditions (see below). Rather than testing and retesting materials data internally, thus lengthening R&D pipelines, research labs can simply model based on available information and adjust experiments accordingly.
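To make the upload-and-query idea concrete, here is a minimal sketch of such a registry in Python. It is not Citrination's actual data model or API, which the article does not describe; the `Measurement` and `MaterialsRegistry` names, their fields, and the sample values in the demo are all hypothetical placeholders for illustration.

```python
# Minimal, hypothetical sketch of a materials property registry.
# None of these names come from Citrination; they are assumptions
# used only to illustrate "upload data, then query by condition".
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    material: str         # e.g. "alloy-x" (placeholder name)
    prop: str             # property name, e.g. "yield_strength_mpa"
    value: float          # measured value
    temperature_k: float  # physical condition of the measurement
    source: str           # publication, database or company that shared it


class MaterialsRegistry:
    """Collects measurements from many sources, keyed by material."""

    def __init__(self):
        self._records = defaultdict(list)

    def upload(self, record):
        self._records[record.material].append(record)

    def query(self, material, prop=None, min_temp_k=None, max_temp_k=None):
        """Return matching records sorted by temperature."""
        hits = []
        for rec in self._records.get(material, []):
            if prop is not None and rec.prop != prop:
                continue
            if min_temp_k is not None and rec.temperature_k < min_temp_k:
                continue
            if max_temp_k is not None and rec.temperature_k > max_temp_k:
                continue
            hits.append(rec)
        return sorted(hits, key=lambda rec: rec.temperature_k)


if __name__ == "__main__":
    registry = MaterialsRegistry()
    # Placeholder values, not real measurements.
    registry.upload(Measurement("alloy-x", "yield_strength_mpa", 410.0, 300.0, "journal article"))
    registry.upload(Measurement("alloy-x", "yield_strength_mpa", 290.0, 600.0, "shared company data"))
    for rec in registry.query("alloy-x", prop="yield_strength_mpa"):
        print(rec.temperature_k, rec.value, rec.source)
```

Sorting results by condition is a deliberate choice here: a lab can read off how a property changes across conditions before deciding which experiments still need to be run.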

“What we do represents a big change in the status quo,” co-founder Bryce Meredig says. “A lot of the scientists in these industries don’t have a natural inclination to turn to software to solve these problems.” Until now. The software will be made free to not-for-profit research institutions and sold to materials and chemical companies that want to avail themselves of the wealth of data. Citrine has used a large recent infusion of grant funding to raise its ambition beyond a database and towards applications like 3D printing, renewable energy technology (co-founder Greg Mulholland was recognized by Forbes as a 30 under 30 leader in energy) and the next generation of devices.

The practical benefits to society as a whole of extending open access and crowdsourcing to materials science are tremendous. On an individual level, virtually every computer and mobile device we put in our hands, every gadget and machine we buy in the future will depend on improved materials. On a more wide-scale level, materials will be at the forefront of solving the most complex, important challenges to our planet and its inhabitants, none more important than the energy crisis. Food production, water purification and distribution, transportation, infrastructure all will rely on creating sustainable energy. More ambitious endeavors such as space travel, medical treatments and advanced research collaborations will be even more reliant on new and improved materials. The faster that scientists, researchers and engineers are able to mine data from previous experiments, replicate it and design smarter studies based on computerized algorithms of how those materials behave, the faster they can produce breakthroughs in the laboratory and into the marketplace. The stakes are high. The scientific rewards are infinite. The time to open and use a free-access materials database is now.

This article was sponsored by Citrine Informatics.

*****************

Engineering has an unfortunate image problem. With a seemingly endless array of socioeconomic, technological and large-scale problems to address, and with STEM fields set to comprise the most lucrative 21st Century careers, studying engineering should be a no-brainer. Unfortunately, attracting a wide array of students — or even appreciating engineers as cool — remains difficult, most noticeably among women. When Google Research found out that the #2 reason girls avoid studying STEM fields is perception and stereotypes on screen, they decided to work with Hollywood to change that. Recently, they partnered with the National Academy of Sciences and USC’s prestigious Viterbi School of Engineering to proactively seek out ideas for creating a television program that would showcase a female engineering hero to inspire a new generation of female engineers. The project, entitled “The Next MacGyver,” came to fruition last week in Los Angeles at a star-studded event. ScriptPhD.com was extremely fortunate to receive an invite and have the opportunity to interact with the leaders, scientists and Hollywood representatives that collaborated to make it all possible. Read our full comprehensive coverage below.

“We are in the most exciting era of engineering,” proclaims Yannis C. Yortsos, the Dean of USC’s Engineering School. “I look at engineering technology as leveraging phenomena for useful purposes.” These purposes have been recently unified as the 14 Grand Challenges of Engineering: everything from securing cyberspace to reverse engineering the brain to solving our environmental catastrophes to ensuring global access to food and water. These are monumental problems and they will require a full-scale workforce to solve. It’s no coincidence that STEM jobs are set to grow by 17% by 2024, more than any other sector. Recognizing this opportunity, the US Department of Education (in conjunction with the Science and Technology Council) launched a five-year strategic plan to prioritize STEM education and outreach in all communities.

Despite this golden age, where the possibilities for STEM innovation seem as vast as the challenges facing our world, there is a disconnect in maximizing a full array of talent for the next generation of engineers. There is a noticeable paucity of women and minority students studying STEM fields, with women earning just 18-20% of all STEM bachelor’s degrees, even though more students are earning STEM degrees than ever before. Madeline Di Nonno, CEO of the Geena Davis Institute on Gender in Media and a judge at the Next MacGyver competition, boils a lot of the disinterest down to a consistent lack of female STEM portrayal in television and film. “It’s a 15:1 ratio of male to female characters for just STEM alone. And most of the science related positions are in life sciences. So we’re not showing females in computer science or mathematics, which is where all the jobs are going to be.” Media portrayals of women (and by proxy minorities) in science remain shallow, biased and appearance-focused (as profiled in-depth by Scientific American). Why does this matter? There is a direct correlation between positive media portrayal and STEM career participation.

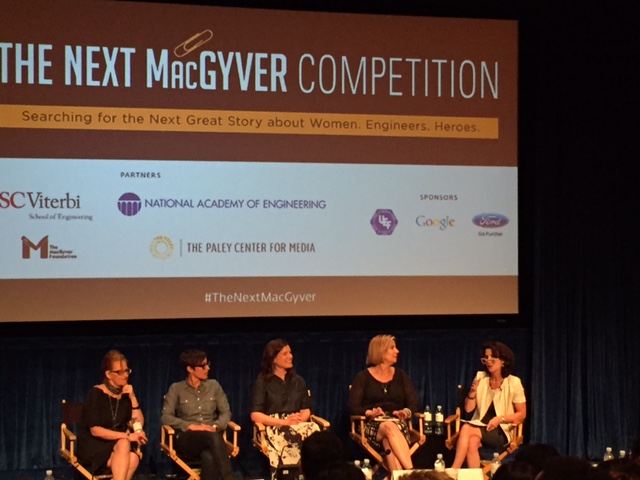

It has been 30 years since the debut of television’s MacGyver, an action adventure series about clever agent Angus MacGyver, working to right the wrongs of the world through innovation. Rather than using a conventional weapon, MacGyver thwarts enemies with his vast array of scientific knowledge, sometimes possessing no more than a paper clip, a box of matches and a roll of duct tape. Creator Lee Zlotoff notes that in those 30 years, the show has run continuously around the world, perhaps fueled in part by a love of MacGyver’s endless ingenuity. Zlotoff noted the uncanny parallels between MacGyver’s thought process and the scientific method: “You look at what you have and you figure out, how do I turn what I have into what I need?” Three decades later, in the spirit of the show, the USC Viterbi School of Engineering partnered with the National Academy of Sciences and the MacGyver Foundation to search for a new MacGyver, a television show centered around a female protagonist engineer who must solve problems, create new opportunities and most importantly, save the day. It was an initiative that started back in 2008 at the National Academy of Sciences, aiming to rebrand engineering entirely, away from geeks and techno-gadget builders and towards an emphasis on the much bigger impact that engineering technology has on the world: solving big, global societal problems. USC’s Yortsos says that this big picture resonates distinctly with female students who would otherwise be reluctant to choose engineering as a career. Out of thousands of submitted TV show ideas, twelve were chosen as finalists, and each finalist was given five minutes to pitch to a distinguished panel of judges composed of writers, producers, CEOs and successful show runners. Five winners will have an opportunity to pair with an established Hollywood mentor in writing a pilot and showcasing it for potential production for television.

If The Next MacGyver feels far-reaching in scope, it’s because it has aspirations that stretch beyond simply getting a clever TV show on air. No less than the White House lent its support to the initiative, with an encouraging video from Chief Technology Officer Megan Smith, reiterating the importance of STEM to the future of the 21st Century workforce. As Al Romig, the President of the National Academy of Engineering noted, the great 1950s and 1960s era of engineering growth was fueled by intense competition with the USSR. But we now need to be unified and driven by the 14 grand challenges of engineering and their offshoots. And part of that will include diversifying the engineering workforce and attracting new talent with fresh ideas. As I noted in a 2013 TEDx talk, television and film carry tremendous power and influence to fuel science passion. And the desire to marry engineering and television extends as far back as 1992, when Lockheed Martin’s Norm Augustine proposed a high-profile show named LA Engineer. Since then, he has remained a passionate advocate for elevating engineers to the highest ranks of decision-making, governance and celebrity status. Andrew Viterbi, namesake of USC’s engineering school, echoed this imperative to elevate engineering to “celebrity status” in a 2012 Forbes editorial. “To me, the stakes seem sufficiently high,” said Adam Smith, Senior Manager of Communications and Marketing at USC’s Viterbi School of Engineering. “If you believe that we have real challenges in this country, whether it is cybersecurity, the drought here in California, making cheaper, more efficient solar energy, whatever it may be, if you believe that we can get by with half the talent in this country, that is great. But I believe, and the School believes, that we need a full creative potential to be tackling these big problems.”

So how does Hollywood feel about this movement and the realistic goal of increasing its array of STEM content? “From Script To Screen,” a panel discussion featuring leaders in the entertainment industry, gave equal parts cautionary advice and hopeful encouragement for aspiring writers and producers. Ann Merchant, the director of the Los Angeles-based Science And Entertainment Exchange, an offshoot of the National Academy of Sciences that connects filmmakers and writers with scientific expertise for accuracy, sees the biggest obstacle facing television depiction of scientists and engineers as a connectivity problem. Writers know so few scientists and engineers that they incorporate stereotypes in their writing or eschew the content altogether. Ann Blanchard, of the Creative Artists Agency, somewhat concurred, noting that writers are often so right-brain focused that they naturally gravitate towards telling creative stories about creative people. But Danielle Feinberg, a computer engineer and lighting director for Oscar-winning Pixar animated films, sees these misconceptions about scientists and what they do as an illusion. When people find out that you can combine these careers with what you are naturally passionate about to solve real problems, it’s actually possible and exciting. Nevertheless, ABC Family’s Marci Cooperstein, who oversaw and developed the crime drama Stitchers, centered around engineers and neuroscientists, remains optimistic and encouraged about keeping the doors open and encouraging these types of stories, because the demand for new and exciting content is very real. Among 42 scripted networks alone, with many more independent channels, she feels we should celebrate the diversity of science and medical programming that already exists, and build from it. Put together a room of writers and engineers, and they will find a way to tell cool stories.

At the end of the day, Hollywood is in the business of entertaining, telling stories that reflect the contemporary zeitgeist and filling a demand for the subjects that people are most passionate about. The challenge isn’t wanting it, but finding and showcasing it. The panel’s universal advice was to ultimately tell exciting new stories centered around science characters that feel new, flawed and interesting. Be innovative and think about why people are going to care about this character and storyline enough to come back each week for more and incorporate a central engine that will drive the show over several seasons. “Story does trump science,” Merchant pointed out. “But science does drive story.”

The twelve pitches represented a diverse array of procedural, adventure and sci-fi plots, with writers representing an array of traditional screenwriting and scientific training. The five winners, as chosen by the judges and mentors, were as follows:

Miranda Sajdak — Riveting

Sajdak is an accomplished film and TV writer/producer and founder of screenwriting service company ScriptChix. She proposed a World War II-era adventure drama centered around engineer Junie Duncan, who joins the military engineer corps after her fiancé is tragically killed on the front line. Her ingenuity and help in tackling engineering and technology development ultimately help win the war.

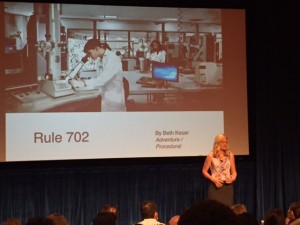

Beth Keser, PhD — Rule 702

Dr. Keser, unique among the winners for being the only pure scientist, is a global leader in the semiconductor industry and leads a technology initiative at San Diego’s Qualcomm. She proposed a crime procedural centered around Mimi, a brilliant scientist with dual PhDs, who forgoes corporate life to be a traveling expert witness for the most complex criminal cases in the world, each of which needs to be investigated and uncovered.

Jayde Lovell — SECs (Science And Engineering Clubs)

Jayde, a rising STEM communication star, launched the YouTube pop science network “Did Someone Say Science?” Her show proposal is a fun fish-out-of-water drama about 15-year-old Emily, a pretty, popular and privileged high school student. After accidentally burning down her high school gym, she avoids expulsion only by joining the dreaded, geeky SECs club, and in turn, helping them to win an engineering competition while learning to be cool.

Craig Motlong — Q Branch

Craig is a USC-trained MFA screenwriter and now a creative director at a Seattle advertising agency. His spy action thriller centered around mad scientist Skyler Towne, an engineer leading a corps of researchers at the fringes of the CIA’s “Q Branch,” where they develop and test the gadgets that will help agents stay three steps ahead of the biggest criminals in the world.

Shanee Edwards — Ada and the Machine

Shanee, an award-winning screenwriter, is the film reviewer at SheKnows.com and the host/producer of the web series She Blinded Me With Science. As a fan of traditional scientific figures, Shanee proposed a fictionalized series around real-life 1800s mathematician Ada Lovelace, famous for her work on Charles Babbage’s early mechanical general-purpose computer, the Analytical Engine. In this drama, Ada works with Babbage to help Scotland Yard fight opponents of the industrial revolution, exploring familiar themes of technology ethics relevant to our lives today.

Craig Motlong, one of five ultimate winners, and one of the few finalists with absolutely no science background, spent several months researching his concept with engineers and CIA experts to see how theoretical technology might be incorporated and utilized in a modern criminal lab. He told me he was equal parts grateful and overwhelmed. “It’s an amazing group of pitches, and seeing everyone pitch their ideas today made me fall in love with each one of them a little bit, so I know it’s gotta be hard to choose from them.”

Whether inspired by social change, pragmatic inquisitiveness or pure scientific ambition, this seminal event was ultimately both a cornerstone for strengthening a growing science/entertainment alliance and a deeply personal quest for all involved. “I don’t know if I was as wrapped up in these issues until I had kids,” said USC’s Smith. “I’ve got two little girls, and I tried thinking about what kind of shows [depicting female science protagonists] I should have them watch. There’s not a lot that I feel really good sharing with them, once you start scanning through the channels.” Motlong, the only male winner, is profoundly influenced by his experience of being surrounded by strong women, including a beloved USC screenwriting instructor. “My grandmother worked during the Depression and had to quit because her husband got a job. My mom had like a couple of options available to her in terms of career, my wife wanted to be a genetic engineer when she was little and can’t remember why she stopped,” he reflected. “So I feel like we are losing generations of talent here, and I’m on the side of the angels, I hope.” NAS’s Ann Merchant sees an overarching vision on an institutional level to help achieve the STEM goals set forth by this competition in influencing the next generation of scientists. “It’s why the National Academy of Sciences and Engineering as an institution has a program [The Science and Entertainment Exchange] based out of Los Angeles, because it is much more than this [single competition].”

Indeed, The Next MacGyver event, while glitzy and glamorous in a way befitting the entertainment industry, still seemed to have succeeded wildly beyond its sponsors’ collective expectations. It was ambitious, sweeping, the first of its kind and required the collaboration of many academic, industry and entertainment alliances. But it might have the power to influence and transform an entire pool of STEM participants, the way ER and CSI renewed interest in emergency medicine and in forensic science and justice, respectively. If not this group of pitched shows, then the next. If not this group of writers, then the ones who come after them. Searching for a new MacGyver transcends finding an engineering hero for a new age with new, complex problems. It’s about being a catalyst for meaningful academic change and creative inspiration. Or at the very least opening up Hollywood’s eyes and time slots. Zlotoff, whose MacGyver Foundation supported the event and continually seeks to promote innovation and peaceful change through education opportunities, recognized this in his powerful closing remarks. “The important thing about this competition is that we had this competition. The bell got rung. Women need to be a part of the solution to fixing the problems on this planet. [By recognizing that], we’ve succeeded. We’ve already won.”

The Next MacGyver event was held at the Paley Center For Media in Beverly Hills, CA on July 28, 2015. Follow all of the competition information on their site. Watch a full recap of the event on the Paley Center YouTube Channel.

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

“It’s like a war. You don’t know whether you’re going to win the war. You don’t know if you’re going to survive the war. You don’t know if the project is going to survive the war.” The war? Cancer, still one of the leading causes of death despite 40 years passing since the National Cancer Act of 1971 launched Richard Nixon’s famous “War on Cancer.” The speaker of the above quote? A scientist at Genentech, a San Francisco-based biotechnology and pharmaceutical company, describing efforts to pursue a then-promising miracle treatment for breast cancer facing numerous obstacles, not the least of which was the patients’ rapid illness. If it sounds like a made-for-Hollywood story, it is. But I Want So Much To Live is no ordinary documentary. It was commissioned as an in-house documentary by Genentech, a rarity in the staid, secretive scientific corporate world. The production values and storytelling offer a tremendous template for Hollywood filmmakers, as science and biomedical content become even more pervasive in film. Finally, the inspirational story behind Herceptin, one of the most successful cancer treatments of all time, offers a testament and rare insight to the dedication and emotion that makes science work. Full story and review under the “continue reading” cut.

For biotechnology and pharmaceutical companies, it is the best of times, it is the worst of times. On the one hand, many people consider this a Golden Era of pharmaceutical discovery and innovation for certain illnesses like cancer. Others, such as HIV, receive poor grades for drug and vaccine development. Furthermore, the FDA recently passed much more stringent controls on drugs brought to market, leaving some to posit that this will have a negative impact on future pharmaceutical breakthroughs. And while a recent documentary chronicles some of the unhealthy profits of the pharmaceutical industry, the enormous cost of developing and bringing medicines to market is often gravely overlooked. Today, the pharmaceutical industry as a whole has one of the lowest favorability scores of any major industry, despite some impressive social contributions, partnerships and global health investments. Much of this public hostility simply comes down to the fact that people don’t know very much about the pharmaceutical industry, which is notoriously reluctant to publicize or reveal anything about its inner workings.

Science in Hollywood is experiencing no such crisis. In many ways, it is a golden age for science, technology and medicine in film, with more big-budget mainstream films exploring themes and content germane to 21st Century science than ever before. Last year alone, three smart hit movies broached the realities, hopes and anxiety of the technological times we live in, each in a very different way. The stylish and ambitious thriller Limitless explored the possibility of a limitless brain capacity through pharmacopeia, a magical pill that would maximize one’s intelligence and allow 100% brain function around the clock. Certainly echoing the credo of the modern pharmaceutical movement (there is a pill that can solve every problem, whether it’s been invented or not), Limitless fell slightly short in condemning (or even properly acknowledging) the impracticalities and ethical irresponsibility of developing such a drug, especially in its ending. Steven Soderbergh’s surgical and pinpoint-accurate epic Contagion gave audiences a spine-chilling, terrorizing purview into the medical and public health realities of a modern-day pandemic. But while it strove, and succeeded, in showcasing how government agencies, university labs and medical establishments would contend with and fight off such a global disaster, Contagion was never able to connect audiences emotionally either with the characters impacted by the pandemic or with the scientists battling it. No recent movie is a better example of delicate introspection and exposition than the brilliant, poignant, funny and difficult 50/50. On the heels of CNN pondering whether Hollywood could take on cancer came a film that did so with reality, grace and even humor. Partially because it was based on screenwriter Will Reiser’s own brush with cancer, 50/50 set aside the clinical as a secondary backdrop to examine the psychological.

Each of the films above has an important quality that is an essential component to effective Hollywood science storytelling – scientific accuracy, emotional connection to the outside world and an overview of biomedical impact and innovation. We recently screened an industry documentary, filmed at the request of Genentech scientists, called I Want So Much To Live, that is an excellent blueprint for the way we’d like to see scientific stories portrayed in film. Best of all, it doesn’t sacrifice the human story for the technical one, nor the very real complex emotions that scientists, engineers and doctors feel when they develop and market potentially life-saving technology.

The miracle of Herceptin is really a decade-long journey that started in the labs of UCLA, moved to the pharmaceutical labs of San Francisco, endured countless obstacles, street riots and controversies to end up as one of the most revolutionary breakthroughs in breast cancer treatment research history. Advances in cancer insight always seem to come in evolutionary leaps. For example, the cellular mechanism of how normal cells become cancerous was unknown until Harold Varmus and Michael Bishop established the presence of retroviral oncogenes, genes that control cellular growth and replication. When either disrupted or turned on, these genes contribute to the transformation of normal cells into tumors. Other than the discovery of tamoxifen as an anti-estrogen treatment for breast cancers, relatively little new ground had been gained in fighting the disease. Scientists continued to be perplexed why some women were cured by chemotherapy, which tries to stop cancer cell division by attacking the most rapidly-dividing cells in the body, while others didn’t respond at all. It was not until the late 80s that scientists Axel Ullrich and Michael Shepard (both featured in the film) discovered that about 20-30% of early-stage breast cancers amplify a gene called HER-2, which encodes a protein embedded in the cell membrane that helps regulate cell growth and signaling. With the help of UCLA scientist Dennis Slamon, famously portrayed by Harry Connick, Jr. in a made-for-TV movie about the development of Herceptin, the scientists soon developed an anti-HER-2 antibody that significantly slowed tumor growth.

An early Phase I clinical trial was conducted simply to establish safety, with 20 volunteers. The lone survivor, still alive to this day, was given 10 weeks to live. Phase II trials homed in on dosage and establishing that the drug performed its intended effects. This time, out of 85 volunteers, 5 survived completely, not a bad result, but not enough for the FDA and the science community. The scientists took a huge risk for their Phase III study. They combined their anti-HER-2 antibody with current treatment. The results were astounding. Out of 450 patients, 50% survived, the highest ever success rate for metastatic cancer!

Think the story ends here? Think again. This is where it just begins to take more emotional twists and turns than a fictitious Hollywood script. Unlike many Hollywood productions, though, the human impact angle was shared equally between all the players in this evolving story, easily this documentary’s most powerful aspect. In order to test their Phase III trials of Herceptin (in concert with chemotherapy treatments available at that time), Genentech had to establish a highly controversial lottery system to pick those who would receive highly limited life-saving quantities of Herceptin, and those who would be categorized in the control studies, and thereby handed a death sentence. So controversial was the lottery system that it engendered televised protests in the Bay Area, along with anguished pleas from dying patients. The documentary’s title is the first sentence of one such letter: “I want so much to live.” The scientists at Genentech were hardly immune to the weight of each decision, either. They were tormented over the fairness of the lottery system, producing enough high-quality treatment to pass the clinical trial, and even in keeping an unbiased eye on the science to save lives in the long run. Talking about the pressure of those days reduced one of the scientists to tears. And after all was said and done, the lone FDA scientist entrusted with the power to oversee the Herceptin study and green light its approval as a drug? She had just lost her mother to breast cancer. These intertwining fortunes are summarized by executive producer Christine Castro: “By definition, groups of people are imperfect. But those who worked on Herceptin proved that the complexity – indeed, the fantastic mess – that simply comes with being human can sometimes result in something truly worthwhile.”

One of the first patients to get the experimental Herceptin treatment prior to FDA approval, though not profiled in the movie, is flourishing well over a decade after being diagnosed with the most aggressive form of breast cancer. Stories like hers lie at the emotional heart of the I Want So Much To Live story (and Genentech’s motivation for continuing the controversial studies).

Herceptin was officially approved as a drug on September 22, 2000. On October 20, 2010, Herceptin was approved as an adjuvant (joint) treatment with current chemotherapy drugs for the treatment of aggressive breast cancer. To date, the adjuvant therapy has had an impressive 58% success rate for a cancer that once carried an unlikely rate of survival for those afflicted.


The powerful and well-crafted content of this documentary should serve as a valuable template for how the multi-faceted power of storytelling can be used across multiple industries. It smartly tells a gripping scientific story without either dumbing down the science or elevating it beyond a layperson’s understanding, a certain goal for the increasing amount of cinematic fare such as Contagion. It provides a functional breakdown of the enormous challenges and technical obstacles of the pharmaceutical drug development process. Like many other aspects of science, it is mysterious to the general public, out of their grasp and seemingly always occurring behind closed doors. Especially at a time when public perception of the pharmaceutical industry is at an all-time low, such transparency could strengthen reputations and increase business. “Corporations are,” executive producer Christine Castro reminds us, “groups of people who have ideas, ambitions, conflicts and dreams, and, at the end of the day, a desire to see their work result in something meaningful. That’s why we decided to take a creative chance and face the potential skepticism that a corporation would or could tell an unvarnished story about itself.”

Finally, the film develops a three-dimensional emotional tether to the three different sides impacted by the scientific process: scientists, the agencies that regulate them and society as a whole. There doesn’t always have to be a tacit bad guy, and sometimes, this protagonistic complexity makes for the best story of all. Holder, who started filming I Want So Much To Live around the same time that her late brother was diagnosed with a rare and virulent form of cancer, echoed our sentiment as she reflected on the process of making the film. It allowed her to discover “that science is a creative pursuit as well as a technical one; that science is beautiful and can be accessible; and that anyone, at any time, might have the idea that could one day save lives.”

We can only hope that the harmony of creativity, passion and emotion devoted to all sides of the drug discovery process within this film translates to more private and studio productions dealing with complex scientific and socio-technological issues.

ScriptPhD.com caught up with filmmaker Elizabeth Holder, who directed and produced I Want So Much To Live. Here are some of her thoughts on putting together this incredible story and interacting with the scientists and heroic patients that made it happen:

ScriptPhD.com: Can you tell me where the seeds of inspiration for the story of the drug Herceptin first arose, and what inspired you to tackle this material for your documentary?

Elizabeth Holder: The initial idea to make a documentary film about Herceptin came from executive producer Chris Castro, who upon joining Genentech in 2007 thought that the story would make a compelling documentary film. (She will have to share with you her experience.) I first heard about the project from a friend and began doing research on Herceptin and Genentech. I was excited to work on this film; excited to jump into and explore a new world. My first inspiration came from the people who were the story; the passionate men and women who faced adversity with courage and perseverance, never swaying from their pursuit, making difficult decisions laced with moral and ethical ramifications. I knew this story of individual and collective growth would resonate with many, and would be especially poignant to the employees of Genentech. (This at the time was the intended audience for the film.) When I began working on this film in 2008 I had no idea how personal this journey would become and how connected I would be to the people I would meet and the story I was going to tell.

While I was making the film, my younger brother David was battling cancer – a rare type of cancer for a 33 year old man. While I was meeting with scientists and learning about biotech and drug development for the movie, David was fighting the disease with everything science and medicine could offer. He wrote a blog about his journey, signing off each entry with the words “Plow On”. Each day, I would hope that the scientists would hurry up. Figure it out. But I learned firsthand that science is not a “hurry up” business and that many people are doing everything they can to find ways to stop cancer. My wish is that the film serves to inspire everyone who is on the frontlines in the battle against cancer, to encourage them to keep on fighting the good fight, no matter what, and even on a bad day, to Plow On.

SPhD: How willing were the patients and scientists to contribute to the project?

EH: As you can imagine, everyone, especially scientists, are skeptical. Some people took a bit more convincing than others, but once they started talking, the interviews, both on and off camera, were amazing.

I am grateful to the patients, scientists, activists, executives, and doctors for honestly and enthusiastically sharing their stories, perspective, and experience with me. I quickly became indebted to mentors and colleagues who diligently and without judgment explained and re-explained molecular biology and the drug development process to me. I hope the determination and delight in which they approach their work is reflected in the film.

SPhD: Any of your own preconceived notions that were shattered or altered throughout the making of this film?

EH: I discovered striking similarities between scientists and filmmakers which I did not expect to find. A research scientist and a filmmaker must each imagine an idea, convince others to recognize the value of funding the idea, and then prove the concept. Like many filmmakers, the scientists I met were impassioned about their work and showed great determination in the face of extraordinary odds. Like filmmaking, drug development takes a village. Before making this film I had no idea how many years and how many people it took to develop a drug; the process involves a huge collaborative effort between massive numbers of people in multiple organizations, in various countries.

It was incredible and amazing to me that the scientists would talk about “cells” and “exons” and “nucleotides” as if they could actually be seen by the human eye. It was also inspiring to me that a scientist is committed enough to work on a research project for their whole career with the knowledge that they might not ever see an outcome in their lifetime. And finally, I was pleased to confirm (though not statistically proven) that a lot of really smart and accomplished people do not have perfectly clean desks.

SPhD: Within the movie, we get a real feel for the dichotomy between the emotional appeals of the desperately ill patients, the cautious, careful FDA scientists, and the Genentech researchers who want to make sure the product they introduce is safe for patients. Was this a thematic element you foresaw or that developed as you pieced the film together?

EH: I carefully planned out the film, yet also left room for new discoveries along the way. (I was constantly learning – from each filmed interview, from advisors, from books.) For each defining moment in the film I made sure to film at least three people talking about the same experience with different opinions. I wanted to make sure that the topic was covered from various perspectives so I could intercut interviews together. I knew that I was not going to use narration. I only wanted people who were part of the story to be telling the story; to engage the audience with their firsthand accounts. I wanted the audience to feel connected emotionally to each person in the film, to empathize with the person on screen even if they disagreed with their tactic and/or goal. Additionally, I knew I was going to use archival footage, photos and authentic documents to organically reveal the isolation and miscommunication, the unwitting partnerships, the building mistrust and the eventual coming together. When I first saw and read the pile of letters saved by Geoff, I knew that I would use it in the film. I carried a few of those letters with me to every interview and pulled them out when it felt right, asking people to read them and respond. The scene was assembled to show how incorrect assumptions lead to strife; to show how each person’s journey was critical to the whole story; and to show how those intertwining stories eventually became the framework for the work that is continuing today.

SPhD: What are your own thoughts on the lottery system that Genentech ultimately used to determine who would be eligible to participate in the Herceptin clinical trials?

EH: I see both sides of the issue, and don’t think there is an easy answer. When interviewing people for this film, I went into each interview with a clean slate, without having any pre-conceived agenda or opinion. It was critical that I empathized with each person and was able to tell the story through the objectives and needs of those who I interviewed, those who had direct experience. I needed to be able to fully see and feel the situation from their point of view. And, to me, judgment is only something that pulls us apart, not together. I am thankful I am in the documentary business and not in the business of making the kind of decisions that had to be made during that time. I am not sure what I would have done if someone I loved needed the drug before it was approved.

~*ScriptPhD*~

*****************



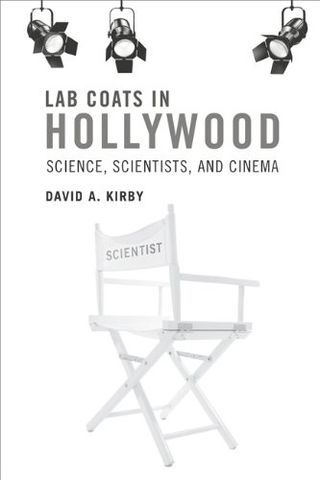

Read through any archive of science fiction movies, and you quickly realize that the merger of pop culture and science dates as far back as the dawn of cinema in the early 1920s. Even more surprising than the enduring prevalence of science in film is that the relationship between film directors, scribes and the science advisors that have influenced their works is equally as rich and timeless. Lab Coats in Hollywood: Science, Scientists, and Cinema (2011, MIT Press), one of the most in-depth books on the intersection of science and Hollywood to date, serves as the backdrop for recounting the history of science and technology in film, how it influenced real-world research and the scientists that contributed their ideas to improve the cinematic realism of science and scientists. For a full ScriptPhD.com review and in-depth extended discussion of science advising in the film industry, please click the “continue reading” cut.

Written by David A. Kirby, Lecturer in Science Communication Studies at the Centre for History of Science, Technology and Medicine at the University of Manchester, England, Lab Coats offers a surprising, detailed analysis of the symbiotic—if sometimes contentious—partnership between filmmakers and scientists. This includes the wide-ranging services science advisors can be asked to provide to members of a film’s production staff, how these ideas are subsequently incorporated into the film, and why the depiction of scientists in film carries such enormous real-world consequences. Thorough, detailed, and honest, Lab Coats in Hollywood is an exhaustive tome of the history of scientists’ impact on cinema and storytelling. It’s also an essential and realistic road map of the challenges that scientists, engineers and other technical advisors might face as they seriously pursue science advising to the film industry as a career.

The essential questions that Lab Coats in Hollywood addresses are these: is it worth it to hire a science advisor for a movie production? Is it worth it for the scientist to be an advisor? The book’s purposefully vague conclusion is that it depends solely on how the scientist can contribute to the film’s storyline and visual effects. Kirby wisely writes with an objective tone here because the topic is open to a considerable amount of debate among the scientists and filmmakers profiled in the book. Sometimes a scientist is so key to a film’s development, he or she becomes an indispensable part of the day-to-day production. A good example of this is Jack Horner, paleontologist at the Museum of the Rockies in Bozeman, MT, and technical science advisor to Steven Spielberg in Jurassic Park and both of its sequels. Horner, who drew from his own research on the link between dinosaurs and birds for a more realistic depiction of the film’s contentious science, helped filmmakers construct visuals, write dialogue, character reactions, animal behaviors, and map out entire scenes. J. Marvin Herndon, a geophysicist at the Transdyne Corporation, approached the director of the disaster film The Core when he learned the plot was going to be based on his controversial hypothesis about a giant uranium ball in the center of the Earth. Herndon’s ideas were fully incorporated into the film’s plot, while Herndon rode the wave of publicity from the film to publish his research in a PNAS paper. The gold standard of science input, however, was set by Stanley Kubrick’s multiple science and engineering advisors for 2001: A Space Odyssey, discussed in much further detail below.

Kirby hypothesizes that sometimes, a film’s poor reception might have been avoided with a science advisor. He provides the example of the Arnold Schwarzenegger futuristic sci-fi bomb The Sixth Day, which contained a ludicrously implausible use of human cloning in its main plot. While the film may have been destined for failure, Kirby posits that it could only have benefited from proper script vetting by a scientist. By contrast, the 1998 action adventure thriller Armageddon came under heavy expert criticism for its basic assertion that an asteroid “the size of Texas” could go undetected until eighteen days before impact. Director Michael Bay flatly refused to take the advice of his advisor, NASA researcher Ivan Bekey, and admitted he was sacrificing science for plot, but Armageddon went on to be a huge box office hit regardless. Quite often, the presence of a science advisor is helpful, albeit unnecessary. One of the book’s more amusing anecdotes is about Dustin Hoffman’s hyper-obsessive shadowing of a scientist for the making of the pandemic thriller Outbreak (a great guide to the movie’s science can be found here). Hoffman was preparing to play a virologist and wanted to infuse realism into all of his character’s reactions. Hoffman kept asking the scientist to document his reactions in mundane situations that we all encounter (a traffic jam, for example), only to come to the shocking conclusion that the scientist was a real person just like everyone else.

Most of the time, including scientists in the filmmaking process is at the discretion of the studios because of the one immutable decree reiterated throughout the book: the story is king. When a writer, producer or director hires a science consultant, the consultant’s expertise is utilized solely to facilitate, improve or augment story elements for the purposes of entertaining the audience. Because of this, one of the most difficult adjustments a science consultant may face is a secondary status on set, even though he or she may be a superstar in his or her own field. Some of the other less glamorous aspects of film consulting include heavy negotiations with unionized writers for script or storyline changes, long working hours, a delicate balance between side consulting work and a day job, and most importantly, an inconsistent (sometimes nonexistent) payment structure per project. I was particularly thrilled to see Kirby mention the pros and cons of programs such as the National Science Foundation’s Creative Science Studio (a collaboration with USC’s School of Cinematic Arts) and the National Academy of Sciences’ Science and Entertainment Exchange, which both provide on-demand scientific expertise to the Hollywood filmmaking community in the hope of increasing and promoting the realism of scientific portrayal in film. While valuable assets to science communication, both programs have had the unfortunate effect of acclimating Hollywood studios to expect high-level scientific consulting for free.

1968’s 2001: A Space Odyssey is widely considered to be the greatest sci-fi movie ever made, and certainly the most influential. As such, Kirby devotes an entire chapter to detailing the film’s production and integration of science. Director Stanley Kubrick took painstaking care with scientific accuracy to explore complex ideas about the relationship between humanity and technology, hiring a range of advisors, including anthropologists, aeronautical engineers, statisticians, and nuclear physicists, for various stages of production. Statistician I. J. Good provided advice on supercomputers, aerospace illustrator Harry Lange provided production design, and NASA space scientist Frederick Ordway lent over three years of his time to develop the space technology used in the film. In doing so, Kubrick’s staff consulted with over sixty-five different private companies, government agencies, university groups and research institutions. So real was the space technology in 2001 that moon landing hoax supporters have claimed the real moon landing by United States astronauts, which took place in 1969, was taped on the same sets. Not every science-based film since has used science input as meticulously or thoroughly, but Kubrick’s influence on the film industry’s fascination with science and technology has been an undeniable legacy.

One of the real treats of Lab Coats in Hollywood is the exploration of the two-way relationship between scientists and filmmakers, and how film in turn influences the course of science, as we discuss in more detail below. Between film case studies, critiques and interviews with past science advisors are interstitial vignettes of ways scientists have shaped films we know and love. Even the animated feature Finding Nemo had an oceanography advisor to get the marine biology correct. The seminal moment of the most recent Star Trek installment was due to a piece of offhand scientific advice from an astronomer. The cloning science of Jurassic Park, so thoroughly researched and pieced together by director Steven Spielberg and science advisor Jack Horner, was actually published in a top-notch journal days ahead of the movie’s premiere. Even the rare spots where the book drags a bit with highly technical analysis contain cinematic backstories with details that readers will salivate over. (For example, there’s a very good reason all the kelp went missing from Finding Nemo between its cinematic and DVD releases.)

As the director of a creative scientific consulting company based in Los Angeles, one of the biggest questions I get asked on a regular basis is “What does a science advisor do, exactly?” Lab Coats in Hollywood does an excellent job of recounting stories and case studies of high-profile scientist consultants, all of whom contributed their creative talents to their respective films in different ways; of explaining what might be expected (and not expected) of scientists on set; and of outlining the different areas of expertise that are currently in demand in Hollywood. Kirby breaks down cinematic fact checking, the most frequent task scientists are hired to perform, into three areas within textbook science (known, proven facts that cannot be disputed, such as gravity): public science, which we all know and would find ridiculous if filmmakers got it wrong; expert science, facts that are known to specialists and scientific experts but not to the lay audience; and (most problematic) folk science, incorrect science that has nevertheless been accepted as true by the public. Filmmakers are most likely to alter or modify facts that they perceive as expert science to minimize repercussions at the box office.

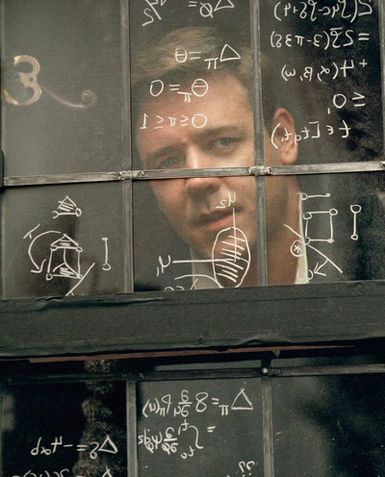

A science advisor is constantly navigating cinematic storytelling constraints and a filmmaker’s desire to utilize only the most visually appealing and interesting aspects of science (regardless of whether the context is always academically appropriate). Another broad area of high demand is in helping actors look and act like real scientists on screen. Scientists have been hired to do everything from doctoring dialogue to add realism to an actor’s portrayal (the movie Contact and Jodie Foster’s depiction of Dr. Ellie Arroway is a good example of this), to training actors in using equipment and pronouncing foreign-sounding jargon, replicating laboratory notebooks or chalkboard scribbles with the symbols and shorthand of science (such as in the mathematics film A Beautiful Mind), and recreating the physical space of an authentic laboratory. Finally, the scientist’s expertise in the known is used to help construct plausible scenarios and storylines for the speculative, an area that requires the greatest degree of flexibility and compromise from the science advisor. Uncertainty, unexplored research and “what if” scenarios, the bane of every scientist’s existence, happen to be Hollywood’s favorite scenarios, because they allow the greatest creative freedom in storytelling and speculative conceptualization without being negated by a proven scientific impossibility. An entire chapter, the book’s finest, is devoted to two case studies, Deep Impact and The Hulk, where real science concepts (near-Earth asteroid impacts and genetic engineering, respectively) were researched and integrated into the stories that unfolded in the films. (Side note: if you are ever planning on being a science advisor, read this section of the book very carefully.)

In years past, consulting in films didn’t necessarily bring acclaim to scientists within their own research communities; indeed, Lab Coats recounts many instances where scientists, among them popular media figures such as Carl Sagan and Paul Ehrlich, were viewed as betraying science or undermining its seriousness with Hollywood frivolity. Recently, however, consultants have come to be viewed as publicity investments both by studios that hire high-profile researchers for recognition value of their film’s science content and by institutes that benefit from branding and exposure. Science films from the last 10-15 years such as GATTACA, Outbreak, Armageddon, Contact, The Day After Tomorrow and a panoply of space-related flicks have attached big-name scientists as consultants (gene therapy pioneer French Anderson, epidemiologist David Morens, NASA director Ivan Bekey, SETI Institute astronomers Seth Shostak and Jill Tarter and climatologist Michael Molitor, respectively). They also happened to revolve around the research salient to our modern era: genetic bioengineering, global infectious diseases, near-Earth objects, global warming and (as always) exploring deep space. As such, a mutually beneficial marketing relationship has emerged between science advisors and studios that transcends the film itself, resulting in funding and visibility for individual scientists, their research, and even institutes and research centers. The National Severe Storms Laboratory (NSSL) promoted itself in two recent films, Twister and Dante’s Peak, using the films as a vehicle to promote its scientific work, to brand its researchers as heroes underfunded by the government, and to temper public expectations about storm predictions. No institute has had a deeper relationship with Hollywood than NASA, extending back to the Star Trek television series, with intricate involvement and prominent logo display in the films Apollo 13, Armageddon, Mission to Mars, and Space Cowboys.
Some critics have argued that this relationship played an integral role in helping NASA maintain a positive public profile after the devastating 1986 Challenger space shuttle disaster. The end result of the aforementioned promotion via cinematic integration can only benefit scientific innovation and public support.

Accurate and favorable portrayal of science content in modern cinema has an even bigger beneficiary than specific research institutes, and that is society itself. Fictional technology portrayed in film, termed a “diegetic prototype,” has often inspired or led directly to real-world application and development. Kirby offers the 1981 film Threshold as the most impactful case of diegetic prototyping. The film portrayed the first successful implantation of a permanent artificial heart, a medical marvel that became reality only a year later. Robert Jarvik, inventor of the Jarvik-7 artificial heart used in the transplant, was also a key medical advisor for Threshold, and felt that his participation in the film could both facilitate technological realism and, by doing so, help ease public fears about what was then considered a freak surgery, one that had even engendered a ban in Great Britain. Of the many obstacles that expensive, ambitious, large-scale research faces, Kirby argues that skepticism or lack of enthusiasm from the public can be the most difficult to overcome, precisely because it feeds directly into the essential political support that makes funding possible. A later example of film as an avenue for promotion of futuristic technology is Minority Report, set in the year 2054, and featuring realistic gestural interfacing technology and visual analytics software used to predict crime before it actually happens. Less than a decade later, technology and gadgets featured in the film have come to fruition in the form of multi-touch interfaces like the iPad and retina scanners, with others in development including insect robots (mimics of the film’s spider robots), facial recognition advertising billboards, crime prediction software and electronic paper. A much more recent example not featured in the book is the 2011 film Limitless, featuring a writer who is able to stimulate and access 100% of his brain at will by taking a nootropic drug.
While the fictitious drug portrayed in the film is not yet a neurochemical reality, brain enhancement is a rising field of biomedical research, and may one day indeed yield a brain-boosting pill.

No other scientific feat has been a bigger beneficiary of diegetic prototyping than space travel, starting with 1929’s prophetic masterpiece Frau im Mond [Woman in the Moon], sponsored by the German Rocket Society and advised masterfully by Hermann Oberth, a pioneering German rocket research scientist. The first film to present the basics of rocket travel, and credited with originating the now-standard countdown to zero before launch in real life, Frau im Mond also featured a prototype of the liquid-fuel rocket and inspired a generation of physicists to contribute to the eventual realization of space travel. Destination Moon, a 1950 American sci-fi film about a privately financed trip to the Moon, was the first film produced in the United States to deal realistically with the prospect of space travel by utilizing the technical and screenplay input of notable science fiction author Robert A. Heinlein. Released seven years before the start of the USSR Sputnik program, Destination Moon set off a wave of iconic space films and television shows such as When Worlds Collide, Red Planet Mars, Conquest of Space and Star Trek in the midst of the 1950s and 1960s Cold War “space race” between the United States and the Soviet Union. What theoretical scientific feat will propel the next diegetic prototype? A mission to Mars? Space colonization? Anti-aging research? Advanced stem cell research? Only time will tell.

Ultimately, readers will enjoy Lab Coats in Hollywood for its engaging writing style, detailed exploration of the history of science in film and most of all, valuable advice from fellow scientists who transitioned from the lab to consulting on a movie set. Whether you are a sci-fi film buff or a research scientist aspiring to be a Hollywood consultant, you will find some aspect of this book fascinating. Especially given the rapid proliferation of science and technology content in movies (even those outside of the traditional sci-fi genre), and the input from the scientific community that it will surely necessitate, knowing the benefits and pitfalls of this increasingly in-demand career choice is as important as its significance in ensuring accurate portrayal of scientists to the general public.

~*ScriptPhD*~

