History abounds with examples of unsung science heroes, researchers and visionaries whose tireless efforts led to enormous breakthroughs and advances, often without credit or lasting widespread esteem. This is particularly true for women and minorities, who have historically been under-represented in STEM-related fields. English mathematician Ada Lovelace is broadly considered the first great tech and computing visionary: in the mid-1800s, she wrote what is widely regarded as the first computer program, for Charles Babbage’s proposed Analytical Engine, a general-purpose computing machine. Physical chemist Dr. Rosalind Franklin performed essential X-ray crystallography work that ultimately revealed the double-helix shape of DNA (her Photograph 51 is one of the most important images in the history of science). Her work was shown, without her permission, to rival Cambridge duo Watson and Crick, who used the indispensable information to elucidate and publish the molecular structure of DNA, for which they would win a Nobel Prize. Dr. Percy Julian, a grandson of slaves and the first African-American chemist ever elected to the National Academy of Sciences, ingeniously pioneered the synthesis of hormones and other medicinal compounds from plants and soybeans. New movie Hidden Figures, based on the exhaustively researched book by Margot Lee Shetterly, tells the story of three such hitherto obscure heroes: Katherine Johnson, Dorothy Vaughan and Mary Jackson, standouts in a cohort of African-American mathematicians who helped NASA launch key missions during the tense 1960s Cold War “space race.” More importantly, Hidden Figures is a significant prototype for purpose-driven popular science communication: a narrative and vehicle for integrated multi-media platforms to encourage STEM diversity and scientific achievement.

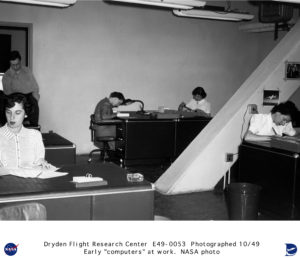

The participation of women in astrophysics, space exploration and aeronautics goes back to the 1800s at the Harvard College Observatory, as chronicled by Dava Sobel in The Glass Universe, a companion book to Hidden Figures. These women, every bit as intellectually capable and scientifically curious as their male counterparts, took the only opportunity afforded to them, as human “computers,” literally calculating, measuring and analyzing the classification of our universe. By the 1930s, the National Advisory Committee for Aeronautics (NACA), a precursor agency that would be subsumed into NASA upon its creation in 1958, had hired five female computers for its Langley aeronautical laboratory in Hampton, Virginia. During World War II, with the expansion of the female workforce and the war fueling innovation for better planes, NACA began hiring college-educated African-American female computers. They were segregated to the West Area of the Langley campus (hence the name “West Computers”), were required to use separate bathroom and dining facilities, and were paid less than their white counterparts for equal work. Many of these daily indignities are chronicled in Hidden Figures. By the 1960s, NASA’s space program was defined by the two biggest sociopolitical events of the era: the Cold War and the Civil Rights Movement. Embroiled in an anxious race with the Soviet Union to put a man in orbit (and eventually, on the Moon), NASA needed to recruit the brightest minds available to invent seemingly impossible math that would make the mission possible. Katherine Goble (later Johnson) was one of those minds.

Katherine Johnson (portrayed by Taraji P. Henson) was a math prodigy. A high school freshman by the time she was 10 years old, Johnson followed her fascination with numbers to a teaching position and, eventually, to a job as a human computer at NASA’s Langley facility. Hand-picked to assist the Space Task Group, whose director is portrayed in the movie as Al Harrison (Kevin Costner), a fictionalized amalgamation of three directors Johnson worked with during her time at NASA, she had to navigate institutionalized racism, sexism and antagonistic collaborators along the way. Johnson would go on to calculate trajectories that sent both Alan Shepard and John Glenn into space, as well as key data for the Apollo Moon landing. Supporting Johnson are her good friends and fellow NASA colleagues Dorothy Vaughan (Octavia Spencer) and Mary Jackson (Janelle Monáe). Vaughan herself was a NASA pioneer, becoming the first black computing section leader and an expert IBM FORTRAN programmer. Jackson became NASA’s first black female engineer, after getting special permission to take advanced math courses at a segregated, all-white school.

Katherine Johnson’s legacy in science, mathematics and civil rights cannot be overstated. Current NASA chief Charles Bolden paid thoughtful tribute to the iconic role model in Vanity Fair. “She is a human computer, indeed, but one with a quick wit, a quiet ambition, and a confidence in her talents that rose above her era and her surroundings,” he writes. The Langley NASA facility where she broke barriers and pioneered discovery honored Johnson by dedicating a building in her name last May. In late 2015, President Barack Obama awarded Johnson the Presidential Medal of Freedom.

Featured prominently in Hidden Figures, technology giant IBM has had a long-standing relationship with NASA ever since the IBM 7090 became the first computing mainframe used for flight simulations; later, the iconic System/360 mainframe helped engineer the Apollo Moon landing. Although NASA no longer relies on IBM mainframes for mathematical calculations, the two organizations are now partnering to apply artificial intelligence to space missions. IBM Watson has the capability to sift through thousands of pages of information to get pilots critical data in real time, and even to monitor and diagnose astronauts’ health as a virtual intelligent agent.

More importantly, IBM is taking a leadership role in developing STEM outreach education programs and maintaining a commitment to diversifying the technology workforce for the demands of the 21st century. Fifty years after Katherine Johnson’s monumental feats at NASA, the K-12 achievement gap between white and black students has barely budged. Furthermore, a 2015 STEM Index analysis shows that even as the number of STEM-related degrees and jobs proliferates, deeply entrenched gaps between men and women, and an even wider gap between whites and minorities, persist in obtaining STEM degrees. This is exacerbated in the STEM workforce, where diversity has largely stalled and women and minorities remain deeply under-represented. And yet, technology companies will need to fill 650,000 new STEM jobs (the fastest-growing sector) by 2018, with the highest overall demand for so-called “middle-skill” jobs that may require only technical or community college preparation. Launched in 2011 by IBM in collaboration with the New York City Department of Education, P-TECH is an ambitious six-year education model, aimed predominantly at minority students, that combines a four-year high school education with college courses, internships and mentoring. Armed with a combined high school diploma and associate degree, these students are ready to immediately fill high-tech, diverse workforce needs. Indeed, IBM’s original P-TECH school in Brooklyn has eclipsed national graduation rates for community college degrees over a two-year period, and the company has committed to widely expanding the program in the coming years. Technology companies that become stakeholders in, and even innovators of, educational models and partnerships can have a profound impact on innovation, economic growth and reducing poverty through opportunity.

Dovetailing with the release of Hidden Figures, IBM has also partnered with The New York Times to launch their first augmented reality experience. Combining advocacy, outreach and data mining, the free, downloadable app called “Outthink Hidden” pairs the inspirational stories portrayed in Hidden Figures with digitally interactive content to create a Pokémon Go-style nationwide hunt for STEM figures, historical leaders, places and areas of research across the country. The app can be used interactively at 150 locations in 10 U.S. cities, at STEM centers (such as NASA Langley Research Center and Kennedy Space Center) and at STEM universities, to learn not just about the three mathematicians featured in Hidden Figures, but also about other diverse STEM pioneers. Coupled with the powerful, wide-reaching impact of Hollywood storytelling and a complementary book release, “Outthink Hidden” could be an important prototype for engaging young, tech-savvy students, possibly even in organized classroom environments, and for promoting interest in STEM education, careers and mentorship opportunities.

There are no easy solutions for reforming STEM education or diversifying the talent pool in research labs and technology companies. But we can provide compelling narratives through movies, TV shows and, increasingly, digital content. Perhaps the first step to inspiring and cultivating the next Katherine Johnson is simply to start by telling more stories like hers.


*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

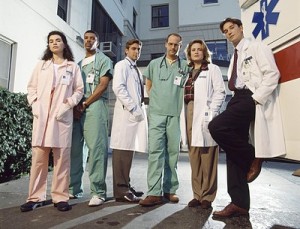

It has become standard practice for modern medical (or otherwise scientifically relevant) shows to rely on a team of advisors and experts for maximal technical accuracy and verisimilitude on screen. Many of these shows have become so culturally embedded that they’ve changed people’s perceptions and influenced policy. Even the Gates Foundation has partnered with popular television shows to weave important messages pertinent to public health, HIV prevention and infectious diseases into their storylines. But this was not always the case. When Neal Baer joined ER as a young writer and simultaneous medical student, he became the first technical expert to be integrated as an official part of a production team. His subsequent body of work has not only reshaped the integration of socially relevant issues into television content, but has also ushered in an age of public health awareness in Hollywood, and outreach beyond it. Dr. Baer sat down with ScriptPhD to discuss how lessons from ER have fueled his public health efforts as a professor and founder of UCLA’s Global Media Center for Social Impact, including storytelling through public health metrics and leveraging digital technology to propel action.

Neal Baer’s passion for social outreach and for lending a voice to vulnerable and disadvantaged populations was ingrained from a very young age. “My mother was a social activist from as long as I can remember. She worked for the ACLU for almost 45 years and she participated in and was arrested for the migrant workers’ grape boycott in the 60s. It had a true and deep impact on me that I saw her commitment to social justice. My father was a surgeon and was very committed to health care and healing. The two of them set my underlying drives and goals by their own example.” Indeed, his diverse interests and innate curiosity led Baer to study writing at Harvard and the American Film Institute and, eventually, medicine at Harvard Medical School. What might have been a professional dichotomy instead gave him the perfect niche, medical storytelling, which he parlayed into a critical role on the hit show ER.

During his seven-year run as medical advisor and writer on ER, Baer helped lead the show to indisputable influence and critical acclaim. Through important, germane storylines and health messages that educated and resonated with viewers, ER’s authenticity caught the attention of the health care community and inspired many television successors. “It had a really profound impact on me, that people learn from television, and we should be as accurate as possible,” Baer reflects. “[Viewers] believe it’s real, because we’re trying to make it look as real as possible. We’re responsible, I think. We can’t just hide behind the façade of: it’s just entertainment.” As showrunner of Law & Order: SVU, Baer spearheaded a storyline about rape kit backlogs in New York City that led to a real-life push to clear 17,000 backlogged kits and established a foundation that will help other major US cities do the same. With the help of the CDC and USC’s prestigious Norman Lear Center, Baer launched Hollywood, Health and Society, which has become an indispensable source of expert information for entertainment industry professionals looking to incorporate health storylines into their projects. In 2013, Baer co-founded the Global Media Center for Social Impact at UCLA’s School of Public Health, with the aim of addressing public health issues through a combination of storytelling and traditional scientific metrics.

Soda Politics

One of Baer’s signature accomplishments at the helm of the Global Media Center was convincing public health activist Marion Nestle to write the book Soda Politics: Taking on Big Soda (And Winning). Nestle has a long and storied career of food and social policy work, including the seminal book Food Politics. Baer first took note of the nutritional and health impact soda was having on children in his pediatrics practice. “I was just really intrigued by the story of soda, and the power that a product can have on billions of people, and make billions of dollars, where the product is something that one can easily live without,” he says. That story, as told in Soda Politics, is a powerful indictment of soda’s deleterious contribution to the United States’ obesity crisis, environmental damage and political exploitation of sugar producers, among others. More importantly, it’s a history of the dubious marketing strategies, insider lobbying power and subversive “goodwill” campaigns employed by Big Soda to broaden brand loyalty.

Even more than a public health cautionary tale, Soda Politics is a case study in the power of activism and persistent advocacy. According to a New York Times exposé, the drop in soda consumption represents the “single biggest change in the American diet in the last decade.” Nestle meticulously details the exhaustive, persistent and unyielding efforts that have collectively chipped away at Big Soda’s hegemony: highly successful soda taxes that have curbed consumption and obesity rates in Mexico; public health efforts to curb soda access in schools and to restrict advertising that specifically targets young children; and emotion-based messaging that has increased public awareness of soda’s deleterious effects and shifted preference toward healthier options, notably water. And as soda companies inevitably shift advertising and sales strategy towards , as well as underdeveloped nations that lack access to clean water, the lessons outlined in Soda Politics, which will soon be adapted into a documentary, can be implemented on a global scale.

ActionLab Initiative

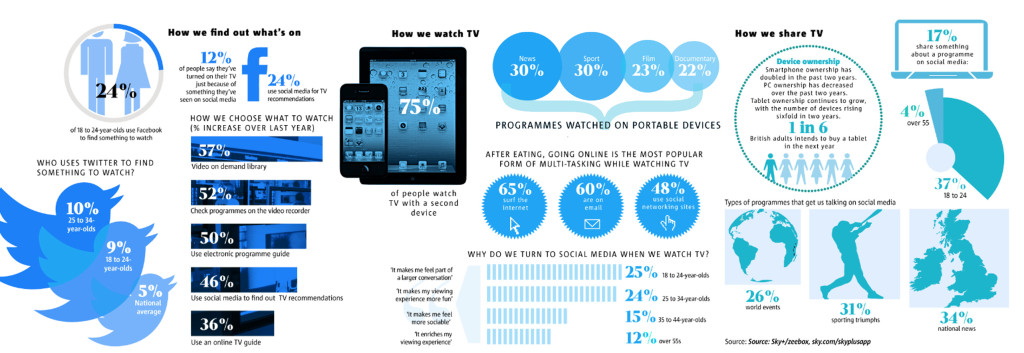

Few technological advancements have had as great an impact on television consumption and creation as the evolution of digital transmedia and social networking. The (fast-crumbling) traditional model of television was linear: content was produced and broadcast by a select conglomerate of powerful broadcast networks, and somewhat less powerful cable networks, for direct viewer consumption, measured by demographic ratings and advertising revenue. This model has been disrupted by web-based streaming services such as YouTube, Netflix, Hulu and Amazon, which, in conjunction with fragmented networks, will soon displace traditional TV watching altogether. At the same time, this shifting media landscape has given rise to a powerful new dynamic among the public: engagement. On-demand content has not only broadened access to high-quality storytelling platforms, but also provides more diverse opportunities to tackle socially relevant issues. This is buoyed by the increased role of social media as an entertainment vector. Social media raises awareness of TV programs (and influences Hollywood content), but it also fosters intimate, influential and symbiotic conversation alongside the very content it promotes. Enter ActionLab.

One of the central pillars of the Global Media Center at UCLA, ActionLab hopes to bridge the gap between popular media and social change on topics of critical importance. People will often find inspiration from watching a show, reading a book or even seeing an important public advertising campaign, and feel compelled to act. However, they often lack the resources to translate that desire for action into direct results. “We first designed ActionLab about five or six years ago, because I saw the power that the shows were having – people were inspired, but they just didn’t know what to do,” says Baer. “It’s like catching lightning in a bottle.” As a pilot program, the site will offer pragmatic, interactive steps that people can implement to change their lives, families and communities. ActionLab offers personalized campaigns centered around specific inspirational projects Baer has been involved in, such as the Soda Politics book, the If You Build It documentary and a collaboration with New York Times columnist Nicholas Kristof on his book and documentary A Path Appears. As the initiative expands, however, more entertainment and media content will be tailored toward specific issues, such as wellness, female empowerment in poor countries, eradicating poverty and community-building.

“We are story-driven animals. We collect our thoughts and our memories in story bites,” Baer notes. “We’re always going to be telling stories. We just have new tools with which to tell them and share them. And new tools where we can take the inspiration from them and ignite action.”

Baer joined ScriptPhD.com for an exclusive interview, where he discussed how his medical education and the wide-reaching impact of ER influenced his social activism, why he feels multi-media and cross-platform storytelling are critical for the future of entertainment, and his future endeavors in bridging creative platforms and social engagement.

ScriptPhD: Your path to entertainment is an unusual one – not too many Harvard Medical School graduates go on to produce and write for some of the most impactful television shows in entertainment history. Did you always have this dual interest in medicine and creative pursuits?

Neal Baer: I started out as a writer, and went to Harvard as a graduate student in sociology, [where] I started making documentary films because I wanted to make culture instead of studying it from the ivory tower. So, I got to take a documentary course, and it’s come full circle because my mentor Ed Pincus recently made his last film, “One Cut, One Life,” on which I was a producer, before he died of leukemia. That sent me to film school at the American Film Institute in Los Angeles as a directing fellow, which then sent me to write and direct an ABC after-school special called “Private Affairs” and to work on the show China Beach. I got cold feet [about the entertainment industry] and applied to medical school. I was always interested in medicine. My father was a surgeon, and I realized that a lot of the [television] work I was doing was medically oriented. So I went to Harvard Medical School thinking that I was going to become a pediatrician. Little did I know that my childhood friend John Wells, who had hired me on China Beach, would [also] hire me on “ER” by sending me the script, originally written by Michael Crichton in 1969, and dormant for 25 years until it was discovered in a trunk owned by Steven Spielberg. [Wells] asked me what I thought of the script and I said “It’s like my life only it’s outdated.” I gave him notes on how to update it, and I ultimately became one of the first doctor-writers on television with ER, which set that trend of having doctors on the show to bring verisimilitude.

SPhD: From the series launch in 1994 through 2000, you wrote 19 episodes and created the medical storylines for 150 episodes. This work ran parallel to your own medical education as a medical student, and subsequently an intern and resident. How did the two go hand in hand?

NB: I started writing on ER when I was still a fourth-year medical student going back and forth to finish up at Harvard Medical School, and my internship at Children’s Hospital Los Angeles over six years. And I was very passionate about bringing public health messages to the work that I was doing because I saw the impact that television can have on the audience, particularly the large numbers of people that were watching ER back then.

I was Noah Wyle’s character Dr. Carter. He was a third-year [medical student], I was a fourth-year. So I was a little bit ahead of him, and I was able to capture what it was like to be the low person on the totem pole and to reflect his struggles through many of the things my friends and I had gone through or were going through. Some of my favorite episodes we did were really drawn on things that actually happened. A friend of mine was sequestered away from the operating table while the surgeons were playing a game of world capitals. And she knew the capital of Zaire, when no one else did, so she was allowed to join the operating table [because of that]. So [we used] that same circumstance for Dr. Carter in an episode. Like you wouldn’t know those things, you had to live through them, and that was the freshness that ER brought. It wasn’t what you think doctors do or how they act but truly what goes on in real life, and a lot of that came from my experience.

SPhD: Do you feel like the storylines that you were creating for the show were germane both to things happening socially as well as reflective of the experience of a young doctor in that time period?

NB: Absolutely. We talked to opinion leaders, we had story nights with doctors, residents and nurses. I would talk to people like Donna Shalala, who was the head of the Department of Health and Human Services. I asked the then-head of the National Institutes of Health Harold Varmus, a Nobel Prize winner, “What topics should we do?” And he said “Teen alcohol abuse.” So that is when we had Emile Hirsch do a two-episode arc directly because of that advice. Donna Shalala suggested doing a story about elderly patients sharing prescriptions because they couldn’t afford them and the terrible outcomes that were happening. So we were really able to draw on opinion leaders and also what people were dealing with [at that time] in society: PPOs, all the new things that were happening with medical care in the country, and then on an individual basis, we were struggling with new genetics, new tests, we were the first show to have a lead character who was HIV-positive, and the triple cocktail therapy didn’t even come out until 1996. So we were able to be path-breaking in terms of digging into social issues that had medical relevance. We had seen that on other shows, but not to the extent that ER delved in.

SPhD: One of the legacies of a show like ER is how ahead of its time it was with many prescient storylines and issues that it tackled that are relevant to this very day. Are there any that you look back on that stand out to you in that regard as favorites?

NB: I really like the storyline we did with Julianna Margulies’s character [Nurse Carol Hathaway] when she opened up a clinic in the ER to deal with health issues that just weren’t being addressed, like cervical cancer in Southeast Asian populations and dealing with gaps in care that existed, particularly for poor people in this country, and they still do. Emergency rooms [treating people] is not the best way efficiently, economically or really humanely. It’s great to have good ERs, but that’s not where good preventative health care starts. The ethical dilemmas that we raised in an episode I wrote called “Who’s Happy Now?” involving George Clooney’s character [Dr. Doug Ross] treating a minor child who had advanced cystic fibrosis and wanted to be allowed to die. That issue has come up over and over again and there’s a very different view now about letting young people decide their own fate if they have the cognitive ability, as opposed to doing whatever their parents want done.

SPhD: You’ve had an appointment at UCLA since 2013 at the Fielding School of Public Health as one of the co-founders of the Global Media Center for Social Impact, with extremely lofty ambitions at the intersection of entertainment, social outreach, technology and public health. Tell me a bit about that partnership and how you decided on an academic appointment at this time in your life.

NB: Well, I’m still doing TV. I just finished a three-year stint running the CBS series Under the Dome, which was a small-scale parable about global climate change. While I was doing that, I took this adjunct professorship at UCLA because I felt that there’s a lot we don’t know about how people take stories, learn them, use them, and I wanted to understand that more. I wanted to have a base to do more work in this area of understanding how storytelling can promote public health, both domestically and globally. Our mission at the Global Media Center for Social Impact (GMI) is to draw on both traditional storytelling modes like film, documentaries, music, and innovative or ‘new’ or transmedia approaches like Facebook, Twitter, Instagram, graphic novels and even cell phones to promote and tell stories that engage and inspire people and that can make a difference in their lives.

SPhD: One of the first major initiatives is a very important book “Soda Politics” by the public health food expert Dr. Marion Nestle. You were actually partially responsible for convincing her to write this book. Why this topic and why is it so critical right now?

NB: I went to Marion Nestle because I was convinced after having read a number of studies, particularly those by Kelly Brownell (who is now Dean of Public Policy at Duke University), that sugar-sweetened sodas are the number one contributor to childhood obesity. Just [recently], in the New York Times, they chronicled a new study that showed that obesity is still on the rise. That entails horrible costs, both emotionally and physically for individuals across this country. It’s very expensive to treat Type II diabetes, and it has terrible consequences – retinal blindness, kidney failure and heart disease. So, I was very concerned about this issue, seeing large numbers of kids coming into Children’s Hospital with Type II diabetes when I was a resident, which we had never seen before. I told Marion Nestle about my concerns because I know she’s always been an advocate for reducing the intake and consumption of soda, so I got [her] research funds from the Robert Wood Johnson Foundation. What’s really interesting is the data on soda consumption really aren’t readily available and you have to pay for it. The USDA used to provide it, but advocates for the soda industry pushed to not make that data easily available. I [also] helped design an e-book, with over 50 actionable steps that you can take to take soda out of the home, schools and community.

SPhD: How has social media engagement via our phones and computers, directly alongside television watching, changed the metric for viewing popularity, content development and key outreach issues that you’re tackling with your ActionLab initiative?

NB: ActionLab does what we weren’t [previously] able to do, because we have the web platforms now to direct people in multi-directional ways. When I first started on ER in 1994, television and media were uni-directional endeavors. We provided the content, and the viewer consumed it. Now, with Twitter [as an example], we’ve moved from a uni-directional to a multi-directional approach. People are responding, we are responding back to them as the content creators, they’re giving us ideas, they’re telling us what they like, what they don’t like, what works, what doesn’t. And it’s reshaping the content, so it’s this very dynamic process now that didn’t exist in the past. We were really sheltered from public opinion. Now, public opinion can drive what we do and we have to be very careful to put on some filters, because we can’t listen to every single thing that is said, of course. But we do look at what people are saying and we do connect with them in ways they never had access to us before.

This multi-directional approach is not just actors and writers and directors discussing their work on social media, but it’s using all of the tools of the internet to build a new way of storytelling. Now, anyone can do their own shows and it’s very inexpensive. There are all kinds of YouTube shows on now that are watched by many people. It’s a kind of Wild West, where anything goes and I think that’s very exciting. It’s changed the whole world of how we consume media. I [wrote] an episode of ER 20 years ago with George Clooney, where he saved a young boy in a water tunnel, that was watched by 48 million people at once. One out of six Americans. That will never happen again. So, we had a different kind of impact. But now, the media landscape is fractured, and we don’t have anywhere near that kind of audience, and we never will again. It’s a much more democratic and open world than it used to be, and I don’t even think we know what the repercussions of that will be.

SPhD: If you had a wish list, what are some other issues or global obstacles that you’d love to see the entertainment world (and media) tackle more than they do?

NB: In terms of specifics, we really need to talk about civic engagement, and we need to tell stories about how [it] can change the world, not only in micro-ways, say like Habitat For Humanity or programs that make us feel better when we do something to help others, but in a macro policy-driven way, like asking how we are going to provide compulsory secondary education around the world, particularly for girls. How do we instate that? How do we take on child marriage and encourage countries, maybe even through economic boycotts, to raise the age of child marriage, a problem that we know places girls in terrible situations, often with no chance of ever making a good living, much less getting out of poverty. So, we need to think both macroly and microly in terms of our storytelling. We need to think about how to use the internet and crowdsourcing for public policy and social change. How can we amalgamate individuals to support [these issues]? We certainly have the tools now, with Facebook, Twitter and Instagram, and our friends and social networks, to spread the word – and a very good way to spread the word is through short stories.

SPhD: You’ve enjoyed a storied career, and achieved the pinnacle of success in two very competitive and difficult industries. What drives Dr. Neal Baer now, at this stage of your life?

NB: Well, I keep thinking about new and innovative ways to use transmedia. How do I use graphic novels in Africa to tell the story of HIV and prevention? How do we use cell phones to tell very short stories that can motivate people to go and get tested? Innovative financing to pay for very expensive drugs around the world? So, I’m very much interested in how storytelling gets the word out, because stories don’t just change minds, they change hearts. Stories tickle our emotions in ways that I think we don’t fully understand yet. And I really want to learn more about that. I want to know about what I call the “epigenetics of storytelling.” I’m writing a book about that, looking into research that [uncovers] how stories actually change our brain and how do we use that knowledge to tell better stories.

Neal Baer, MD, is a television writer and producer behind hit shows China Beach, ER, Law & Order: SVU, Under the Dome and others. He is a graduate of Harvard Medical School and completed a pediatrics internship at Children’s Hospital Los Angeles. A former co-chair of USC’s Norman Lear Center Hollywood, Health and Society, Dr. Baer is the founder of the Global Media Center for Social Impact at the Fielding School of Public Health at UCLA.

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

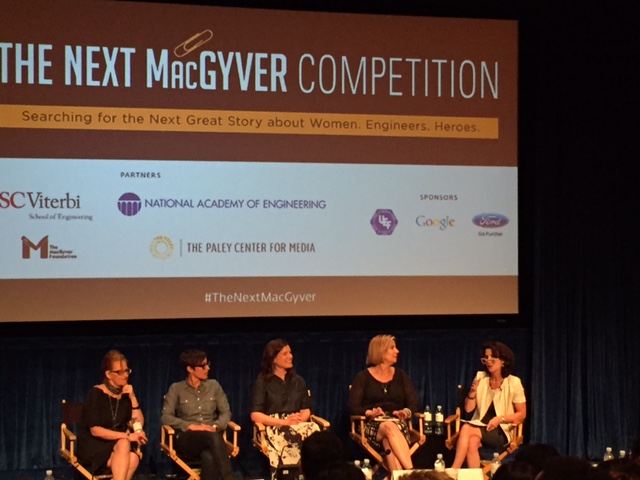

Engineering has an unfortunate image problem. With a seemingly endless array of socioeconomic, technological and large-scale problems to address, and with STEM fields set to comprise the most lucrative 21st Century careers, studying engineering should be a no-brainer. Unfortunately, attracting a wide array of students (or even getting people to see engineers as cool) remains difficult, most noticeably among women. When Google Research found that perception and on-screen stereotypes are the #2 reason girls avoid studying STEM fields, the company decided to work with Hollywood to change that. Google recently partnered with the National Academy of Sciences and USC’s prestigious Viterbi School of Engineering to proactively seek out ideas for a television program that would showcase a female engineering hero and inspire a new generation of female engineers. The project, entitled “The Next MacGyver,” came to fruition last week in Los Angeles at a star-studded event. ScriptPhD.com was extremely fortunate to receive an invitation and to interact with the leaders, scientists and Hollywood representatives who collaborated to make it all possible. Read our full coverage below.

“We are in the most exciting era of engineering,” proclaims Yannis C. Yortsos, Dean of USC’s Viterbi School of Engineering. “I look at engineering technology as leveraging phenomena for useful purposes.” These purposes have recently been unified as the 14 Grand Challenges of Engineering, spanning everything from securing cyberspace to reverse-engineering the brain to solving our environmental catastrophes to ensuring global access to food and water. These are monumental problems, and solving them will require a full-scale workforce. It’s no coincidence that STEM jobs are set to grow by 17% by 2024, more than any other sector. Recognizing this opportunity, the US Department of Education (in conjunction with the Science and Technology Council) launched a five-year strategic plan to prioritize STEM education and outreach in all communities.

Despite this golden age, in which the possibilities for STEM innovation seem as vast as the challenges facing our world, there is a disconnect in tapping the full range of talent for the next generation of engineers. There is a noticeable paucity of women and minority students studying STEM fields, with women accounting for just 18-20% of all STEM bachelor’s degrees, even though more students are earning STEM degrees than ever before. Madeline Di Nonno, CEO of the Geena Davis Institute on Gender in Media and a judge at the Next MacGyver competition, attributes much of the disinterest to a consistent lack of female STEM portrayals in television and film. “It’s a 15:1 ratio of male to female characters for just STEM alone. And most of the science related positions are in life sciences. So we’re not showing females in computer science or mathematics, which is where all the jobs are going to be.” Media portrayals of women (and by proxy minorities) in science remain shallow, biased and appearance-focused (as profiled in depth by Scientific American). Why does this matter? There is a direct correlation between positive media portrayal and STEM career participation.

It has been 30 years since the debut of television’s MacGyver, an action adventure series about clever agent Angus MacGyver, who works to right the wrongs of the world through innovation. Rather than using a conventional weapon, MacGyver thwarts enemies with his vast scientific knowledge, sometimes armed with no more than a paper clip, a box of matches and a roll of duct tape. Creator Lee Zlotoff notes that in those 30 years, the show has run continuously around the world, perhaps fueled in part by a love of MacGyver’s endless ingenuity. Zlotoff pointed out the uncanny parallels between MacGyver’s thought process and the scientific method: “You look at what you have and you figure out, how do I turn what I have into what I need?” Three decades later, in the spirit of the show, the USC Viterbi School of Engineering partnered with the National Academy of Sciences and the MacGyver Foundation to search for a new MacGyver: a television show centered around a female engineer protagonist who must solve problems, create new opportunities and, most importantly, save the day. The initiative started back in 2008 at the National Academy of Sciences, aiming to rebrand engineering entirely, away from geeks and techno-gadget builders and toward the much bigger impact that engineering technology has on the world: solving big, global societal problems. USC’s Yortsos says that this big picture resonates distinctly with female students who would otherwise be reluctant to choose engineering as a career. Out of thousands of submitted TV show ideas, twelve were chosen as finalists, each of whom was given five minutes to pitch to a distinguished panel of judges made up of writers, producers, CEOs and successful showrunners. Five winners will have the opportunity to pair with an established Hollywood mentor to write a pilot and showcase it for potential television production.

If The Next MacGyver feels far-reaching in scope, it’s because its aspirations stretch beyond simply getting a clever TV show on air. No less than the White House lent its support to the initiative, with an encouraging video from US Chief Technology Officer Megan Smith reiterating the importance of STEM to the future of the 21st Century workforce. As Al Romig, Executive Officer of the National Academy of Engineering, noted, the great 1950s and 1960s era of engineering growth was fueled by intense competition with the USSR. Now, we need to be unified and driven by the 14 Grand Challenges of Engineering and their offshoots. Part of that will include diversifying the engineering workforce and attracting new talent with fresh ideas. As I noted in a 2013 TEDx talk, television and film carry tremendous power and influence to fuel a passion for science. And the desire to marry engineering and television extends as far back as 1992, when Lockheed Martin’s Norm Augustine proposed a high-profile show named LA Engineer. Since then, he has remained a passionate advocate for elevating engineers to the highest ranks of decision-making, governance and celebrity status. Andrew Viterbi, namesake of USC’s engineering school, echoed this imperative to elevate engineering to “celebrity status” in a 2012 Forbes editorial. “To me, the stakes seem sufficiently high,” said Adam Smith, Senior Manager of Communications and Marketing at USC’s Viterbi School of Engineering. “If you believe that we have real challenges in this country, whether it is cybersecurity, the drought here in California, making cheaper, more efficient solar energy, whatever it may be, if you believe that we can get by with half the talent in this country, that is great. But I believe, and the School believes, that we need a full creative potential to be tackling these big problems.”

So how does Hollywood feel about this movement and the realistic goal of increasing its STEM content? “From Script To Screen,” a panel discussion featuring leaders in the entertainment industry, offered equal parts cautionary advice and hopeful encouragement for aspiring writers and producers. Ann Merchant, director of the Los Angeles-based Science and Entertainment Exchange, an offshoot of the National Academy of Sciences that connects filmmakers and writers with scientific expertise for accuracy, sees the biggest obstacle facing television depictions of scientists and engineers as a connectivity problem. Writers know so few scientists and engineers that they fall back on stereotypes in their writing or eschew the content altogether. Ann Blanchard of the Creative Artists Agency partly concurred, noting that writers are often so right-brain focused that they naturally gravitate toward telling creative stories about creative people. But Danielle Feinberg, a computer engineer and lighting director for Oscar-winning Pixar animated films, sees these misconceptions about scientists and what they do as an illusion. When people discover that they can combine these careers with what they are naturally passionate about to solve real problems, the work becomes both possible and exciting. ABC Family’s Marci Cooperstein, who oversaw and developed the crime drama Stitchers, centered around engineers and neuroscientists, remains optimistic about keeping the doors open and encouraging these types of stories, because the demand for new and exciting content is very real. With 42 scripted networks alone, and many more independent channels, she feels we should celebrate the diversity of science and medical programming that already exists and build from it. Put a room of writers and engineers together, and they will find a way to tell cool stories.

At the end of the day, Hollywood is in the business of entertaining, telling stories that reflect the contemporary zeitgeist and filling a demand for the subjects people are most passionate about. The challenge isn’t wanting such stories, but finding and showcasing them. The panel’s universal advice was to tell exciting stories centered around science characters who feel fresh, flawed and interesting. Be innovative, think about why people will care about a character and storyline enough to come back each week, and build in a central engine that will drive the show over several seasons. “Story does trump science,” Merchant pointed out. “But science does drive story.”

The twelve pitches represented a diverse mix of procedural, adventure and sci-fi plots, from writers with backgrounds in both traditional screenwriting and science. The five winners, as chosen by the judges and mentors, were as follows:

Miranda Sajdak — Riveting

Sajdak is an accomplished film and TV writer/producer and founder of the screenwriting services company ScriptChix. She proposed a World War II-era adventure drama centered around engineer Junie Duncan, who joins the military engineer corps after her fiancé is tragically killed on the front line. Her ingenuity in tackling engineering and technology development ultimately helps win the war.

Beth Keser, PhD — Rule 702

Dr. Keser, unique among the winners as the only pure scientist, is a global leader in the semiconductor industry and leads a technology initiative at San Diego’s Qualcomm. She proposed a crime procedural centered around Mimi, a brilliant scientist with dual PhDs who forgoes corporate life to become a traveling expert witness for the most complex criminal cases in the world, each of which must be investigated and unraveled.

Jayde Lovell — SECs (Science And Engineering Clubs)

Jayde, a rising STEM communication star, launched the YouTube pop science network “Did Someone Say Science?” Her show proposal is a fun fish-out-of-water drama about 15-year-old Emily, a pretty, popular and privileged high school student. After accidentally burning down her high school gym, she avoids expulsion only by joining the dreaded, geeky SECs club and, in turn, helps the club win an engineering competition while learning to be cool.

Craig Motlong — Q Branch

Craig is a USC-trained MFA screenwriter and now a creative director at a Seattle advertising agency. His spy action thriller centers on mad scientist Skyler Towne, an engineer leading a corps of researchers on the fringes of the CIA’s “Q Branch,” where they develop and test the gadgets that help agents stay three steps ahead of the biggest criminals in the world.

Shanee Edwards — Ada and the Machine

Shanee, an award-winning screenwriter, is the film reviewer at SheKnows.com and the host/producer of the web series She Blinded Me With Science. A fan of historical scientific figures, Shanee proposed a fictionalized series about real-life 1800s mathematician Ada Lovelace, famous for her work on Charles Babbage’s early mechanical general-purpose computer, the Analytical Engine. In this drama, Ada works with Babbage to help Scotland Yard fight opponents of the industrial revolution, exploring themes of technology ethics that remain relevant to our lives today.

Craig Motlong, one of five ultimate winners, and one of the few finalists with absolutely no science background, spent several months researching his concept with engineers and CIA experts to see how theoretical technology might be incorporated and utilized in a modern criminal lab. He told me he was equal parts grateful and overwhelmed. “It’s an amazing group of pitches, and seeing everyone pitch their ideas today made me fall in love with each one of them a little bit, so I know it’s gotta be hard to choose from them.”

Whether inspired by social change, pragmatic inquisitiveness or pure scientific ambition, this seminal event was ultimately both a cornerstone for strengthening a growing science/entertainment alliance and a deeply personal quest for all involved. “I don’t know if I was as wrapped up in these issues until I had kids,” said USC’s Smith. “I’ve got two little girls, and I tried thinking about what kind of shows [depicting female science protagonists] I should have them watch. There’s not a lot that I feel really good sharing with them, once you start scanning through the channels.” Motlong, the only male winner, was profoundly influenced by being surrounded by strong women, including a beloved USC screenwriting instructor. “My grandmother worked during the Depression and had to quit because her husband got a job. My mom had like a couple of options available to her in terms of career, my wife wanted to be a genetic engineer when she was little and can’t remember why she stopped,” he reflected. “So I feel like we are losing generations of talent here, and I’m on the side of the angels, I hope.” NAS’s Ann Merchant sees an overarching institutional vision for achieving the STEM goals set forth by this competition and influencing the next generation of scientists. “It’s why the National Academy of Sciences and Engineering as an institution has a program [The Science and Entertainment Exchange] based out of Los Angeles, because it is much more than this [single competition].”

Indeed, The Next MacGyver event, while glitzy and glamorous in a way befitting the entertainment industry, seemed to succeed wildly beyond its sponsors’ collective expectations. It was ambitious, sweeping and the first of its kind, and it required the collaboration of many academic, industry and entertainment partners. It might have the power to influence and transform an entire pool of STEM participants, the way ER and CSI sparked renewed interest in emergency medicine and forensic science and justice, respectively. If not this group of pitched shows, then the next. If not this group of writers, then the ones who come after them. Searching for a new MacGyver goes beyond finding an engineering hero for a new age with new, complex problems. It’s about being a catalyst for meaningful academic change and creative inspiration, or at the very least opening Hollywood’s eyes and time slots. Zlotoff, whose MacGyver Foundation supported the event and continually seeks to promote innovation and peaceful change through educational opportunities, recognized this in his powerful closing remarks. “The important thing about this competition is that we had this competition. The bell got rung. Women need to be a part of the solution to fixing the problems on this planet. [By recognizing that], we’ve succeeded. We’ve already won.”

The Next MacGyver event was held at the Paley Center For Media in Beverly Hills, CA on July 28, 2015. Follow all of the competition information on their site. Watch a full recap of the event on the Paley Center YouTube Channel.

*****************

On February 28, 1998, the revered British medical journal The Lancet published a brief paper by then-high-profile but controversial gastroenterologist Andrew Wakefield that claimed to have linked the MMR (measles, mumps and rubella) vaccine with regressive autism and inflammation of the colon in a small number of children. A subsequent paper published four years later claimed to have detected the attenuated measles virus strain used in the MMR vaccine in the colons of autistic children using polymerase chain reaction (PCR) amplification. The effect on vaccination rates in the UK was immediate, with MMR vaccinations reaching a record low in 2003/2004, and parts of London losing herd immunity with vaccination rates of 62%. Fifteen American states currently have immunization rates below the recommended 90% threshold. Wakefield was eventually exposed as a scientific fraud and an opportunist who sought to cash in on people’s fears with ‘alternative clinics’ and who had planned a ‘safe’ vaccine of his own before the Lancet paper was ever published. Even the 12 children in his study turned out to have been selectively referred by parents convinced of a link between the MMR vaccine and their children’s autism. The original Lancet paper was retracted and Wakefield was stripped of his medical license. By that point, however, the damage had been done, and it may take decades to reverse.

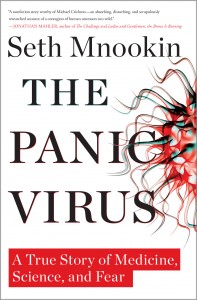

How could a single fraudulent scientific paper, which could not be replicated or validated by the medical community, cause such widespread panic? How could it influence legions of otherwise rational parents to not vaccinate their children against devastating, preventable diseases, at a cost of millions of dollars in treatment and, worse yet, unnecessary child fatalities? And why, despite all evidence to the contrary, do people remain adamant in their belief that vaccines harm otherwise healthy children, whether through autism or other insidious side effects? In his brilliant, timely, meticulously researched book The Panic Virus, author Seth Mnookin dissects the aggregate effect of media coverage, echo-chamber information exchange, cognitive biases and the desperate anguish of autism parents as fuel for the recent anti-vaccine movement. In doing so, he retraces the triumphs and missteps in the history of vaccines, examines the social impact of rejecting the scientific method from a broader perspective, and considers how future crises like this utterly preventable one might be avoided. A review of The Panic Virus, an enthusiastic ScriptPhD.com Editor’s Selection, follows below.

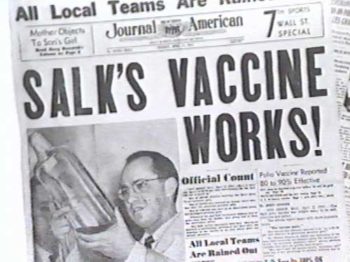

Such fervent controversy over inoculating young children against communicable diseases might have seemed unimaginable to pre-vaccine generations. Not long ago, Mnookin chronicles, death and suffering at the hands of diseases like polio and smallpox were the accepted norm. In 18th Century Europe, for example, 400,000 people died of smallpox every year, and the disease caused one third of all cases of blindness. So desperate were people to avoid the illnesses’ ravages that crude, rudimentary inoculation methods were employed, even at a high risk of death, to achieve life-long immunity. A 1916 polio outbreak in New York City, with fatality rates between 20 and 25 percent, frayed nerves and public health infrastructure to the point of near-anarchy. As the disease waxed and waned in outbreaks throughout the decades that followed, distraught parents had no idea how to protect their children, who were often far more susceptible to fatality than adults. When Jonas Salk’s polio vaccine was announced as “safe, effective and potent” on April 12, 1955, pandemonium broke out. “Air raid sirens were set off,” Mnookin writes. “Traffic lights blinked red; churches’ bells rang; grown men and women wept; schoolchildren observed a moment of silence.” Salk’s discovery was hailed as “one of the greatest events in the history of medicine.”

Mnookin doesn’t let scientists off the hook where vaccines are concerned, however, and rightfully so. Starting around World War II, as advances such as the cowpox and polio vaccines, along with the dawn of the Antibiotics Age, began to eliminate much of the death and suffering caused by communicable diseases and bacterial infections, a hubris and sense of superiority crept into the scientific establishment, with dangerous consequences. In 1941, fearing the threat of biological warfare, the US military hastily launched a campaign to vaccinate all US troops against yellow fever; batches contaminated with hepatitis B resulted in 300,000 infections and 60 deaths. The first iteration of Salk’s polio vaccine was only 60-90% effective before being perfected and eventually replaced by the more effective Sabin vaccine. Furthermore, dozens of children who received doses from the first batches were paralyzed or killed by contaminated vaccine that had failed safety tests. In 1976, spurred by the death of a soldier from a flu virus that bore a striking genetic similarity to the 1918 Spanish flu strain, President Gerald Ford instituted a nationwide mass vaccination initiative against a “swine flu” epidemic. Unfortunately, although 40 million Americans were vaccinated in three months, 500 developed symptoms of Guillain-Barré syndrome (30 died), a rate seven times higher than would normally be expected as a rare side effect of vaccination. Many people feel that the 1976 fiasco left a permanent distrust of the medical establishment that has haunted public health influenza immunization efforts to this day.

These black marks on the otherwise miraculous, life-saving history of vaccine development not only instilled a gradual mistrust in public health officials, but laid the groundwork for the incendiary autism-vaccine scandal. The only missing components were a proper context of panic, a snake oil salesman and a compliant media willing to spread his erroneous message.

Enter the autism epidemic and Andrew Wakefield’s hoax. Because this seminal event had such a profound effect on the formation and proliferation of the current anti-vaccine movement, Mnookin chronicles it in far greater detail than our introduction above. From the precursor incidents that ripened the potential for coercion to Wakefield’s shoddy methodology and the naive medical community that took him at his word, Mnookin weaves through this case with well-researched scientific facts, interesting interviews and logic. A large chunk of the book is devoted to the psychology of what the anti-vaccine movement really is: a cognitive bias and a willingness to stay adamant in the belief that vaccines cause harm despite all evidence to the contrary. “If you assume,” he writes, “as I had, that human beings are fundamentally logical creatures, this obsessive preoccupation with a theory that has for all intents and purposes been disproved is hard to fathom. But when it comes to decisions around emotionally charged topics, logic often takes a back seat to a set of unconscious mechanisms that convince us that it is our feelings about a situation and not the facts that represent the truth.”

Given this blog’s objective to cover science and technology in entertainment and media, it would be disingenuous to write about the anti-vaccine movement without recognizing the implicit role played by the media and entertainment industries in exacerbating the polemic. By lending a voice to the anti-vaccine argument, even subtly or in a journalistic attempt to “be fair to the other side,” these industries helped turn an echo chamber of lies into an inferno over time. In 1982, an hour-long NBC documentary called DPT: Vaccine Roulette aired, presenting rare side effects of vaccination in babies to a nation of alarmed parents, overemphasizing those risks and completely undermining the vaccine’s benefits. It was a propaganda piece, but an important harbinger of what would come later. A 2008 episode of the popular ABC show Eli Stone irresponsibly aired anti-vaccination propaganda involving a lawyer questioning a pharmaceutical company that manufactures vaccines over the link to autism, which had been debunked even then. For several years, actress and former Playboy Playmate Jenny McCarthy (to whom Mnookin devotes an entire chapter) was a tireless advocate against vaccinations, believing that they gave her son autism. She had no scientific proof for this, but was nevertheless given a platform by everyone from Larry King on CNN to a fawning Oprah Winfrey.

As it turns out, McCarthy’s son never even had autism, but rather a very rare and treatable neurological disorder. In a self-penned editorial for the Chicago Sun-Times, she retroactively disavowed her anti-vaccine stance, saying she simply wants “more research on their effectiveness.” An extremely sympathetic eight-page 2014 Washington Post Magazine profile of prominent anti-vaccine activist Robert F. Kennedy, Jr. (who believes in the link between vaccines and autism) repeated his talking points numerous times. It was one of an endless cycle of interviews and appearances by defiant anti-vaccine proponents, given equal air time side-by-side with frustrated scientists, as if both positions were somehow viable and worthy of journalistic debate. Once the genie was out of the bottle, no amount of rational discourse could temper the visceral antipathy that had been created. This is irresponsible, dangerous and flat-out wrong. When the public is confused about an esoteric issue pertaining to science, medicine or technology, influencers in the public eye must not perpetuate misinformation.

Despite the unanimous medical repudiation of Wakefield’s fraudulent methods and conclusions and the retraction of his Lancet paper, an irreversible and insidious myth had begun to spread, first among the autism community, then to proponents of organic and holistic approaches to health and finally to mainstream society. In the aftermath of the controversy, epidemiological studies debunking the autism-vaccination “link,” combined with a growing disease crisis, have forced the largest US-based autism advocacy organization to reverse its stance and fully endorse vaccination to a still-divided community. Wakefield remains more defiant than ever, insisting to this day that his research was valid, attempting to sue the British journalism outlet that funded the inquiry into his fraud and peddling holistic treatments for autism as well as his “alternative” vaccine. Sadly, the public health ramifications have been nothing short of disastrous, with a dangerous recurrence of several major childhood diseases.

A few examples of the many systemic casualties of the anti-vaccination movement (many occurring just since the publication of Mnookin’s book):

• A summary from the American Medical Association about the nascence of the measles crisis in 2011, when the US saw more measles cases than it had in 15 years

• Immunization rates falling so low that schools in some communities are being forced to terminate personal exemption waivers and, in some cases, to legally mandate immunization for public school attendance

• California’s worst whooping cough epidemic in 70 years

• Most recently, a measles outbreak at Disneyland, resulting in 26 cases spread across four states, after an unvaccinated woman visited the theme park

• Anti-vaccine hysteria spreading to Europe, which has seen a 348% rise in measles from 2013 to 2014 (and growing), along with an alarming resurgence of pertussis

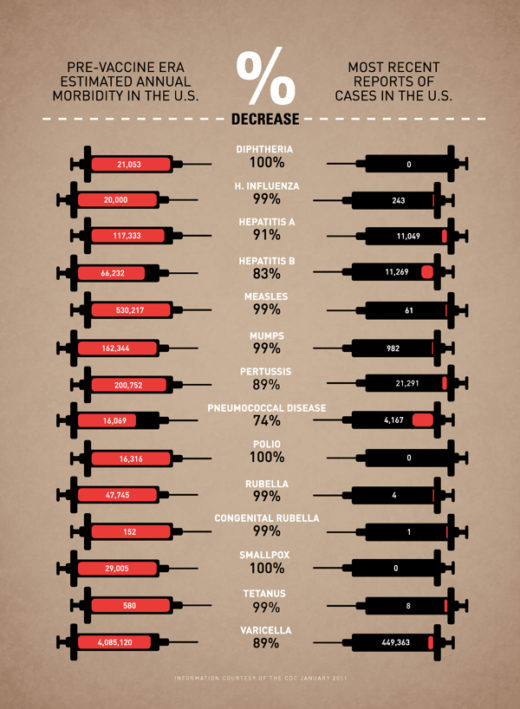

The scientific evidence that vaccines work is indisputable, and as the accompanying infographic summarizes, their impact on morbidity from communicable diseases is miraculous. Sadly, now that the anti-vaccine movement has seeped into the general population, anxious parents are conflicted as to whether vaccinating is the right choice for their children. We must start by going back to the basics of what a vaccine actually is and how it works. Next, we must reiterate the critical role that maintaining herd immunity above 92-95% plays in protecting not only those too young or immunocompromised to be vaccinated, but even fully vaccinated populations. If all else fails, try emailing skeptical friends and family a clever graphic cartoon that breaks down vaccine facts into digestible pieces. Simply put: refusing vaccination is not a personal choice; it’s a selfish and dangerous one.

The Panic Virus is first and foremost an incredibly entertaining, well-written narrative of the dawn of an anti-vaccine phenomenon that has reached critical mass. It is also an important case study and cautionary tale about how we process and disseminate information in the age of the Internet and instant access to information. And it is an indictment of a trigger-happy, ratings-driven, sensationalist media that reports “news” as it interprets it first and bothers to check the facts later. In the case of the anti-vaccine movement of the last few years, the media fueled the fire that Andrew Wakefield started, and once a gaggle of angry, sympathetic parents was unleashed, it was difficult (if not nearly impossible) to undo the damage. This type of journalism, Mnookin writes, “gives credence to the belief that we can intuit our way through all the various decisions we need to make in our lives and it validates the notion that our feelings are a more reliable barometer of reality than the facts.” Sadly, the autism-vaccine panic is not an outlying incident, but rather a disconcerting emblem of a growing anti-science agenda. The UN just released its most dire and alarming report ever on the impacts of man-made climate change, warning that temperature changes and industrial pollution will affect not just the environment, extreme weather events and coastal cities, but even the stability of our global economy itself. Immediate rebuttals from an influential lobbying group tried to undermine the majority of the scientists’ findings. So toxic is the corporate and political resistance to any kind of mitigating action that some feel we need a technological or political miracle to stave off a certain environmental crisis. This is a time when physicists are engaged in serious debate on evolution versus creationism and thousands of public schools across the United States use taxpayer funds to teach creationism in the classroom.

Mnookin’s book is an important resource and conversation starter for scientists, researchers and frustrated physicians as they carve out talking points and communication strategies to establish a dialogue with the public at large. When young parents have questions about vaccines (no matter how erroneous or ill-informed), pediatricians should already have materials on hand for a positive, thoughtful discussion with them. When scientists and researchers encounter anti-science proclivities or subversive efforts to undermine their advocacy for a pressing issue, they should be backed by powerful, articulate communicators, ready and willing to deliberate in the media and convey factual information in an accessible way. When Jenny McCarthy and a gaggle of new-age holistic herbologists were peddling their “mommy instincts” and conspiracy theories against vaccines, far too many scientists and physicians simply thought it was beneath them to even engage in a discussion about something whose certainty and proof of concept were beyond reproach. Now, the newest polling suggests that nothing will change an anti-vaxxer’s mind, not even factual reasoning. Going forward, regardless of the issue at hand, this type of response must never happen again. The cost of complacency or arrogance is nothing short of life or death.

The Panic Virus by Seth Mnookin is currently available in paperback and on Kindle wherever books are sold. For further reading on the complexities of the anti-vaccine movement’s aftermath, we suggest the recent book On Immunity: An Inoculation by Northwestern University lecturer Eula Biss.

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

The history of science movies nominated for Oscars is not a very long one. Aside from the technical achievement awards or an occasional acting nomination, the Best Picture category has historically not opened its doors to scientific content, save for notable nominees “A Clockwork Orange,” “District 9,” “Inception” and “Avatar.” Until this year, a documentary about science had never been nominated for Best Documentary. That changed with How To Survive a Plague, director David France’s stunning account of the brave activists who brought the AIDS epidemic to the attention of the government and the scientific community in the disease’s darkest early days. “Plague” made history last weekend by becoming the first Best Documentary nominee with an almost entirely scientific/biomedical narrative. More importantly, it also set a standard for how future science documentaries can use emotional storytelling to captivate audiences and inspire action. ScriptPhD review and discussion under the “continue reading” cut.

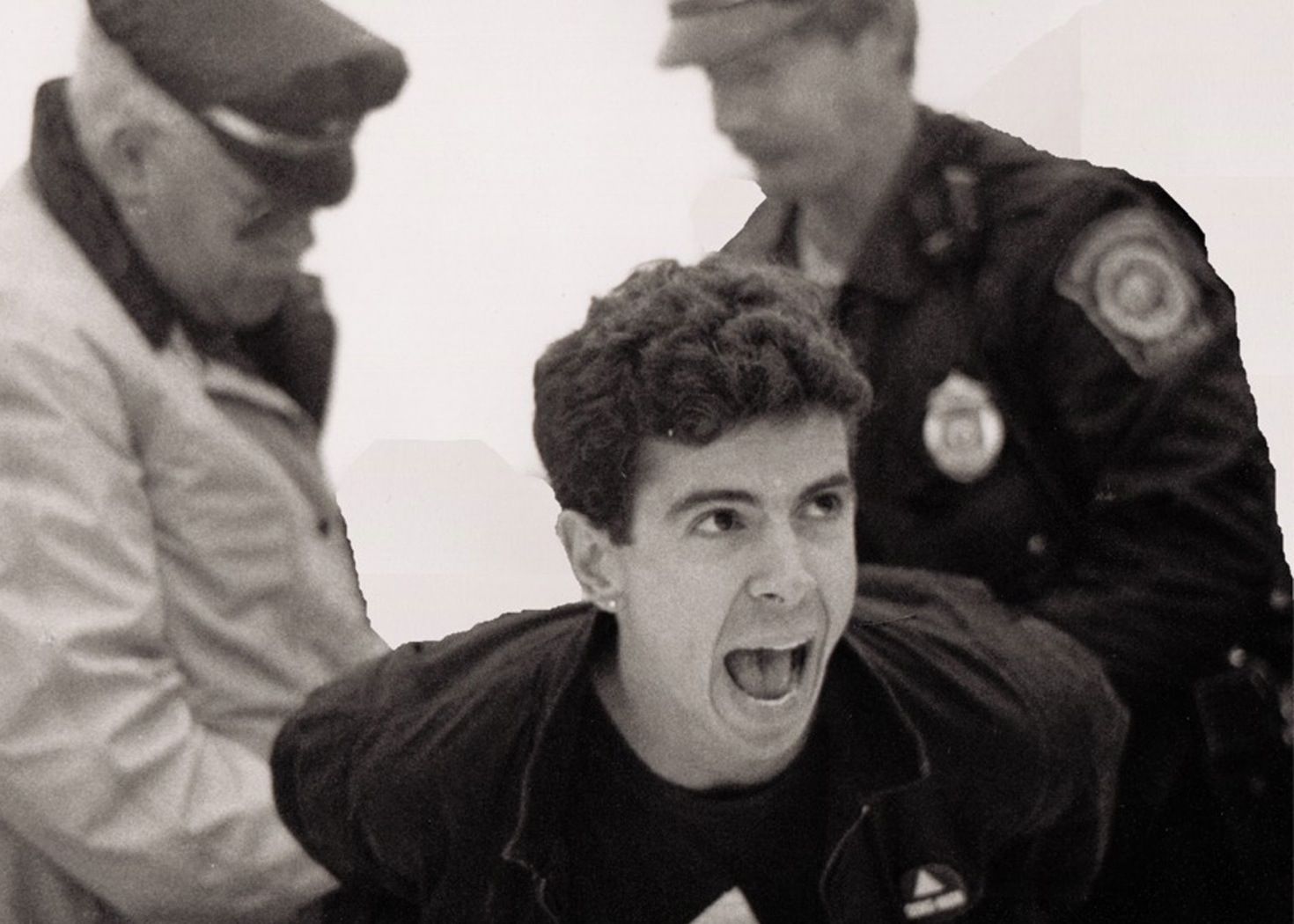

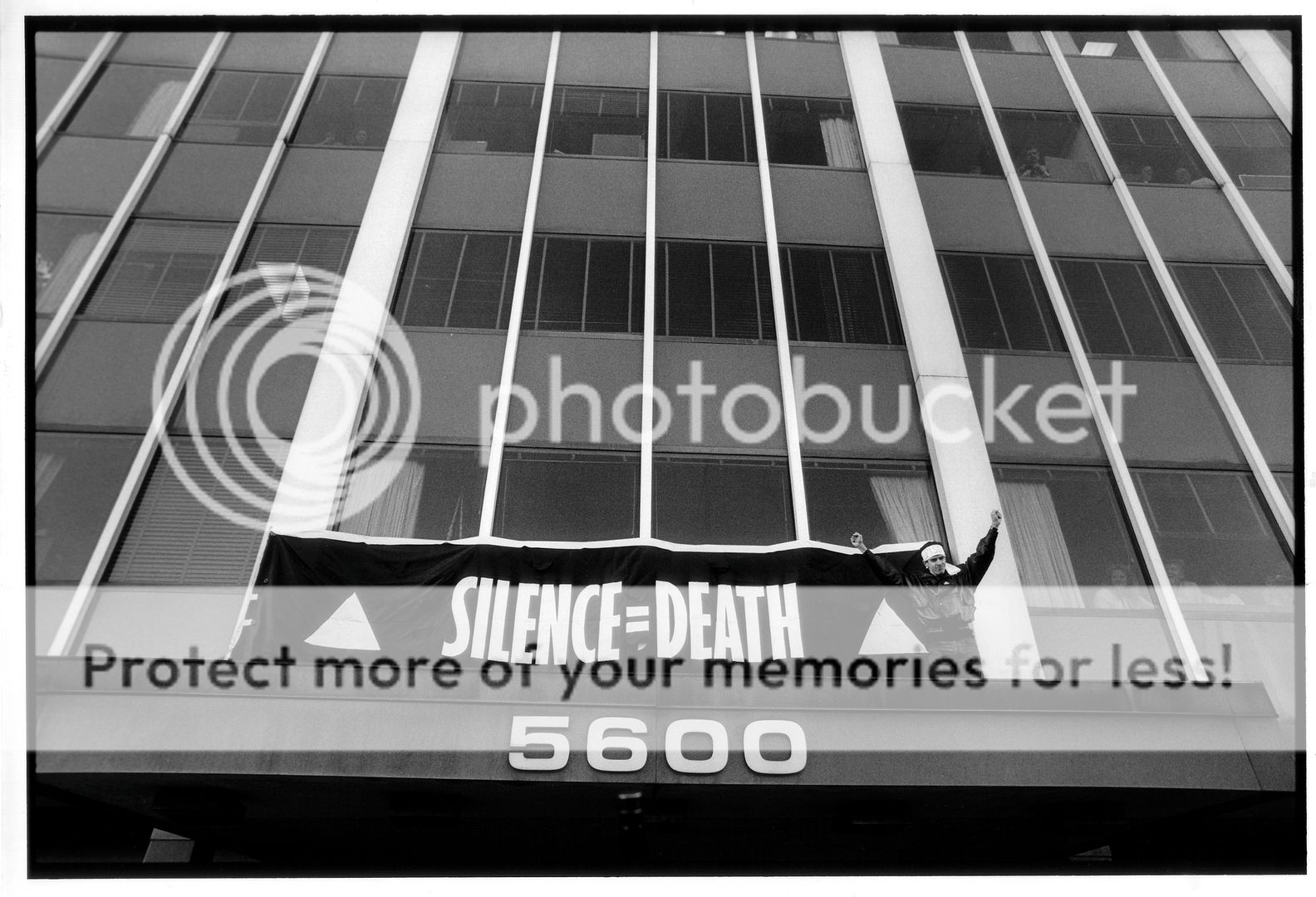

“How To Survive a Plague” picks up where groundbreaking companion AIDS film “And the Band Played On” leaves off, around 1987, with the formation of the AIDS Coalition to Unleash Power (ACT UP) advocacy group, the documentary’s central protagonist. The AIDS virus had been identified and isolated, and patients were being diagnosed. But as a running ticker throughout the movie reminds us, the AIDS death toll knew no limit. By 1988, 70,000 people had already perished, a number that would climb to an astonishing 500,000 by 1997. Complacency and frustration were the norm among medical professionals, who treated patients with a series of “what the hell” drugs, when they would even consider treating them. The scientific community, although it recognized that research was necessary, devoted little money or manpower to it. Even early drugs that showed experimental efficacy or relieved symptoms in AIDS patients were dismissed.

Scientists and the government were the targets of ACT UP’s fury and protests.

But by the late 1980s and early 1990s, ACT UP’s mission had reached a critical Phase II: conformity. Extremists and truculent zealots were relegated to the sidelines, while members became self-taught scientists, learning everything from medicine to virology, immunology and chemistry. Rather than shut down the FDA for a day as they had done years earlier, they showed up to a scientific meeting in suits and ties to hand out a well-thought-out, publication-worthy proposal on AIDS research and treatment timelines. Impressed scientists took note. By the time charming ACT UP leader Peter Staley addressed an international convention of the American Society for Microbiology, he was given a standing ovation. ACT UP’s fight, the fight of the gay community, had now become a global fight.

In many ways, “How To Survive a Plague” is an emotional contrast to “And the Band Played On,” even though the former is a documentary consisting largely of archival footage of the events it portrays, while the latter is a dramatized account of scientists racing to identify the AIDS virus. Although “Band” touches briefly on the fear, government insouciance and distrust within the gay community in the earliest days of the burgeoning epidemic, it is very much a pure science film. Its themes of persistence and no-holds-barred competition, its stunningly accurate epidemiology and virology details, and its race to an answer could apply to any virus in any era. “Plague” puts all of the science and medicine of the AIDS crisis in an emotional and historical context. A running death ticker as the years pass lends urgency to the battle of the ACT UP activists. Moreover, France inserts actual footage of their protests (the most famous being an all-day takeover of the National Institutes of Health), meetings and press conferences, along with difficult-to-watch footage of the ravages of AIDS that shines an intimate, realistic spotlight on the crisis. As France notes, the AIDS crisis burgeoned just as the camcorder arrived, making early AIDS activists “the very first social movement to shoot a world the dominant culture was ignoring.”

Scientists in the movie range from heroes and anti-heroes to ordinary people, a rarity in entertainment media. By far the biggest hero is Dr. Iris Long, a chemist with 20 years of experience in retroviral drug development. Although she knew no one with AIDS and had never met a gay person in her life, Dr. Long became a mentor and science advisor to ACT UP. Her fearless leadership and ability to educate the members led to direct reforms at the FDA and NIH. Other members like Bill Bahlman (the first to demand a direct drug treatment for AIDS) and Garance Franke-Ruta (a high school dropout and science nerd who became the group’s leading advocate for science-based activism) led the internal change to join forces with scientists rather than fight them. And for every scientist who ignored the AIDS crisis, there was a research pioneer like Anthony Fauci, now head of the NIH’s National Institute of Allergy and Infectious Diseases, or a Merck chemist leading the development of anti-retroviral drugs. Moments after a graphics-filled technical explanation of how anti-retroviral drugs inhibit HIV replication, one of the Merck scientists interviewed in the film breaks down in tears while recalling the enormity of what they had accomplished. It’s a stunning, raw moment in a film filled with them. Recent sci-fi writing has offered more complex, human depictions of scientists and researchers, but such insights are far too rare in documentaries.

In a strangely macabre way, “Plague” is an emotional feel-good story, but one that isn’t over yet. Through the darkest days of rallying a tone-deaf world, all the while losing members day by day, ACT UP’s commitment and perseverance never failed. By the time surviving members, some of whom admitted on camera that they never expected to live, are finally shown in the present day in the film’s last act, the audience is flooded with gratitude and catharsis. The scientific world, which once didn’t know what to make of this emerging virus, took only one year after the first protease inhibitor hit the market to develop and approve the current three-drug treatment cocktail.

But the film’s unstated, looming conclusion is that we will never get back the millions of people who died during a decade of silence. Too many people continue to perish, most in what has become a new frontier for the AIDS crisis. The fight for a cure or prevention is not over. And a new plague could always be around the corner. It is our hope that future documentarians take note of both the film’s message and its delivery style.

Take a look at the official trailer for “How To Survive a Plague”:

~*ScriptPhD*~

*****************


Subscribe to free email notifications of new posts on our home page.

“The wars of the 21st Century will be fought over water.” —Ismail Serageldin, World Bank

Watching the devastation and havoc caused by Hurricane Sandy and several other recent water-related natural disasters, it’s hard to imagine that global water shortages represent an environmental crisis on par with climate change. But if current water usage habits do not change, or if major technological advances to help recycle water are not implemented, this is precisely the scenario we face: a majority of 21st Century conflicts being fought over water. From the producers of the socially conscious films An Inconvenient Truth and Food, Inc., Last Call at the Oasis is a timely documentary that chronicles current challenges in the worldwide water supply, outlines the variables that contribute to chronic shortages and interviews leading environmental scientists and activists about the ramifications of chemical contamination in drinking water. More than just an environmental polemic, Last Call is a stirring call to action for engineering and technology solutions to a decidedly solvable problem. A ScriptPhD.com review under the “continue reading” cut.

Although water covers about 70% of the Earth’s surface, only about 0.7% of it (less than 1% of the total supply) is fresh and potable, which presents a considerable resource challenge for a global population expected to hit 9 billion people by 2050. In a series of profiles of some of the world’s most populous metropolises, Last Call vividly demonstrates that stark imagery of water shortages is no longer confined to third world countries or to women traveling miles with a precious gallon of water perched on their heads. The Aral Sea, a critical climate buffer for Russia and its Central Asian neighbors, is one-half its original size and devoid of fish. The worst global droughts in a millennium have increased food prices by 10% and raised a very real prospect of food riots. Urban water shortages, such as an epic 2008 shortage that forced Barcelona to import emergency water, will become far more common. The United States, by far the biggest consumer of water in the world, could also face the biggest impact. Lake Mead, the largest reservoir in America and the source of water for the electricity-generating Hoover Dam, is only 40% full. Hoover Dam, which stops generating electricity when the lake level falls to 1,050 feet, faces that daunting prospect in less than four years!

One strength of Last Call is that it is framed around a fairly uniform and well-substantiated hypothesis: water shortage is directly related to profligate, unsustainable water usage. Some uses, such as agriculture and food production, which account for 80% of water consumption, merit evaluation for future conservation methods. California and Australia, two agricultural behemoths half a world apart, face similar threats to their industries. Others, such as watering lawns, are unnecessary habits that can be reduced or eliminated. Toilets, most of which still flush 6 gallons per use, are the single biggest user of water in our homes: 6 billion gallons per day! The US is also the largest consumer of bottled water in the world, with $11 billion in sales, even though bottled water falls under the jurisdiction of the FDA rather than the EPA, which regulates municipal tap water. As chronicled in the documentary Tapped, 45% of all bottled water starts off as tap water, and bottled water has been subject to more than 100 recalls for contamination.

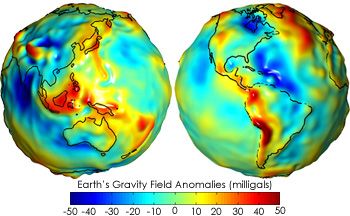

A cohort of science and environmental experts bolsters Last Call’s message with the latest scientific research in the area. NASA scientists at the Jet Propulsion Laboratory are using the Gravity Recovery and Climate Experiment (GRACE) satellite mission to measure changes in the planet’s water, including water depletion, sea-level rise and ocean circulation, through a series of gravity maps. Erin Brockovich, famously portrayed by Julia Roberts in the eponymous film, appears throughout the documentary to discuss still-ongoing issues with water contamination, corporate pollution and lack of EPA regulation. UC Berkeley biologist Tyrone Hayes expounds on what we can learn from genetic irregularities in amphibians found in contaminated habitats.

Take a look at a trailer for Last Call at the Oasis:

Indeed, chemical contamination is the only issue that supersedes overuse as a threat to our water supply. Drugs, antibiotics and other chemicals, which sewage treatment plants cannot remove, are increasingly finding their way into the water supply, many of them at the hands of large corporations. Between 2004 and 2009, there were half a million violations of the Clean Water Act. Last Call doesn’t spare the eye-opening details that will make you think twice when you take a sip of water. Atrazine, for example, is the best-selling pesticide in the world and the most widely used on the US corn crop. Unfortunately, it has also been associated with breast cancer and altered testosterone levels in aquatic life, and its safety is being investigated by the EPA, with a decision expected in 2013. More disturbing is the contamination from concentrated animal feeding operations (CAFOs) near major rivers and lakes. Tons of manure from cows, each of which produces as much waste as 23 humans, are dumped into artificial lagoons that then seep into interconnected groundwater supplies.

It’s not all doom and gloom with this documentary, however. Unlike other polemics in its genre, Last Call doesn’t simply outline the crisis; it also offers implementable solutions and a challenge for an entire generation of engineers and scientists. At the top of the list is greater scrutiny of polluters and the pollutants they release into the water supply with impunity. But solutions such as recycling sewage water, which has made Singapore a global model for water technology and reuse, are at our fingertips if developed and marketed properly. The city of Los Angeles has already announced plans to recycle 4.9 billion gallons of wastewater by 2019. Last Call is an effective final call to save a fast-dwindling resource through science, innovation and conservation.

Last Call at the Oasis was released on DVD on November 6.

~*ScriptPhD*~

*****************


