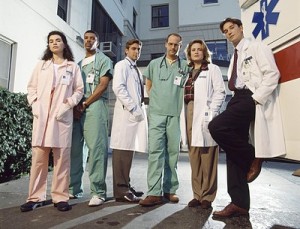

It has become standard practice for modern medical (or scientifically relevant) shows to rely on a team of advisors and experts for maximal technical accuracy and verisimilitude on screen. Many of these shows have become so culturally embedded that they’ve changed people’s perceptions and influenced policy. Even the Gates Foundation has partnered with popular television shows to embed important storyline messages pertinent to public health, HIV prevention and infectious diseases. But this was not always the case. When Neal Baer joined ER as a young writer and simultaneous medical student, he became the first technical expert to be incorporated as an official part of a production team. His subsequent body of work has not only reshaped the integration of socially relevant issues into television content, but also ushered in an age of public health awareness in Hollywood, and outreach beyond it. Dr. Baer sat down with ScriptPhD to discuss how lessons from ER have fueled his public health efforts as a professor and founder of UCLA’s Global Media Center For Social Impact, including storytelling through public health metrics and leveraging digital technology to propel action.

Neal Baer’s passion for social outreach and for lending a voice to vulnerable and disadvantaged populations was instilled in him from a very young age. “My mother was a social activist from as long as I can remember. She worked for the ACLU for almost 45 years and she participated in and was arrested for the migrant workers’ grape boycott in the 60s. It had a true and deep impact on me that I saw her commitment to social justice. My father was a surgeon and was very committed to health care and healing. The two of them set my underlying drives and goals by their own example.” Indeed, his diverse interests and innate curiosity led Baer to study writing at Harvard and the American Film Institute and, eventually, medicine at Harvard Medical School. What might have been a professional dichotomy instead gave him the perfect niche – medical storytelling – which he parlayed into a critical role on the hit show ER.

During his seven-year run as medical advisor and writer on ER, Baer helped steer the show to indisputable influence and critical acclaim. Through important, germane storylines and health messages that educated and resonated with viewers, ER’s authenticity caught the attention of the health care community and inspired many television successors. “It had a really profound impact on me, that people learn from television, and we should be as accurate as possible,” Baer reflects. “[Viewers] believe it’s real, because we’re trying to make it look as real as possible. We’re responsible, I think. We can’t just hide behind the façade of: it’s just entertainment.” As show runner of Law & Order: SVU, Baer spearheaded a storyline about rape kit backlogs in New York City that led to a real-life push to clear 17,000 backlogged kits and to the establishment of a foundation that will help other major US cities do the same. With the help of the CDC and USC’s prestigious Norman Lear Center, Baer launched Hollywood, Health and Society, which has become an indispensable and inexhaustible source of expert information for entertainment industry professionals looking to incorporate health storylines into their projects. In 2013, Baer co-founded the Global Media Center For Social Impact at UCLA’s School of Public Health, with the aim of addressing public health issues through a combination of storytelling and traditional scientific metrics.

Soda Politics

One of Baer’s signature accomplishments at the helm of the Global Media Center was convincing public health activist Marion Nestle to write the book Soda Politics: Taking on Big Soda (And Winning). Nestle has a long and storied career in food and social policy work, including the seminal book Food Politics. Baer first took note of the nutritional and health impact soda was having on children in his pediatrics practice. “I was just really intrigued by the story of soda, and the power that a product can have on billions of people, and make billions of dollars, where the product is something that one can easily live without,” he says. That story, as told in Soda Politics, is a powerful indictment of soda’s deleterious contribution to the United States’ obesity crisis, environmental damage and the political exploitation of sugar producers, among others. More importantly, it’s a chronicle of the dubious marketing strategies, insider lobbying power and subversive “goodwill” campaigns employed by Big Soda to broaden brand loyalty.

Even more than a public health cautionary tale, Soda Politics is a case study in the power of activism and persistent advocacy. According to a New York Times exposé, the drop in soda consumption represents the “single biggest change in the American diet in the last decade.” Nestle meticulously details the exhaustive, persistent and unyielding efforts that have collectively chipped away at Big Soda’s hegemony: highly successful soda taxes that have curbed consumption and obesity rates in Mexico, public health efforts to curb soda access in schools and advertising that specifically targets young children, and emotion-based messaging that has increased public awareness of soda’s harmful effects and shifted preference towards healthier options, notably water. And as soda companies inevitably shift advertising and sales strategy towards , as well as underdeveloped nations that lack access to clean water, the lessons outlined in Soda Politics, which will soon be adapted into a documentary, can be implemented on a global scale.

ActionLab Initiative

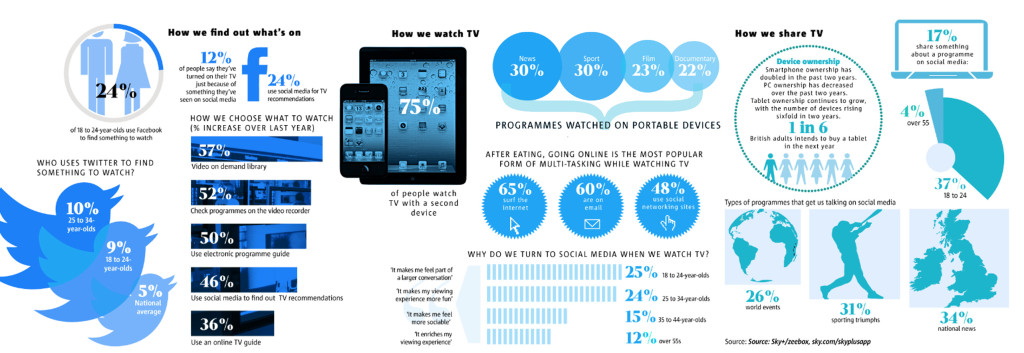

Few technological advancements have had as profound an impact on television consumption and creation as the evolution of digital transmedia and social networking. The (fast-crumbling) traditional model of television was linear: content was produced and broadcast by a select conglomerate of powerful broadcast networks, and somewhat less powerful cable networks, for direct viewer consumption, measured by demographic ratings and advertising revenue. This model has been disrupted by web-based streaming services such as YouTube, Netflix, Hulu and Amazon, which, in conjunction with fractionated networks, will soon displace traditional TV watching altogether. At the same time, this shifting media landscape has fostered a powerful new dynamic among the public: engagement. On-demand content has not only broadened access to high-quality storytelling platforms, but also provided more diverse opportunities to tackle socially relevant issues. This is buoyed by the increased role of social media as an entertainment vector. Social media raises awareness of TV programs (and influences Hollywood content), but it also fosters intimate, influential and symbiotic conversation alongside the very content it promotes. Enter ActionLab.

One of the central pillars of the Global Media Center at UCLA, ActionLab aims to bridge the gap between popular media and social change on topics of critical importance. People often find inspiration in watching a show, reading a book or even seeing an important public advertising campaign, and feel compelled to act. However, they don’t have the resources to translate that desire for action into direct results. “We first designed ActionLab about five or six years ago, because I saw the power that the shows were having – people were inspired, but they just didn’t know what to do,” says Baer. “It’s like catching lightning in a bottle.” As a pilot program, the site will offer pragmatic, interactive steps that people can take to change their lives, families and communities. ActionLab offers personalized campaigns centered around specific inspirational projects Baer has been involved in, such as the Soda Politics book, the If You Build It documentary and a collaboration with New York Times columnist Nicholas Kristof on his book/documentary A Path Appears. As the initiative expands, however, more entertainment and media content will be tailored towards specific issues, such as wellness, female empowerment in poor countries, eradicating poverty and community-building.

“We are story-driven animals. We collect our thoughts and our memories in story bites,” Baer notes. “We’re always going to be telling stories. We just have new tools with which to tell them and share them. And new tools where we can take the inspiration from them and ignite action.”

Baer joined ScriptPhD.com for an exclusive interview, where he discussed how his medical education and the wide-reaching impact of ER influenced his social activism, why he feels multi-media and cross-platform storytelling are critical for the future of entertainment, and his future endeavors in bridging creative platforms and social engagement.

ScriptPhD: Your path to entertainment is an unusual one – not too many Harvard Medical School graduates go on to produce and write for some of the most impactful television shows in entertainment history. Did you always have this dual interest in medicine and creative pursuits?

Neal Baer: I started out as a writer, and went to Harvard as a graduate student in sociology, [where] I started making documentary films because I wanted to make culture instead of studying it from the ivory tower. So, I got to take a documentary course, and it’s come full circle because my mentor Ed Pincus recently made his last film, “One Cut, One Life,” on which I was a producer, before his death from leukemia. That sent me to film school at the American Film Institute in Los Angeles as a directing fellow, which then sent me to write and direct an ABC after-school special called “Private Affairs” and to work on the show China Beach. I got cold feet [about the entertainment industry] and applied to medical school. I was always interested in medicine. My father was a surgeon, and I realized that a lot of the [television] work I was doing was medically oriented. So I went to Harvard Medical School thinking that I was going to become a pediatrician. Little did I know that my childhood friend John Wells, who had hired me on China Beach, would [also] hire me on “ER” by sending me the script, originally written by Michael Crichton in 1969, and dormant for 25 years until it was discovered in a trunk owned by Steven Spielberg. [Wells] asked me what I thought of the script and I said “It’s like my life only it’s outdated.” I gave him notes on how to update it, and I ultimately became one of the first doctor-writers on television with ER, which set that trend of having doctors on the show to bring verisimilitude.

SPhD: From the series launch in 1994 through 2000, you wrote 19 episodes and created the medical storylines for 150 episodes. This work ran parallel to your own medical education as a medical student, and subsequently an intern and resident. How did the two go hand in hand?

NB: I started writing on ER when I was still a fourth-year medical student, going back and forth to finish up at Harvard Medical School and then my internship at Children’s Hospital Los Angeles, over six years. And I was very passionate about bringing public health messages to the work that I was doing because I saw the impact that television can have on the audience, particularly the large numbers of people that were watching ER back then.

I was Noah Wyle’s character Dr. Carter. He was a third-year [medical student], I was a fourth-year. So I was a little bit ahead of him, and I was able to capture what it was like to be the low person on the totem pole and to reflect his struggles through many of the things my friends and I had gone through or were going through. Some of my favorite episodes we did were really drawn on things that actually happened. A friend of mine was sequestered away from the operating table but the surgeons were playing a game of world capitals. And she knew the capital of Zaire, when no one else did, so she was allowed to join the operating table [because of that]. So [we used] that same circumstance for Dr. Carter in an episode. Like you wouldn’t know those things, you had to live through them, and that was the freshness that ER brought. It wasn’t what you think doctors do or how they act but truly what goes on in real life, and a lot of that came from my experience.

SPhD: Do you feel like the storylines that you were creating for the show were germane both to things happening socially as well as reflective of the experience of a young doctor in that time period?

NB: Absolutely. We talked to opinion leaders, we had story nights with doctors, residents and nurses. I would talk to people like Donna Shalala, who was the head of the Department of Health and Human Services. I asked the then-head of the National Institutes of Health Harold Varmus, a Nobel Prize winner, “What topics should we do?” And he said “Teen alcohol abuse.” So that is when we had Emile Hirsch do a two-episode arc directly because of that advice. Donna Shalala suggested doing a story about elderly patients sharing prescriptions because they couldn’t afford them and the terrible outcomes that were happening. So we were really able to draw on opinion leaders and also what people were dealing with [at that time] in society: PPOs, all the new things that were happening with medical care in the country, and then on an individual basis, we were struggling with new genetics, new tests, we were the first show to have a lead character who was HIV-positive, and the triple cocktail therapy didn’t even come out until 1996. So we were able to be path-breaking in terms of digging into social issues that had medical relevance. We had seen that on other shows, but not to the extent that ER delved in.

SPhD: One of the legacies of a show like ER is how ahead of its time it was with many prescient storylines and issues that it tackled that are relevant to this very day. Are there any that you look back on that stand out to you in that regard as favorites?

NB: I really like the storyline we did with Julianna Margulies’s character [Nurse Carol Hathaway] when she opened up a clinic in the ER to deal with health issues that just weren’t being addressed, like cervical cancer in Southeast Asian populations and dealing with gaps in care that existed, particularly for poor people in this country, and they still do. [Relying on] emergency rooms is not the best way, efficiently, economically or really humanely. It’s great to have good ERs, but that’s not where good preventative health care starts. [I also like] the ethical dilemmas that we raised in an episode I wrote called “Who’s Happy Now?” involving George Clooney’s character [Dr. Doug Ross] treating a minor child who had advanced cystic fibrosis and wanted to be allowed to die. That issue has come up over and over again and there’s a very different view now about letting young people decide their own fate if they have the cognitive ability, as opposed to doing whatever their parents want done.

SPhD: You’ve had an appointment at UCLA since 2013 at the Fielding School of Public Health as one of the co-founders of the Global Media Center for Social Impact, with extremely lofty ambitions at the intersection of entertainment, social outreach, technology and public health. Tell me a bit about that partnership and how you decided on an academic appointment at this time in your life.

NB: Well, I’m still doing TV. I just finished a three-year stint running the CBS series Under the Dome, which was a small-scale parable about global climate change. While I was doing that, I took this adjunct professorship at UCLA because I felt that there’s a lot we don’t know about how people take stories, learn them, use them, and I wanted to understand that more. I wanted to have a base to do more work in this area of understanding how storytelling can promote public health, both domestically and globally. Our mission at the Global Media Center for Social Impact (GMI) is to draw on both traditional storytelling modes like film, documentaries, music, and innovative or ‘new’ or transmedia approaches like Facebook, Twitter, Instagram, graphic novels and even cell phones to promote and tell stories that engage and inspire people and that can make a difference in their lives.

SPhD: One of the first major initiatives is a very important book “Soda Politics” by the public health food expert Dr. Marion Nestle. You were actually partially responsible for convincing her to write this book. Why this topic and why is it so critical right now?

NB: I went to Marion Nestle because I was convinced after having read a number of studies, particularly those by Kelly Brownell (who is now Dean of Public Policy at Duke University), that sugar-sweetened sodas are the number one contributor to childhood obesity. Just [recently], in the New York Times, they chronicled a new study that showed that obesity is still on the rise. That entails horrible costs, both emotionally and physically for individuals across this country. It’s very expensive to treat Type II diabetes, and it has terrible consequences – retinal blindness, kidney failure and heart disease. So, I was very concerned about this issue, seeing large numbers of kids coming into Children’s Hospital with Type II diabetes when I was a resident, which we had never seen before. I told Marion Nestle about my concerns because I know she’s always been an advocate for reducing the intake and consumption of soda, so I got [her] research funds from the Robert Wood Johnson Foundation. What’s really interesting is the data on soda consumption really aren’t readily available and you have to pay for it. The USDA used to provide it, but advocates for the soda industry pushed to not make that data easily available. I [also] helped design an e-book, with over 50 actionable steps that you can take to take soda out of the home, schools and community.

SPhD: How has social media engagement via our phones and computers, directly alongside television watching, changed the metric for viewing popularity, content development and key outreach issues that you’re tackling with your ActionLab initiative?

NB: ActionLab does what we weren’t [previously] able to do, because we have the web platforms now to direct people in multi-directional ways. When I first started on ER in 1994, television and media were uni-directional endeavors. We provided the content, and the viewer consumed it. Now, with Twitter [as an example], we’ve moved from a uni-directional to a multi-directional approach. People are responding, we are responding back to them as the content creators, they’re giving us ideas, they’re telling us what they like, what they don’t like, what works, what doesn’t. And it’s reshaping the content, so it’s this very dynamic process now that didn’t exist in the past. We were really sheltered from public opinion. Now, public opinion can drive what we do and we have to be very careful to put on some filters, because we can’t listen to every single thing that is said, of course. But we do look at what people are saying and we do connect with them in ways they never had access to us before.

This multi-directional approach is not just actors and writers and directors discussing their work on social media, but it’s using all of the tools of the internet to build a new way of storytelling. Now, anyone can do their own shows and it’s very inexpensive. There are all kinds of YouTube shows on now that are watched by many people. It’s a kind of Wild West, where anything goes and I think that’s very exciting. It’s changed the whole world of how we consume media. I [wrote] an episode of ER 20 years ago with George Clooney, where he saved a young boy in a water tunnel, that was watched by 48 million people at once. One out of six Americans. That will never happen again. So, we had a different kind of impact. But now, the media landscape is fractured, and we don’t have anywhere near that kind of audience, and we never will again. It’s a much more democratic and open world than it used to be, and I don’t even think we know what the repercussions of that will be.

SPhD: If you had a wish list, what are some other issues or global obstacles that you’d love to see the entertainment world (and media) tackle more than they do?

NB: In terms of specifics, we really need to talk about civic engagement, and we need to tell stories about how [it] can change the world, not only in micro-ways, say like Habitat For Humanity or programs that make us feel better when we do something to help others, but in a macro policy-driven way, like asking how we are going to provide compulsory secondary education around the world, particularly for girls. How do we instate that? How do we take on child marriage and encourage countries, maybe even through economic boycotts, to raise the age of child marriage, a problem that we know places girls in terrible situations, often with no chance of ever making a good living, much less getting out of poverty. So, we need to think both macroly and microly in terms of our storytelling. We need to think about how to use the internet and crowdsourcing for public policy and social change. How can we amalgamate individuals to support [these issues]? We certainly have the tools now, with Facebook, Twitter and Instagram, and our friends and social networks, to spread the word – and a very good way to spread the word is through short stories.

SPhD: You’ve enjoyed a storied career, and achieved the pinnacle of success in two very competitive and difficult industries. What drives Dr. Neal Baer now, at this stage of your life?

NB: Well, I keep thinking about new and innovative ways to use transmedia. How do I use graphic novels in Africa to tell the story of HIV and prevention? How do we use cell phones to tell very short stories that can motivate people to go and get tested? Innovative financing to pay for very expensive drugs around the world? So, I’m very much interested in how storytelling gets the word out, because stories don’t just change minds, they change hearts. Stories tickle our emotions in ways that I think we don’t fully understand yet. And I really want to learn more about that. I want to know about what I call the “epigenetics of storytelling.” I’m writing a book about that, looking into research that [uncovers] how stories actually change our brain and how do we use that knowledge to tell better stories.

Neal Baer, MD is a television writer and producer behind hit shows China Beach, ER, Law & Order: SVU, Under The Dome, and others. He is a graduate of Harvard Medical School and completed a pediatrics internship at Children’s Hospital Los Angeles. A former co-chair of USC’s Norman Lear Center Hollywood, Health and Society, Dr. Baer is the founder of the Global Media Center for Social Impact at the Fielding School of Public Health at UCLA.

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

Space exploration is enjoying its greatest popularity revival since the Cold War, both in entertainment and in the realm of human imagination. Thanks in large part to blockbusters like Gravity, The Martian and Interstellar, not to mention private-sector innovation from companies like SpaceX, fascination with space colonization has never been more intense. Despite the brimming enthusiasm, there hasn’t been a film or TV series that has tackled the subject matter in a nuanced way. Until now. The Expanse, ambitiously and faithfully adapted by Syfy from the best-selling sci-fi book series, is the best space epic series since Battlestar Galactica. It embraces similarly complex, grandiose and ethically woven storylines of human survival and morality amidst inevitable technological advancement. Below, a full ScriptPhD review and in-depth podcast with The Expanse showrunner Naren Shankar.

Two hundred years in the future, humans have successfully colonized space, but not without discord. Earth, overpopulated and severely crunched for resources, has expanded to the asteroid belt and to a powerful, wealthy and now-autonomous Mars. Though the colonies of the asteroid belt are controlled by Earth (largely to pillage materials and water), their denizens are second-class citizens, exploited by wealthy corporations for deadly labor. Inter-colony friction, class warfare, resource allocation and uprising frame the backdrop for a political standoff between Mars and Earth that could destroy humanity.

Deeper questions of righteous terrorism, political conspiracy and human rights are embodied in a triumvirate of smart, interweaving plots that will eventually coalesce to unravel the fundamental mystery. Josephus Miller (Thomas Jane) is a great detective, but a lowly Belter and miserable alcoholic, mostly paid to settle minor Belt security and corporate matters. But when he’s hired to botch an investigation into the disappearance of a wealthy Earth magnate’s family, Miller starts to uncover dangerous connections between political unrest and the missing heiress. Jim Holden (Steven Strait) is a reluctant hero – a “Belter” ship captain thrown into a tragic quest for justice – who unwittingly leads his mates directly into the conflict between Mars and Earth and, as he delves deeper, unravels a potentially calamitous galactic threat. Walking this tightrope is Chrisjen Avasarala (Shohreh Aghdashloo), the Deputy Undersecretary of the United Nations, who must weigh the moral quandaries of peacekeeping against a steely determination to avoid war at all costs.

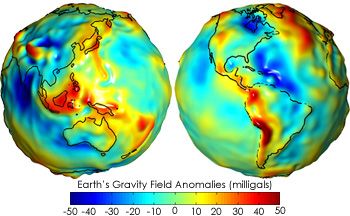

Colonization is a very trendy topic right now in space and astrophysics circles, particularly the colonization of Mars, where the discovery of liquid water raises the possibility of favorable conditions for the evolution and sustainability of life. Could it ever actually happen? There would certainly be considerable engineering and habitability obstacles.

For now, modest manned exploration of Mars and Europa is a tentative first step for NASA.

The Expanse assumes all these challenges have been overcome and these explorations completed, and picks up at a time when humanity’s biggest problem isn’t conquering space – it’s conquering each other. The show is sleek and very technologically adept, in direct visual contrast to the more dilapidated environment of Battlestar Galactica. Fans of geek-chic technology can ogle complex docking stations as ships move around the belt to and from Earth and Mars, see-through tablets, pills that induce omniscience during interrogations and ubiquitous voice-controlled artificial intelligence. However, though a new way of life has been established, remnants of our current quotidian existence and human essence are still instantly recognizable. This isn’t the techno-invasive dystopia of Blade Runner or Minority Report.

As with Battlestar Galactica (a show The Expanse will invariably be compared to), there is a crisp, smart overarching commentary on human existentialism under tense circumstances: survival and life in space; adapting to the changing gravitational forces and physical conditions of travel between planets and the asteroid belt colonies; and, most importantly, navigating the incendiary dynamics of a species on the brink of all-out galactic warfare. As show runner Naren Shankar mentions in our podcast below, all great sci-fi is historically rooted in allegory – the exploration of disruptive technological innovation (and the fear thereof) as a symbol of combating inequality and/or political injustice. At a time of great social upheaval in our world, a fight for dwindling global resources and against proliferating environmental devastation, many of the themes explored in The Expanse books and series are eerily salient. Perhaps they also act as a reminder that even if a technological revolution facilitates an eventual expansion into outer space, our tapestry of inclinations (good and bad) is sure to follow.

Naren Shankar, the executive producer and show runner of The Expanse, helped develop the adaptation of the sci-fi book series, drawing on decades of experience merging the creative compasses of science and entertainment. A PhD-educated physicist and engineer, Shankar was a writer/producer on Star Trek: The Next Generation, Almost Human and Grimm, as well as a co-showrunner of the groundbreaking forensics procedural CSI. Dr. Shankar joined the ScriptPhD.com podcast for an exclusive conversation about his transition from PhD scientist to working Hollywood writer, the lasting iconic impact of Star Trek and CSI, and how The Expanse evokes the best allegory and elements of the sci-fi genre to tell an existential narrative. Listen below:

*****************


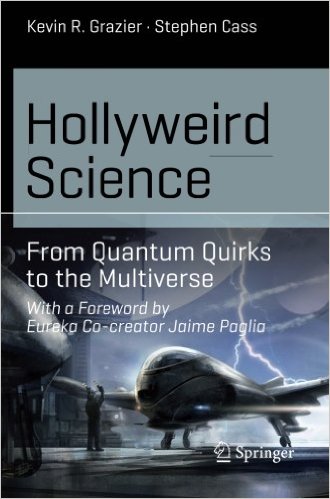

Dr. Kevin Grazier has made a career of studying planetary formation and, over the last few years, helping Hollywood writers integrate physics smartly into storylines for popular TV shows like Battlestar Galactica, Eureka and Defiance, and the blockbuster film Gravity. His latest book, Hollyweird Science: From Quantum Quirks to the Multiverse, traverses delightfully through the science-entertainment duality: it first breaks down the portrayal of science in movies and television, grounding the audience in screenplay lexicon, then elucidates a panoply of physics and astronomy principles through the lens of storylines, superpowers and sci-fi magic. Co-written with notable science journalist Stephen Cass, Hollyweird Science is accessible to the layperson sci-fi fan wishing to learn more about science, the professional scientist wanting to apply their knowledge to higher-order examples from TV and film, and the Hollywood writers and producers of future science-based material. From case studies to in-depth interviews to breaking down the Universe and its phenomena one superhero and far-away galaxy at a time, this first volume of an eventual trilogy is an essential foundation for understanding how science is integrated into a story and for ensuring that future TV shows and movies do so more accurately than ever before. Full ScriptPhD review and podcast with author and science advisor Dr. Grazier below.

Most people who watch movies and TV shows never went to film school. They are not familiar with the intricacies of three-act structure, tropes, conceits and MacGuffins that form the skeletal framework of a standard storytelling toolkit. Yet no genre is more rooted in and dependent on setup, and on the audience buying into a payoff, than sci-fi and films conceptualized in scientific logic. Many, if not most, critiques of science in entertainment don’t fully acknowledge that integrating abstruse science and technology within the complex constraints of time, length, character development and screenplay format is incredibly demanding. Hollyweird Science does point out some egregious examples of “information pollution” and the “Hollywood Curriculum Cycle” – the perpetuation of bad, if not fictitious, science. But after grounding the reader in a primer on the fundamental building blocks of movie-making and TV structure, the book not only takes a more positive, forgiving tone in breaking down the history of the sci-fi canon (some of which predicted many of the technological gadgets we enjoy today), but even celebrates just how much and how often Hollywood gets the science right.

Conversely, the vast majority of Hollywood writers, producers and directors don’t regularly come across PhD scientists in real life, and have to form impressions of doctors, scientists and engineers based on… other portrayals in entertainment. Scientists, after all, represent only 0.2 percent of the U.S. population as a whole, and fewer than 700,000 jobs belong to doctors and surgeons. And while these professions are amply represented on screen in number, that hasn’t necessarily been the case in accuracy. Screenwriters’ insular reliance on their own biases has led to the stereotyping and pigeonholing of scientists into a series of familiar archetypes (nerds, aloof omniscient sidekicks), as Grazier and Cass demonstrate in a thorough, labyrinthine archive of TV and movie scientists. But as scientists have become more involved in advising productions, and more prominent and visible in today’s innovation-driven society, their on-screen counterparts have likewise become a more accurate reflection of these demographics – mainstream hits like The Big Bang Theory, CSI (and its many procedural spinoffs), Breaking Bad and films like Gravity, The Martian, Interstellar and The Imitation Game are just a recent sampling.

If you’re going to teach a diverse group of readers about the principles of physics, astronomy, quantum mechanics and energy forms, it’s best to start with the basics. Even if you’ve never picked up a physics textbook, Hollyweird Science provides a fundamental overview of matter, mass, elements, energy, planet and star formation, time, radiation and the quantum mechanics that govern the universe. More important than what these principles are, Grazier explains what they are not, with running examples from iconic television series, movies and sci-fi characters. What exactly is the difference between weight, mass and force, per the opening scene of the film Gravity? How are different forms of energy classified? Are the radioactive giants of Godzilla and King Kong realistic? What exactly happens when Scotty beams someone up? Buoying the analytical content are myriad interviews with writers and producers, who speak candidly about working with scientists, incorporating science into storytelling and where conflicts arose in the creative process.

People who want to delve into more complex science can do so through “science boxes” embedded throughout the book – sophisticated mathematical and physics analyses of entertainment staples, both trivial and significant. Among my favorites: why Alice in Wonderland is a great example of allometric scaling, the thermal radiation of cinematography lighting, hypothesizing Einsteinian relativity for the Back to the Future DeLorean, and just how hot The Human Torch of the Fantastic Four really is. (Pretty dang hot.)

The next time readers see an asteroid making a deep impact, characters zipping through interplanetary travel, or an evil plot to harness a new form of destructive energy, they’ll have a scientific foundation to ask simple but important questions. Is this reasonable science, rooted in the principles of physics? Even if embellished for the sake of advancing a story, could it theoretically happen? And for Hollywood writers, how can science advance a plot or help a character solve their conundrum? In our podcast below, Dr. Grazier explains why physics and astronomy were such an important bedrock of the first book – and of science-based entertainment – and previews what other areas of science, technology and medicine future sequels will analyze.

In the long run, Hollyweird Science will serve as far more than a groundbreaking book that seamlessly blends fun pop-culture breakdown with serious scientific teaching. It’s part of a conceptual bridge towards an inevitable intellectual alignment between Hollywood, science and technology. Over the last 10-15 years, the portrayal of scientists and the ubiquity of science content on screen have increased dramatically – so much so that what was a fringe niche even 20 years ago is now mainstream and wields powerful influence over public perception of, and support for, science. Science and technology will only grow in importance to society, not just in the form of personal gadgets, but as problem-solving tools for global issues like climate change, water access and improving health. Moreover, at a time when Americans’ grasp of basic science is flimsy, at best, any material that can repurpose the universal love of movies and television to impart knowledge and generate excitement is significant. We are on the cusp of forging a permanent link between Hollywood, science and pop culture. The Hollyweird series is the perfect start.

In an exclusive podcast conversation with ScriptPhD.com, Dr. Grazier discussed the overarching themes and concepts that influenced both “Hollyweird Science” and his ongoing consulting in the entertainment industry. These include:

• How the current Golden Age of sci-fi arose and why there’s more science and technology content in entertainment than ever

• Why scientists and screenwriters are remarkably similar

• Why physics and astronomy are the building blocks of the majority of science fiction

• How the “Hollyweird Science” trilogy can be used as a didactic tool for scientists and entertainment figures

• His favorite moments working both in science and entertainment

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire our consulting company for creative content development. Follow us on Twitter and Facebook. Subscribe to our podcast on SoundCloud or iTunes.

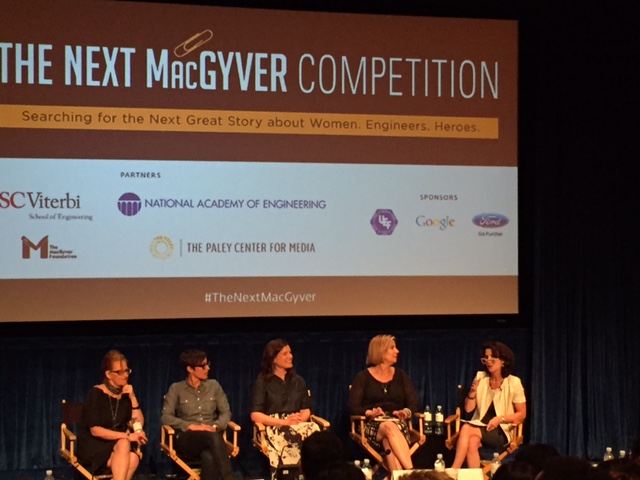

Engineering has an unfortunate image problem. With a seemingly endless array of socioeconomic, technological and large-scale problems to address, and with STEM fields set to comprise the most lucrative 21st-century careers, studying engineering should be a no-brainer. Unfortunately, attracting a wide array of students, or even getting people to appreciate engineers as cool, remains difficult, most noticeably among women. When Google Research found that the #2 reason girls avoid studying STEM fields is perception and stereotypes on screen, they decided to work with Hollywood to change that. Recently, they partnered with the National Academy of Sciences and USC’s prestigious Viterbi School of Engineering to proactively seek out ideas for a television program that would showcase a female engineering hero and inspire a new generation of female engineers. The project, entitled “The Next MacGyver,” came to fruition last week in Los Angeles at a star-studded event. ScriptPhD.com was extremely fortunate to receive an invite and to interact with the leaders, scientists and Hollywood representatives who collaborated to make it all possible. Read our full coverage below.

“We are in the most exciting era of engineering,” proclaims Yannis C. Yortsos, the Dean of USC’s Engineering School. “I look at engineering technology as leveraging phenomena for useful purposes.” These purposes have recently been unified as the 14 Grand Challenges of Engineering, everything from securing cyberspace to reverse-engineering the brain to solving our environmental catastrophes to ensuring global access to food and water. These are monumental problems, and solving them will require a full-scale workforce. It’s no coincidence that STEM jobs are set to grow by 17% by 2024, more than any other sector. Recognizing this opportunity, the US Department of Education (in conjunction with the National Science and Technology Council) launched a five-year strategic plan to prioritize STEM education and outreach in all communities.

Despite this golden age, in which the possibilities for STEM innovation seem as vast as the challenges facing our world, there is a disconnect in tapping the full array of talent for the next generation of engineers. There is a noticeable paucity of women and minority students studying STEM fields, with women earning just 18-20% of all STEM bachelor’s degrees, even though more students are earning STEM degrees than ever before. Madeline Di Nonno, CEO of the Geena Davis Institute on Gender in Media and a judge at the Next MacGyver competition, attributes much of this disengagement to a consistent absence of female STEM characters in television and film. “It’s a 15:1 ratio of male to female characters for just STEM alone. And most of the science related positions are in life sciences. So we’re not showing females in computer science or mathematics, which is where all the jobs are going to be.” Media portrayals of women (and by proxy minorities) in science remain shallow, biased and appearance-focused (as profiled in depth by Scientific American). Why does this matter? There is a direct correlation between positive media portrayal and STEM career participation.

It has been 30 years since the debut of television’s MacGyver, an action-adventure series about clever agent Angus MacGyver, who works to right the wrongs of the world through innovation. Rather than using a conventional weapon, MacGyver thwarts enemies with his vast array of scientific knowledge, sometimes armed with no more than a paper clip, a box of matches and a roll of duct tape. Creator Lee Zlotoff notes that in those 30 years, the show has run continuously around the world, perhaps fueled in part by a love of MacGyver’s endless ingenuity. Zlotoff pointed out the uncanny parallels between MacGyver’s thought process and the scientific method: “You look at what you have and you figure out, how do I turn what I have into what I need?” Three decades later, in the spirit of the show, the USC Viterbi School of Engineering partnered with the National Academy of Sciences and the MacGyver Foundation to search for a new MacGyver: a television show centered around a female engineer protagonist who must solve problems, create new opportunities and, most importantly, save the day. The initiative began back in 2008 at the National Academy of Sciences, aiming to rebrand engineering entirely, away from geeks and techno-gadget builders and towards the much bigger impact that engineering technology has on the world – solving big, global societal problems. USC’s Yortsos says that this big picture resonates distinctly with female students who would otherwise be reluctant to choose engineering as a career. Out of thousands of submitted TV show ideas, twelve were chosen as finalists, each of whom was given five minutes to pitch to a distinguished panel of judges composed of writers, producers, CEOs and successful showrunners. Five winners will have the opportunity to pair with an established Hollywood mentor to write a pilot and showcase it for potential television production.

If The Next MacGyver feels far-reaching in scope, it’s because it has aspirations that stretch beyond simply getting a clever TV show on air. No less than the White House lent its support to the initiative, with an encouraging video from US Chief Technology Officer Megan Smith reiterating the importance of STEM to the 21st-century workforce. As Al Romig, the Executive Officer of the National Academy of Engineering, noted, the great 1950s and 1960s era of engineering growth was fueled by intense competition with the USSR. Today, by contrast, we need to be unified and driven by the 14 Grand Challenges of Engineering and their offshoots. Part of that will include diversifying the engineering workforce and attracting new talent with fresh ideas. As I noted in a 2013 TEDx talk, television and film carry tremendous power and influence to fuel a passion for science. And the desire to marry engineering and television extends as far back as 1992, when Lockheed Martin’s Norm Augustine proposed a high-profile show named LA Engineer. Since then, he has remained a passionate advocate for elevating engineers to the highest ranks of decision-making, governance and celebrity status. Andrew Viterbi, namesake of USC’s engineering school, echoed this imperative to elevate engineering to “celebrity status” in a 2012 Forbes editorial. “To me, the stakes seem sufficiently high,” said Adam Smith, Senior Manager of Communications and Marketing at USC’s Viterbi School of Engineering. “If you believe that we have real challenges in this country, whether it is cybersecurity, the drought here in California, making cheaper, more efficient solar energy, whatever it may be, if you believe that we can get by with half the talent in this country, that is great. But I believe, and the School believes, that we need a full creative potential to be tackling these big problems.”

So how does Hollywood feel about this movement and the realistic goal of increasing its STEM content? “From Script To Screen,” a panel discussion featuring leaders in the entertainment industry, offered equal parts cautionary advice and hopeful encouragement for aspiring writers and producers. Ann Merchant, the director of the Los Angeles-based Science and Entertainment Exchange, an offshoot of the National Academy of Sciences that connects filmmakers and writers with scientific expertise for accuracy, sees the biggest obstacle facing television depictions of scientists and engineers as a connectivity problem. Writers know so few scientists and engineers that they either fall back on stereotypes or eschew the content altogether. Ann Blanchard, of the Creative Artists Agency, partly concurred, noting that writers are often so right-brain focused that they naturally gravitate towards telling creative stories about creative people. But Danielle Feinberg, a computer engineer and lighting director for Oscar-winning Pixar animated films, sees the perceived gulf between technical and creative people as an illusion: once people discover that they can combine these careers with what they are naturally passionate about to solve real problems, the path becomes both possible and exciting. Meanwhile, ABC Family’s Marci Cooperstein, who oversaw and developed the crime drama Stitchers, centered around engineers and neuroscientists, remains optimistic about keeping the doors open to these types of stories, because the demand for new and exciting content is very real. With 42 scripted networks alone, and many more independent channels, she feels we should celebrate the diversity of science and medical programming that already exists, and build on it. Put a room of writers and engineers together, and they will find a way to tell cool stories.

At the end of the day, Hollywood is in the business of entertaining, telling stories that reflect the contemporary zeitgeist and meeting demand for the subjects that people are most passionate about. The challenge isn’t wanting science content, but finding and showcasing it. The panel’s universal advice was to tell exciting stories centered around science characters who feel fresh, flawed and interesting. Be innovative, and think about why people will care about a character and storyline enough to come back each week for more. And build in a central engine that will drive the show over several seasons. “Story does trump science,” Merchant pointed out. “But science does drive story.”

The twelve pitches represented a diverse array of procedural, adventure and sci-fi plots, from writers with backgrounds in both traditional screenwriting and scientific training. The five winners, as chosen by the judges and mentors, were as follows:

Miranda Sajdak — Riveting

Sajdak is an accomplished film and TV writer/producer and founder of the screenwriting services company ScriptChix. She proposed a World War II-era adventure drama centered around engineer Junie Duncan, who joins the military engineer corps after her fiancé is tragically killed on the front line. Her ingenuity in tackling engineering and technology development ultimately helps win the war.

Beth Keser, PhD — Rule 702

Dr. Keser, unique among the winners as the only pure scientist, is a global leader in the semiconductor industry and leads a technology initiative at San Diego’s Qualcomm. She proposed a crime procedural centered around Mimi, a brilliant scientist with dual PhDs who forgoes corporate life to become a traveling expert witness for the most complex criminal cases in the world, each of which she must investigate and unravel.

Jayde Lovell — SECs (Science And Engineering Clubs)

Jayde, a rising STEM communication star, launched the YouTube pop science network “Did Someone Say Science?” Her show proposal is a fun fish-out-of-water drama about 15-year-old Emily, a pretty, popular and privileged high school student. After accidentally burning down her high school gym, she avoids expulsion only by joining the dreaded, geeky SECs club, and in turn helps them win an engineering competition while learning to be cool.

Craig Motlong — Q Branch

Craig is a USC-trained MFA screenwriter and now a creative director at a Seattle advertising agency. His spy action thriller centers around mad scientist Skyler Towne, an engineer leading a corps of researchers at the fringes of the CIA’s “Q Branch,” where they develop and test the gadgets that help agents stay three steps ahead of the biggest criminals in the world.

Shanee Edwards — Ada and the Machine

Shanee, an award-winning screenwriter, is the film reviewer at SheKnows.com and the host/producer of the web series She Blinded Me With Science. As a fan of historical scientific figures, Shanee proposed a fictionalized series about real-life 19th-century mathematician Ada Lovelace, famous for her work on Charles Babbage’s early mechanical general-purpose computer, the Analytical Engine. In this drama, Ada works with Babbage to help Scotland Yard fight opponents of the Industrial Revolution, exploring familiar themes of technology ethics relevant to our lives today.

Craig Motlong, one of five ultimate winners, and one of the few finalists with absolutely no science background, spent several months researching his concept with engineers and CIA experts to see how theoretical technology might be incorporated and utilized in a modern criminal lab. He told me he was equal parts grateful and overwhelmed. “It’s an amazing group of pitches, and seeing everyone pitch their ideas today made me fall in love with each one of them a little bit, so I know it’s gotta be hard to choose from them.”

Whether inspired by social change, pragmatic inquisitiveness or pure scientific ambition, this seminal event was ultimately both a cornerstone for strengthening a growing science/entertainment alliance and a deeply personal quest for all involved. “I don’t know if I was as wrapped up in these issues until I had kids,” said USC’s Smith. “I’ve got two little girls, and I tried thinking about what kind of shows [depicting female science protagonists] I should have them watch. There’s not a lot that I feel really good sharing with them, once you start scanning through the channels.” Motlong, the only male winner, has been profoundly influenced by being surrounded by strong women, including a beloved USC screenwriting instructor. “My grandmother worked during the Depression and had to quit because her husband got a job. My mom had like a couple of options available to her in terms of career, my wife wanted to be a genetic engineer when she was little and can’t remember why she stopped,” he reflected. “So I feel like we are losing generations of talent here, and I’m on the side of the angels, I hope.” NAS’s Ann Merchant sees an overarching institutional vision for achieving the competition’s STEM goals and influencing the next generation of scientists. “It’s why the National Academy of Sciences and Engineering as an institution has a program [The Science and Entertainment Exchange] based out of Los Angeles, because it is much more than this [single competition].”

Indeed, The Next MacGyver event, while glitzy and glamorous in a way befitting the entertainment industry, seemed to succeed wildly beyond its sponsors’ collective expectations. It was ambitious, sweeping, the first of its kind, and it required the collaboration of many academic, industry and entertainment partners. And it might have the power to influence and transform an entire pool of STEM participants, the way ER and CSI renewed interest in emergency medicine and forensic science, respectively. If not this group of pitched shows, then the next. If not this group of writers, then the ones who come after them. Searching for a new MacGyver transcends finding an engineering hero for a new age with new, complex problems. It’s about being a catalyst for meaningful academic change and creative inspiration. Or, at the very least, opening up Hollywood’s eyes and time slots. Zlotoff, whose MacGyver Foundation supported the event and continually seeks to promote innovation and peaceful change through educational opportunities, recognized this in his powerful closing remarks. “The important thing about this competition is that we had this competition. The bell got rung. Women need to be a part of the solution to fixing the problems on this planet. [By recognizing that], we’ve succeeded. We’ve already won.”

The Next MacGyver event was held at the Paley Center for Media in Beverly Hills, CA, on July 28, 2015. Follow all of the competition information on their site. Watch a full recap of the event on the Paley Center YouTube Channel.

*****************


From a sci-fi and entertainment perspective, 2015 may well be nicknamed “The Year of The Robot.” Several cinematic releases have already explored various angles of futuristic artificial intelligence (from the forgettable Chappie to the mainstream blockbuster Marvel’s Avengers: Age of Ultron to the intelligent sleeper indie hit Ex Machina), with several more on the way later this year. Two television series premiering this summer, the limited series Humans on AMC and Mr. Robot on USA, add thoughtful, layered (and very entertaining) discussions of the ethics and socioeconomic impact of the technology shaping the age we live in. While Humans revolves around hyper-evolved robot companions, and Mr. Robot around a shadowy cyberhacking organization, both are enthusiastic Editor’s Selection recommendations from ScriptPhD. Reviews and an exclusive interview with Humans creators/writers Jonathan Brackley and Sam Vincent below.

Never in human history have technology and its potential permeated our daily lives more deeply, or mattered more, than at the current moment. Indeed, technology might even be changing the way our brains function! With entertainment often acting as a reflection of socially pertinent issues and zeitgeist motifs, it’s only natural to examine the degree to which robots (or any artificial technology) might subsume human life. Will they take over our jobs? Become smarter than us? Nefariously affect human society? These fears about the emotional lines between humans and their technology are at the heart of AMC’s new limited series Humans. It is set in the not-too-distant future, where the must-have tech accessory is a ‘synth,’ a highly malleable, impeccably programmed robotic servant capable of providing any service, at the individual, family or macro-corporate level. It’s an idyllic ambition, fully realized. Busy, dysfunctional parents Joe and Laura obtain family android Anita to take care of basic housework and child rearing to free up time. Beat cop Pete’s rehabilitation android is indispensable to his paralyzed wife. And even though he doesn’t want a new synth, scientist George Millikan is saddled by the Health Service with a ‘care unit,’ Vera, to monitor his recovery from a stroke. Synths can pick fruit, clean up trash, work mindlessly in factories and sweatshops, make meals, even provide service in brothels – an endless range of servile labor that we are uncomfortable or unwilling to do ourselves.

Humans brilliantly weaves the problems of this artificial intelligence narrative into multiple intersecting storylines. Anita may be the perfect house servant to Joe, but her omniscience and omnipresence border on creepiness to his wife Laura (and, by proxy, the audience). Is Dr. Millikan (who helped craft the original synth technology) right that you can’t recycle them the way you would an old iPhone model? Or is he naive for loving his synth Odi like a son? And even if you create a Special Technologies Task Force to handle synth-related incidents, guaranteeing no harm to humans and minimal, if any, malfunctions, how can there be no nefarious downside to a piece of technology? Synths could, in theory, be obtained illegally and reprogrammed for subversive activity. If the original creator of the synths wanted to create a semblance of human life – “They were meant to feel,” he maintains – then are we culpable for their enslaved state? Should we feel relieved to see a synth break out of the brothel she’s forced to work in, or to see a mysterious group of synths that have somehow become sentient unite clandestinely to dream of a dimension where they’re free?

In reality, we are already in the midst of an age of artificial intelligence: computers. Powerful, fast and already capable of taking over our workforce and reshaping our society, they are the amorphous technological preamble to more specifically tailored robots, incurring all of the same trepidation and uncertainty. Mr. Robot, one of the smartest TV pilots in recent memory, is a cautionary tale about cyberhacking, socioeconomic vulnerability and the sheer reliance our society unknowingly places on computers. Its central themes are physically embodied in its protagonist, Elliot, a brilliant cybersecurity engineer by day and vigilante cyberhacker by night, battling schizophrenia and extreme social anxiety. To Elliot, the ubiquitous nature of computer power is simultaneously appealing and repulsive. Everything is electronic today – money, corporate transactions, even the way we communicate socially. As a hacker, he manipulates these elements with ease to get close to people and to right injustices (keeping a Dexter-style digital cemetery of his conquests). But as someone who craves human contact, he loathes the way technology has eroded human interaction and encouraged nameless, faceless corporate greed.

Elliot works for Allsafe Cybersecurity, whose biggest client is an emblem of corporate evil and economic inequality. When the client is hacked, Elliot discovers that the attack is a private digital call to arms from a mysterious underground group called fsociety, led by the enigmatic Mr. Robot (and resembling the cybervigilante group Anonymous). They’ve hatched a plan to launch a wide-scale economic cyberattack that will result in the single biggest redistribution of wealth and debt forgiveness in history, and they recruit Elliot into their organization. The question, and the intriguing premise of the series, is whether Elliot can juggle his clean-cut day job, subversive underground hacking and protecting society one cyberterrorist act at a time, or whether it will all collapse under the burden of his conscience and mental illness.

Humans offers a glimpse into the inevitable future, albeit one that may be creeping up on us faster than we want it to. Even if hyper-advanced artificial intelligence is not an imminent reality and our fears might be overblown, the impact of technology on economics and human evolution is a reality we will have to grapple with eventually, and one that must inform the bioethics of any advanced sentient computing technology we create and release into the world. Mr. Robot is a stark reminder of our present: cyberterrorism is the new normal, its global impact is immense, and (as in the case of artificial robots) our advancement of and reliance on technology is outpacing humans’ ability to control it.

ScriptPhD.com was extremely fortunate to chat directly with Humans writers Jonathan Brackley and Sam Vincent about the premise and thematic implications of their show. Here’s what they had to say:

ScriptPhD.com: Is “Humans” meant to be a cautionary tale about the dangers of complex artificial intelligence run amok or a hypothetical bioethical exploration of how such technology would permeate and affect human society?

Jonathan and Sam: Both! On one level, the synths are a metaphor for our real technology, and what it’s doing to us, as it becomes ever more human-like and user-friendly – but also more powerful and mysterious. It’s not so much hypothesising as it is extrapolating real world trends. But on a deeper story level, we play with the question – could these machines become more, and if so, what would happen? Though “run amok” has negative connotations – we’re trying to be more balanced. Who says a complex AI given free rein wouldn’t make things better?

SPhD: I found it interesting that there’s a tremendous range of emotions in how the humans relate to and feel about their “synths.” George has a familial affection for his, Laura is creeped out by and jealous of hers while her husband Joe is largely indifferent, and policeman Pete grudgingly finds his synth to be a useful rehabilitation tool for his wife after an accident. Isn’t this reflective of the range of emotions with which humans react to the technology in our lives, now and perhaps always?

J&S: There’s always a wide range of attitudes towards any new technology – some adopt enthusiastically, others are suspicious. But maybe it’s become a more emotive question as we increasingly use our technology to conduct every aspect of our existence, including our emotional lives. Our feelings are already tangled up in our tech, and we can’t see that changing any time soon.

SPhD: Like many recent works exploring Artificial Intelligence, at the root of “Humans” is a sense of fear. Which is greater – the fear of losing our flaws and imperfections (the very things that make us human) or the genuine fear that the sentient “synths” have of us and their enslavement?

J&S: Though we show that synths certainly can’t take their continued existence for granted, there’s as much love as fear in the relationships between our characters. For us, the fear of how our technology is changing us is more powerful – purely because it’s really happening, and has been for a long time. But maybe it’s not to be feared – or not all of it at least…

Catch a trailer and a closer look at the making of Humans here:

And catch the FULL first episode of Mr. Robot here:

Mr. Robot airs on USA Network with full episodes available online.

Humans premieres on June 28, 2015 on AMC Television (USA) and airs on Channel 4 (UK).

*****************


As part of an ongoing recommitment to its sci-fi genre roots, Syfy is unveiling the original scripted drama Ascension, for now a six-hour miniseries and possibly the launch of a future series. It follows a crew aboard the starship Ascension, part of a 1960s mission that sent 600 men, women and children on a planned 100-year voyage to populate a new world. Amid political unrest on board the vessel, an approaching critical juncture in the mission and the first-ever murder on the craft, the audience soon learns that there is more to the mission than meets the eye. The same can be said of this multi-layered, ambitious, sophisticated miniseries. Full ScriptPhD review below.

In the late 1950s and early 1960s, largely fueled by the height of Cold War tensions with the Soviet Union and fears of mutual nuclear destruction, the United States government, in conjunction with NASA, launched a project that would have sent 150 people into various corners of space, from the Moon to Mars and eventually Saturn. Code-named Orion, the project officially launched in 1958 at General Atomics in San Diego under the leadership of nuclear researcher Frederic de Hoffmann, Los Alamos weapons specialist Theodore Taylor and theoretical physicist Freeman Dyson. Driven in large part by Dyson, Orion aimed to build a spacecraft propelled by atomic bombs, detonated in a series of well-timed explosions that would push the rocket further and further into space (nuclear pulse propulsion). The Partial Test Ban Treaty of 1963 ended the grandiose project, which remains classified to this day.

Ascension is the seamless fictional extension born of asking “what if” questions about the erstwhile Project Orion. What if it never ended? What if it were still ongoing? What would be the psychological ramifications of entire generations of people born, raised and living on a closed vessel? Is human habitation of other planets an uncertainty or an inevitability? And so Project Orion continued on as Project Ascension, under the direction of Abraham Enzmann. A crew of 600 was sent off into space, frozen in time, not knowing the fate of humanity and, as far as they knew, all that would be left of mankind.

Ascension carries on in the vein of stylish series such as Caprica, Helix and Defiance, with sleek sci-fi gadgetry and a spaceship capable of mimicking an entire world (including a beach!) for 100 years. This is no dilapidated, aging Battlestar Galactica. However, because time is frozen in the 1960s, all of the technology, clothing and culture on board reflect that era: think Mad Men in space. Nostalgia reigns, with references to the Space Race via speeches from President Kennedy, along with film and television cornerstones of the era.

Fifty-one years into the mission, on the evening of the annual launch party, a kind of Ascension Independence Day, the unthinkable happens: the first-ever murder on board the ship. Captain William Denninger puts first officer Aaron Gault in charge of the investigation. Soon, the motives for the murder become convoluted amid internal politics and the looming “Insurrection,” a point of no return after which communication with Earth is no longer possible.

This year’s space epic Interstellar explored the science of traveling 10 billion light years away from Earth, ambitiously but not without factual fault. And to be sure, Ascension will address the challenges and physics of nuclear propulsion to the far reaches of space, starting with a radiation storm midway through the first episode. But rather than bogging itself down in the astrophysical minutiae of space travel, Ascension smartly focuses on the human drama and existential questions such a voyage would raise, precisely what made Battlestar Galactica such compelling sci-fi television. Would such a journey carry internal psychological ramifications? All residents of the ship seem to go through an adolescent period termed “The Crisis,” in which they come to grips with the fact that they have no future of their own, only a predetermined fate. Furthermore, the murder victim’s young sister appears to be a “seer” with psychic insight into the nefarious inner workings of the ship.

Would there be class division and political turmoil aboard such a confined community? There is a decidedly troublesome rift between the ranking officers of the upper quarters and the “Below Deckers”: butchers, steelworkers and other blue-collar craftsmen who appear to be on the verge of revolt. Complicating matters are the Captain’s wife, Viondra Denninger (whom fans will recognize as Cylon Number Six from BSG), a cunning, manipulative power broker, and the man seeking to wrest control of the ship from her husband. Back on Earth, we meet Harris Enzmann, the son of the dying Project Ascension founder. Seemingly a low-level government engineer with no interest in preserving his father’s legacy, he plays a role in Project Ascension that is murky yet significant.

Project Ascension is indeed an experiment critical for human survival, just not the one anyone on board thinks it is. Amid a reawakened collective imagination about space exploration, including 2015’s IMAX Mars mission film Journey to Space, this is one sci-fi mission worth taking.


Ascension is a three-day mini-series event on SyFy Channel, beginning Monday, December 15.

~*ScriptPhD*~

*****************

ScriptPhD.com covers science and technology in entertainment, media and advertising. Hire us for creative content development.

Subscribe to free email notifications of new posts on our home page.


The biggest threat to mankind may not end up being an enormous weapon; in fact, it might be too small to see without a microscope. Given global interconnectedness and rapid travel, the age of genomic manipulation and ever-emerging infectious diseases, our biggest fears should be rooted in global health and bioterrorism. We got a recent taste of this with Steven Soderbergh’s academic, sterile 2011 film Contagion. Helix, a brilliant new sci-fi thriller from Battlestar Galactica creator Ronald D. Moore, isn’t overly concerned with whether the audience knows the difference between an antiviral and a retrovirus, nor does it make heavy-handed attempts at replicating laboratory experiments and epidemiology lectures. What it does do is explore infectious disease outbreaks and bioterrorism in the broader context of global health and medicine in a visceral, visually chilling way. In the world of Helix, it’s not a matter of if, just when… and what we do about it after the fact. ScriptPhD.com reviews the first three episodes under the “continue reading” cut.

The benign opening scenes of Helix depict a routine that plays out virtually every day at the Centers for Disease Control and at global health centers all over the world. Dr. Alan Farragut, leader of a CDC outbreak team, is assembling and training a group of researchers to investigate a possible viral outbreak at a remote research facility called Arctic Biosystems. Tucked away in the Arctic under extreme working conditions, and completely removed from international oversight, the self-contained facility employs 106 scientists from 35 countries. One of these scientists, Dr. Peter Farragut, is not only Alan’s brother but also appears to be Patient Zero.

It doesn’t take the newly assembled team long to discover that all is not as it seems at Arctic Biosystems. Deceit and evasiveness from the staff lead to the discovery of a frightening web of animal research, the tip of an iceberg of ‘pseudoscience’ experimentation that may have led to the viral outbreak, among other dangers. The mysterious Dr. Hiroshi Hatake, the head of Ilaria Corporation, which runs the facility, may have nefarious motivations, yet is desperately reliant on the CDC researchers to contain the situation. The involvement of US Army engineers and scientists, culminating in a shocking, devastating ending to the third episode, hints that the CDC doesn’t think the outbreak was accidental. Most frightening of all is the discovery of two separate strains of the virus, Narvic A and Narvic B: the former turns victims into a bag of Ebola-like hemorrhagic black sludge, while the latter rewires the brain to create superhuman strength, a perfect contagion machine.

With some pretty brilliant sci-fi minds orchestrating the series, including Moore, Lost alum Steven Maeda and Contact producer Lynda Obst, it’s not surprising that Helix draws on accurate and salient themes from today’s scientific environment. Spot on is the friction in communication and collaboration among the agencies depicted on the show: the CDC, bioengineers from the US Army and the fictional Arctic Biosystems research facility. In reality, identifying and curtailing emerging infectious disease outbreaks requires a network of collaboration among, chiefly, the World Health Organization, the CDC, the US Army Medical Research Institute of Infectious Diseases (USAMRIID, famously portrayed in the film Outbreak) and local medical, research and epidemiological outposts at the outbreak site(s). In addition to managing egos, agencies must quickly share proprietary data and balance global oversight (WHO) with local and federal jurisdictions, which can be a challenge even under ordinary conditions. To that extent, including a revised set of international health regulations in 2005 and the establishment of an official highly transmissible form of the virus created a hailstorm of controversy. In addition to a publishing moratorium of 60 days and censorship of key data, debate raged over the necessity of publishing the findings at all from a national security standpoint and over the benefit-to-risk value of such “dual-use” research. Similar fears of “playing God” were stoked after the creation of a fully synthetic cell by J. Craig Venter and the team behind the Genome Project.

As with Moore’s other SyFy series, Battlestar Galactica, Helix is not perfect, and will need time and patience (from both the network and the viewing audience) to strike the right chemistry and develop evenness in its storytelling. The dialogue feels forced at times, particularly among the lead characters and in the rapid-fire, high-level scientific jargon, of which there is a surprising amount. Certain scenes depicting the gruesome effects of the viruses feel too long and repetitive in the first episodes, but this should dissipate as the plot develops. But for all of its minor blemishes, Helix is one of the smartest scientific premises to hit television in recent times, and looks to deftly explore familiar sci-fi themes of bioengineering ethics and the risks of ‘playing God’ just because we have the technology to do so.

We’ve become accustomed to sci-fi terrifying us visually, as with the ‘walker’ zombies of The Walking Dead, or even psychologically, as in the recent hit movie Gravity. But Helix’s terror is drawn from the utter plausibility of the scenario it presents.


Helix will air on Friday nights at 10:00 PM ET/PT on SyFy channel.

~*ScriptPhD*~


For every friendly robot we see in science fiction, such as Star Wars’s C-3PO, there are others with a more sinister reputation, such as those in I, Robot. Indeed, most movie robots can be classified into a range of archetypes and purposes. Science boffins at Cambridge University have taken the unusual step of evaluating the risks of humanity suffering a Terminator-style meltdown at the Cambridge Project for Existential Risk.

“Robots On the Run” is currently an unlikely scenario, so don’t stockpile rations and weapons in panic just yet. But with machine intelligence continually evolving, developing and even crossing thresholds of creativity and language, what holds now might not hold in the future. Robotic technology is making huge advances, thanks in great part to the efforts of Japanese scientists and Robot Wars. For the time being, the term AI (artificial intelligence) might sound like a Hollywood invention (Steven Spielberg’s landmark film A.I. Artificial Intelligence popularized it, after all), but the science behind it is real and proliferating in capability and application. Robots can now “learn” things through circuitry that mimics the way humans pick up information. Nevertheless, some scientists believe that there are limits to the level of intelligence robots will be able to achieve. In a special ScriptPhD review, we examine the current state of artificial intelligence and the possibilities that the future holds for this technology.

Is AI a false dawn?

While artificial intelligence has certainly delivered impressive advances in some respects, it has not yet achieved the kind of groundbreaking, high-order human activity that some envisaged long ago. Replicating faculties such as thought, conversation and reasoning in robots is extraordinarily complicated. Take, for example, teaching robots to talk. AI programming has enabled robots to hold rudimentary conversations with each other, but such conversations are extremely simple, far from matching, let alone surpassing, everyday human chit-chat. There have been other advances in AI, but these tend to be narrow in scope. In essence, it is possible to get AI machines to perform some of the tasks we humans can cope with, as witnessed by IBM’s computer “Watson” defeating humanity’s best and brightest on the quiz show Jeopardy!, but we are very far away from creating a complete robot that can manage humanity’s complex levels of multitasking.

Despite these modest advances to date, technology throughout history has often evolved in a hyperbolic pattern after a long, linear period of discovery and research. For example, as the Cambridge scientists pointed out, many people once doubted the possibility of heavier-than-air flight. Yet it has been achieved and improved upon many times over, even reaching supersonic speeds, since the Wright brothers’ first successful powered airplane flight. In last year’s sci-fi epic Prometheus, the android David is designed to assist an exploratory ship’s crew in every way. David anticipates their desires and needs, yet also exhibits the ability to reason, share emotions and display complex meta-awareness. Forward-reaching? Not possible now? Perhaps. But by some projections, by 2050 computers controlling robot “brains” will be able to execute 100 trillion instructions per second, on par with human brain activity. How those robots will order and utilize those trillions of instructions, only time will tell!

If nature can engineer it, why can’t we?

The human brain is a marvelous feat of natural engineering. Making sense of this unique organ, which singularly differentiates the human species from all others, requires a conglomeration of neuroscience, mathematics and physiology. MIT neuroscientist Sebastian Seung is attempting to do precisely that: reverse engineer the human brain in order to map out every neuron and connection therein, creating a road map to how we think and function. The resulting map, called the connectome, is likely to be completed by 2020, and would probably be the first tentative step toward creating a machine that is more powerful than the human brain. No supercomputer that can simulate the human brain exists yet. But researchers at IBM’s cognitive computing project, backed by a $5 million grant from the US military research arm DARPA, aim to engineer software simulations that will complement hardware chips modeled after how the human brain works. DARPA is already applying the research to brain implants that allow better control of artificial prosthetic limbs.