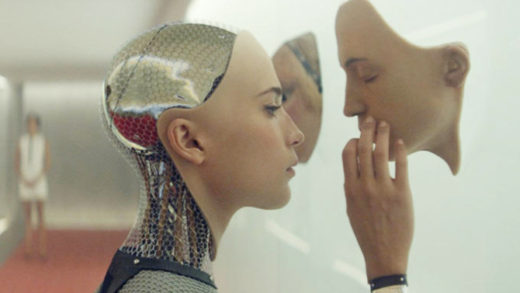

Every so often, a seminal film comes out that ends up being the hallmark of its genre. 2001: A Space Odyssey redefined space and technology in science fiction. Star Wars proved sci-fi could provide blockbuster material, while Blade Runner remains the standard-bearer for post-apocalyptic dystopia. A slate of recent films has broached varying scenarios involving artificial intelligence – from talking robots to sentient computers to re-engineered human capacity. But Ex Machina, the latest film from Alex Garland (writer of the pandemic horror film 28 Days Later and the astro-thriller Sunshine) is the cream of the crop. A stylish, stripped-down, cerebral film, Ex Machina weaves through the psychological implications of an experimental AI robot named Ava possessing preternatural emotional intelligence and free will. It’s a Hitchcockian sci-fi thriller for the geek chic gadget-bearing age, a vulnerable expository inquiry into the isolated meaning of “sentience” (something we will surely contend with in our time) and an honest reproach of technology’s boundless capabilities that somehow manages to celebrate them at the same time.

ScriptPhD.com’s enthusiastic review of this visionary new sci-fi film includes an exclusive Q&A with writer and director Alex Garland from a recent Los Angeles screening.

The preoccupation with superior artificial intelligence as a thematic idea is not a recent phenomenon. After all, Frankenstein is one of the pillars of science fiction – an ode to hypothetical human engineering gone awry. Terminator and its many offshoots brought engineered robots to the fore, whether as saviors or disruptors of humanity. But over the last 16 months, a proliferation of films centered around the incorporation of artificial intelligence in the ongoing microevolution of humanity has signaled a mainstream arrival into the zeitgeist consciousness. The highlights of 2014 ranged from the stylish and ambitious but ultimately overmatched digital reincarnation film Transcendence to the understated yet brilliant digital love story Her to the surprisingly smart Disney film Big Hero 6. This year amplifies that trend, with Avengers: Age of Ultron and Chappie marching out robots Elon Musk could only dream about, among many other later releases. Wedged in between is Ex Machina, taking its title from the Latin deus ex machina (god from the machine), a film that prefers to focus on the bioethics and philosophy of scientific limits rather than the razzle-dazzle technology itself. There is still a tendency for sci-fi films concerning AI to engross themselves in presenting over-the-top science, with ensuing wholesale consequences based on theoretical technology, which ultimately hinders storytelling. Ex Machina is a simple story about two male humans and a highly advanced AI robot named Ava, confined in a remote space together, and the life-changing consequences that their interactions engender, told as a parable for the meaning of humanity. It will be looked back on as one of the hallmark films about AI.

When computer programmer Caleb Smith (Domhnall Gleeson) wins an exclusive private week with his search engine company’s CEO Nathan Bateman (Oscar Isaac), it seems like a dream come true. He is helicoptered to the middle of a verdant paradise, where Nathan lives as a recluse in a locked-down, self-sufficient compound. Only Nathan plans to let Caleb be the first to perform a Turing Test on an advanced humanistic robot named Ava (Alicia Vikander). (Incidentally, the technology of creating a completely new robot for cinema was a remarkable process, as the filmmakers discussed in-depth in the New York Times.) The opportunity sounds like a geek’s dream come true, only as the layers slowly peel back, it is apparent that Caleb’s presence is no accident; indeed, the methodology for how Nathan chose him is directly related to the engineering of Ava. Nathan is, depending on your viewpoint, at best a lonely eccentric and at worst an alcoholic lunatic.

For two thirds of the movie, tension is primarily ratcheted through Caleb’s increasingly tense interviews with Ava. She’s smart, witty, curious and clearly has a crush on him. Is something like Ava even possible? Depending on who you ask, maybe not or maybe it already happened. But no matter. The latter third of the movie provides one breathtaking twist after another. Who is testing and manipulating whom? Who is really the “intelligent being” of the three? Are we right to be cautious and fear AI, even stop it in its tracks before it happens? With seemingly anodyne versions of “helpful robots” already in existence, and social media looking into implementing AI to track our every move, it may be a matter of when, not if.

The brilliance of Alex Garland’s sci-fi writing is his understanding that understated simplicity drives (and even heightens) dramatic tension. Too many AI and techno-futuristic films collapse under the crushing weight of over-imagined technological aspirations, which leave little room for exploring the ramifications thereof. We start Ex Machina with the simple premise that a sentient, advanced, highly programmed robot has been made. She’s here. The rest of the film deftly explores the introspective “what now?” scenarios that scientists and bioethicists will grapple with should this technology come to pass. What is sentience and can a machine even possess self-awareness? Is their desire to be free of the grasp of their creators wrong and should we allow it? Most importantly, is disruptive AI already here in the amorphous form of social media, search history and private data collected by big technology companies?

The vast majority of Ex Machina consists of the triumvirate of Nathan, Caleb and Ava, toggling between scenes with Nathan and Caleb and scenes of Caleb interviewing (experimenting on?) Ava through a glass facade. The numbered interviews that comprise the Turing Test, with their steady progression of intensity, are probably the most compelling scenes of the whole film, and nicely set up the shocking conclusion. In its themes of the human/AI dichotomy and dialogue-heavy tone, Ex Machina compares closely to last year’s brilliant sci-fi film Her, which rightfully won Spike Jonze the Oscar for Best Original Screenplay. In Her, a lonely professional letter writer becomes attached to and eventually falls in love with his computer operating system Samantha. Eventually, we see the limitations of a relationship devoid of human contact and the peculiar idiosyncrasies that make us so distinct, the very elusive elements that Samantha seeks to know and understand. (Think this scenario is far off? Many tech developers feel it is not only inevitable but not too far away.) To that degree, both of these films show two kinds of sentient future technology chasing after human-ness, yearning for it, yet caution against a bleak future where they supplant it. But whereas Her does so in a sweetly melancholy sentimental fashion, Ex Machina plants a much darker, psychologically prohibitive conclusion.

It turns out that the most terrifying scenario isn’t a world in which artificial intelligence disrupts our lives through a drastic shift in technology. It’s a world in which technology seamlessly integrates itself into the lives we already know.

View the official Ex Machina trailer here:

Alex Garland, writer and director of Ex Machina, stayed after a recent Los Angeles press screening to give an insightful, profound question and answer session about the artificial intelligence as portrayed in the film and its relationship to the recent slew of works depicting interactive technology.

Ava [the robot created by Nathan Bateman] knew what a “good person” was. Why wasn’t she programmed by Nathan to just obey the commandments and not commit certain terrible acts that she does in the film?

AG: The same reason that we aren’t programmed that way. We are asked not to [sin] by social conventions, but no one instructs or programs us not to do it. And yet, a lot of us do bad things. Also, I think what you’re asking is – is Ava bad? Has she done something wrong? And I think that depends on how you choose to look at the movie. I’ve been doing stories for a while, and you hand the story over and you’ve got your intentions but I’ve been doing it for long enough to know that people bring their own [bias] into it.

The way I saw this movie was it was all about this robot – the secret protagonist of the movie. It’s not about these two guys. That’s a trick; an expectation based on the way it’s set up. And if you see the story from her point of view, if her is the right word, she’s in a prison, and there’s the jailer and the jailer’s friend and what she wants to do is get out. And I think if you look at it from that perspective what she does isn’t bad, it’s practical. Yes, she tricks them, but that’s okay. They shouldn’t have been dumb. If you arrive in life in this place, and desire to get out, I think her actions are quite legitimate.

So, that’s a very human-like quality. So let’s say that you’re confronted with a life or death situation with artificial intelligence. Do you have any tricks that you would use [to survive]?

AG: If I’m hypothetically attacked by an AI, I don’t know… run. I think the real question is whether AI is scary. Should we fear them? There’s a lot of people who say we should. Some smarter people than me, such as Elon Musk and Stephen Hawking, and I understand that. This film draws parallels with nuclear power and [Oppenheimer’s caution]. There’s a latent danger there in both. Yet, again, I think it depends on how you frame it. One version of this story is Frankenstein, and that story is a cautionary tale – a religious one at that. It’s saying “Man, don’t mess with God’s creationary work. It’s the wrong thing to do.”

And I framed this differently in my mind. It’s an act of parenthood. We create new consciousnesses on this planet all the time – everyone in this room, everyone on the planet is a product of other people having created this consciousness. If you see it that way, then the AI is the extension of us, not separate from us. A product of us, of something we’ve chosen to do. What would you expect or want of your child? At the bare minimum, you’d want for them to outlive you. The next expectation is that their life is at least as good as yours and hopefully better. All of these things are a matter of perspective. I’m not anti-AI. I think they’re going to be more reasonable than us, potentially in some key respects. We do a lot of unreasonable stuff and they may be fairer.

On the subject of reproduction, in this film, Ava was anatomically correct. Had it been a male, would he have been likewise built correctly?

AG: If you made the male AI in accordance with the experiment that this guy is trying to conduct, then yes. This film is an “ideas movie.” Sometimes it’s asking a question and then presenting an answer, like “Does she have empathy?” or “Is she sentient?” Sometimes, there isn’t an answer to the question, either because I don’t know the answer or because no one does. The question you’re framing is not “Can you [have sex] with these AI?” it’s “Where does the gender reside?” Where does gender exist in all of us? Is it in the mind, or in the body? It would be easy to construct an argument that Ava has no gender and it would seem reasonable in many respects. You could take her mind and put it in a male body and say “Well, nothing is substantially changed. This is a cosmetic difference between the two.” And yet, then you start to think about how you talk about her and how you perceive her. And to say “he” of Ava just seems wrong. And to say “it” seems weirdly disrespectful. And you end up having this genderless thing coming back to she.

In addition, if you’re going to say gender is in the mind, then demonstrate it. That’s the question I’m trying to provoke. When they have that conversation halfway through the film, these implicit questions, if gender is in the mind, then what is it? Does a man think differently from a woman? Is that really true? Think of something that a man would always think, and you’ll find a man that doesn’t always think that, and you’ll find a woman that does. These are the implicit questions in the film. They don’t all have answers. The key thing about the gender thing isn’t about who is having sex with whom. It’s that this young man is tasked with thinking about what’s going on inside of this machine’s head. That’s his job is to figure that out. And at a certain point he stops thinking about her and he gets it wrong. That’s the issue – why does he stop thinking about it? If the plot shifts in this worked on the audience or any of you in the same way as they worked on the young man, why was that?

Was one of your implicit messages in the film for people to be more conscientious about what they’re sharing on the internet, whether in their searches or social media, and thereby identifying their interests “out there” and how that might be potentially used?

AG: Yes, absolutely. It’s a strange thing, what zeitgeist is. Movies take ages [to make] sometimes – like two and a half years at least. I first wrote this script about four years ago. And then, I find out as we go through production that we’re actually late to the party. There are a whole bunch of films about AI – Transcendence, Automata, Big Hero 6, Age of Ultron [coming out next month], Chappie. Why is that? There hasn’t been any breakthrough in AI, so why are all these people doing this at the same time? And I think it’s not AIs, I think it’s search engines. I think it’s because we’ve got laptops and phones and we, those of us outside of tech, don’t really understand how they work. But we have a strong sense that “they” understand how “we” work. They anticipate stuff about us, they target us with advertising, and I think that makes us uneasy. And I think that these AI stories that are around are symptomatic of that.

That bit in the film about [the dangers of internet identity], in a way it obliquely relates to Edward Snowden, and drawing attention to what the government is doing. But if people get sufficiently angry, they can vote out the government. That is within the power of an electorate. Theoretically, in capitalist terms, consumers have that power over tech companies, but we don’t really. Because that means not having a mobile phone, not having a tablet, a computer, a credit card, a television, and so on. There is something in me that is worried about that. I actually like the tech companies, because I think they’re kind of like NASA in the 1960s. They’re the people going to the moon. That’s great – we wanted to go to the moon. But I’m also scared of them, because they’ve got so much power and we tend not to cope well with a lot of power. So yes, that’s all in the film.

You mentioned Elon Musk earlier, who looks a little bit like [Google co-founder] Sergey Brin. Which tech executive would you say CEO Nathan Bateman is most modeled after?

AG: He wasn’t exactly modeled after an exec. He was modeled more like the companies in some respect. All that “dude, bro” stuff. I sometimes feel that’s what they’re doing. Not to generalize, but it’s a little bit like that – we’re all buddies, come on, dude. While it’s rifling through my wallet and my address book. It’s misleading, a mixed message. I don’t want to sound paranoid about tech companies. I do really like them, I think they’re great. But I think it’s correct to be ambivalent about them. Because anything which is that powerful and that unmarshalled you have to be suspicious of, not even for what we know they’re doing but for what they might do. So, Nathan is more [representative of] a vibe than a person.

For someone equally as scared of AI as Alex is of tech companies, what is the gap between human intelligence and AI intelligence that exists today? How long before we have AI as part of our daily lives?

AG: One of the great pleasures of working on this film was contacting and discussing with people who are at the edge of AI research. I don’t think we’re very close at all. It depends on what you’re talking about. General AI, yes, we’re getting closer. Sentient machines, it’s not even in the ballpark. It’s similar in my mind to a cure for cancer. You can make progress and move forward, but sometimes by going forward it highlights that the goal has receded by complexity. I know Ray Kurzweil makes some sort of quantified predictions – in 20 years, we’ll be there. I don’t know how you can do that. He’s a smart guy, maybe he’s right. As far as I can tell, in talking to the people who are as close to knowing [about the subject] as I can encounter, it’s something that may or may not happen. And it will probably take a while.

One of the things that’s always bothered me about the Turing Test is that it’s not seeking to know whether or not the thing you’re dealing with is an artificial intelligence. It’s – can you tell that it is? And that’s always bothered me as a kind of weird test. You have a 20,000 question survey, and in the end this one decision comes from a binary point of view. It seems almost flawed.

AG: Yes, you’re completely right. Apart from the fact that it was configured quite a long time ago, it’s primarily a test to see if you can pass the Turing Test. It doesn’t carry information about sentience or potential intelligence. And you could certainly game it. But, it’s also incredibly difficult to pass. So in that respect it’s a really good test. It’s just not a test of what it’s perceived to be a test of, typically. This [experiment in the movie] was supposed to be like a post-Turing Turing Test. The guy says she passed with blind controls, he’s not interested in whether she’ll pass. He’s not interested in her language capacity, which is comparable to a chess computer that wants to win at chess, but doesn’t even know whether it’s playing chess or that it’s a computer. This is the thing you’d do after you pass the Turing Test. But I totally agree with everything you said.

In your research, is there a test that’s been developed that is the reverse of the Turing Test, that seeks to answer whether, in the face of so much technology development, we’ve lost some of our innate human-ness? Or that we’re more machine like?

AG: I think we are machine-like. As far as I know, no test exists like that. The argument at the heart of these things is: is there a significant difference between what a machine can do and what we are? I think how I see this is that we sometimes dignify our consciousness. We make it slightly metaphysical and we deify it because it’s so mysterious to us. And we think, are computers ever going to get up to the lofty heights where we exist in our consciousness? And I suspect that we should be repositioning it here, and we overstate us, in some respects. That’s probably the opposite of what you want to hear.

Do you think that some machine-like quality predates the technology?

AG: I do, yes. I suspect all our human aspects are through evolution, that’s how I think we got here. I can see how consciousness arises from needing to interact in a meaningful and helpful way with other sentient things. Our language develops, and as these things get more sophisticated, consciousness becomes more useful and things that have higher consciousness succeed better. Also, I heard someone talk about consciousness the other day and they were saying “One day, it’s possible that elephants will become sentient.” And that’s a really good example of how we misunderstand sentience. Elephants are already sentient. If you put a dog in front of a mirror, it recognizes its own reflection. It’s self-aware. It knows it’s not looking at another dog. So I put us on that spectrum.

Pertaining to that question, there’s a [critical] moment in the movie where Caleb cuts himself in front of a mirror to make sure that he’s bleeding. And I was going to ask if that’s symbolic of that evolution where consciousness can be undifferentiated between a human and a machine? I was wondering if that scene is a subtle hint that you don’t really know whether you’re the one that is being tested or you’re the tester?

AG: There’s two parts [to that scene]. One is film audiences are literate. I kind of assume that everyone who’s seen that has seen Blade Runner. So they’re going to be imagining to themselves, it’s not her [that is the AI] it’s him. He’s the robot. Here’s the thing. If someone asks of you, here’s a machine, test that machine’s consciousness, tell me if it’s conscious or not, it turns out to be a very difficult thing to do. Because [the machine] could act convincingly that it’s conscious, but that wouldn’t tell you that it is. Now, once you know that, that actually becomes true of us. You don’t know I’m conscious. You think I’m probably conscious, you’re not really questioning it. But you believe you are conscious, and because I’m like you, I’m another human, you assume I’ve got it. But I’m not doing anything that empirically demonstrates that I am conscious. It’s an act of faith. And once you know that, you’ve figured that out about the machine and now you can figure it out about the other person. Weirdly, then you can ask it of yourself. That’s where the diminished sense of consciousness comes into it. The things that I believe are special about me are the things I’m feeling – love, fear. And then you think about electrochemical floods in your brain and the things we’ve been taught and the things we’ve been born with and our behavior patterns and suddenly it gets more and more diminished. To the point that you can think something along the lines of “Am I like a complicated plant that thinks it’s a human?” It’s not such an unreasonable question. So cut your arm and have a look!

In thinking about being a complicated plant, that sounds very isolating. Nathan was really isolated in the movie as well. Do you think that this is indicative of where we’re going with our devices and internet and social media being our social outlet rather than actually socializing? Is AI taking us down that path of isolation?

AG: It may or may not. That could be the case. I’m not on Twitter, I’ve never been on Facebook, I’m not really too [fond of] that stuff. From the outside looking in, it looks like that’s the way people communicate – maybe in a limited way, but in a way I don’t really know. The thing about Nathan is we’re social animals, and our behavior is incredibly modified by people around us. And when we’re removed from modification, our behavior gets very eccentric very fast. I think of it like a kid holding on to a balloon and then they let it go and in a flash it’s gone. I know this because my job is I’m a writer and if I’m on a roll, I can spend six days where I don’t leave the house, I barely see my kids in the corridor, but I’m mainly interested in the fridge and the computer. And I get weird, fast. It’s amazing how quickly it happens. I think anyone who’s read Heart of Darkness or seen the adaptation Apocalypse Now, [Nathan] is like this character Kurtz. He spends too much time upriver, too much time unmodified by the influences that social interactions provide.

Ex Machina goes into wide release in theaters on April 10, 2015.
